This year, the federal government announced it will phase out its use of all privately operated prisons. Many progressives have heralded this as a victory. It is not.

Although for-profit prisons are transparently evil, they house a very small percentage of people ensnared by American mass incarceration. The problem with for-profit prisons is prison, not profit. Without an accompanying effort to draw down the reach, power, and discretion of criminal-justice institutions, the injuries these institutions inflict will be redistributed rather than redressed. When, for instance, federal courts have ordered states to reduce prison inmate populations, the effect has mainly been to increase the strain on already overburdened state and local courts, while inmates are merely reassigned from state to local jails or “resentenced” (as when judges retroactively change sentences after legal statutes change). In large states like California and Michigan, this has forced courts to “do more with less” in expediting the criminal-justice process. That means that judges have had to industrialize how they sentence people.

Government, and especially the overburdened criminal justice system, is supposed to do two things at once: to be more economically efficient and more ethically just. That is where the U.S.’s most spectacularly capitalized industry sector steps in: Silicon Valley caters to the fantasy that those two incompatible goals can be met through a commitment to data and a faith in the self-evident veracity of numbers.

This spirit animates a software company called Northpointe, based in the small, predominantly white town of Traverse City, in northern Michigan. Among other services, Northpointe provides U.S. courts with what it calls “automated decision support,” a euphemism for algorithms designed to predict convicts’ likely recidivism and, more generally, assess the risk they pose to “the community.” Northpointe’s stated goal is to “improve criminal justice decision-making,” and they argue that their “nationally recognized instruments are validated, court tested and reliable.”

Northpointe is trying to sell itself as belonging to the best tradition of Silicon Valley startup fantasies. The aesthetic of its website is largely indistinguishable from that of every other software company pushing services like “integrated web-based assessment and case management” or “comprehensive database structuring, and user-friendly software development.” You might not even infer that Northpointe’s business is to build out the digital policing infrastructure, were it not for small deviations from the software-company-website norm, including a scrubber bar of logos from sheriffs’ departments and other criminal-justice institutions, drop-down menu items like “Jail Workshops,” and, most bizarrely, a picture of the soot-covered hands of a cuffed inmate. (Why are those hands so dirty? Is the prisoner recently returned from fire camp? Is it in the interest of Northpointe to advertise the fact that convict labor fights California’s wildfires?)

Moreover, in the Silicon Valley startup tradition, Northpointe has developed what it views as an objective, non-ideological data-driven model to deliver measurable benefits to a corner of the public sector in need of disruption. If only the police, the courts, and corrections departments had better data or a stronger grasp of the numbers — if only they did their jobs rationally and apolitically — then we could finally have a fair criminal justice system. This is essentially the neoliberal logic of “smaller, smarter government,” spearheaded in the U.S. by Bill Clinton and Al Gore, who ran a “reinventing government” task force as vice president, and it has defined what is regarded as politically permissible policy ever since.

But Northpointe’s post-ideological fantasies have proved to be anything but in practice. At the end of May 2016, ProPublica published a thorough and devastating report that found that Northpointe’s algorithms are inaccurate — in that they have assigned high risk values to people who are not recidivist — as well as racist, consigning a lot of brown, black, and poor white bodies to big houses under the cover of the company’s faux-progressive rhetoric about “embracing community” and “advancing justice.”
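The kind of inaccuracy at issue, high risk scores assigned to people who did not go on to reoffend, is what statisticians call a false positive, and it can be compared across groups. The following is a minimal sketch of that comparison, not ProPublica's actual method or data: the function name, the record layout, and the toy records are all assumptions made up for illustration.

```python
from collections import defaultdict

def false_positive_rates(records):
    """Share of non-reoffenders labeled high risk, per group.

    records: iterable of (group, labeled_high_risk, reoffended) tuples.
    The layout is a hypothetical one chosen for this sketch.
    """
    flagged = defaultdict(int)  # non-reoffenders who were labeled high risk
    total = defaultdict(int)    # all non-reoffenders
    for group, high_risk, reoffended in records:
        if reoffended:
            continue  # false positives only concern people who did not reoffend
        total[group] += 1
        if high_risk:
            flagged[group] += 1
    return {group: flagged[group] / total[group] for group in total}

# Synthetic, illustrative records only. These are NOT real data.
toy_records = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, True),
]
rates = false_positive_rates(toy_records)
```

In this made-up example, two of group A's three non-reoffenders were labeled high risk, against one of group B's three. A gap of that kind between groups is the sort of disparity the report describes.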

The ProPublica report confirmed the suspicions of many activists and critics that emerging technological approaches designed to streamline the U.S.’s criminal justice system and make it fairer might in fact do the opposite. Northpointe, of course, disputes ProPublica’s analysis. In a letter to the publisher, they wrote that “Northpointe does not agree that the results of your analysis, or the claims being made based upon that analysis, are correct or that they accurately reflect the outcomes from the application of the model.”

Of course, their model is proprietary, so it is impossible to know exactly how it works. ProPublica did manage to find that it is based on 137 Likert-scale questions that are broken down into 14 categories. Some of these have obvious relevance, like criminal history and gang membership. Others are specious and confusing, like leisure/recreation (“Thinking of your leisure time in the past few months … how often did you feel bored?”), social isolation (“I have never felt sad about things in my life”), and “criminal attitudes” (“I have felt very angry at someone or something”).

Northpointe makes for an easy target for critics of predictive analytics in contemporary criminal justice. It’s a for-profit company, with an inherent interest in expanding the state’s carceral reach. Its business model depends on a criminal-justice system oriented toward perpetually churning people through its courts and being overburdened. The more overtaxed a court, the more attractive a program that can tell a judge how they ought to rule. But to blame mass incarceration on companies like Northpointe would be akin to blaming private prisons (which house about 11 percent of prisoners) for mass incarceration. The public sector may work with the private sector to outlay some costs and provide some services, but the government makes the market.

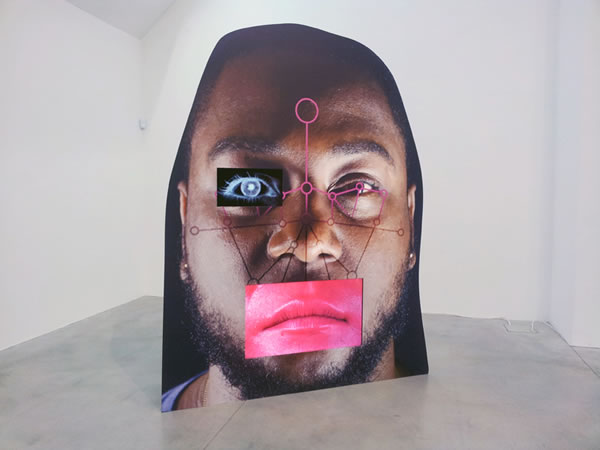

A common critique of algorithmic systems like Northpointe’s is that they replicate existing bias. Because people program algorithms, their biases or motives get built in. It seems to follow, then, that were we to open up the algorithms, we could train them out of their prejudicial ignorance and thereby solve the problems of racism, sexism, queerphobia, and so on that are otherwise written invisibly into the source codes of everyday life. We may not be able to reprogram humans to be unbiased, but we can rewrite algorithms.

But the problem with predictive policing goes beyond Northpointe or biased algorithms. Focusing on the algorithms relies on a delimited analysis of how power works: If only we could have woke programmers, then we would have woke systems. Swap out “programmers” for “cops” and you have a version of the “few bad apples” theory of policing, which ignores the way in which violence and repression are inherent and structural within law enforcement. The problem with predictive policing algorithms, and the fantasy of smart government it animates, is not that they can “become” racist, but that they were built on a law-enforcement strategy that was racist all along.

Northpointe is emblematic of the sort of predictive and data-driven approaches that have become accepted commonsense policing practices, techniques such as hot-spot policing and situational awareness modeling. And while these methods are often presented as socially or politically “neutral,” there is an enormous body of research that has demonstrated repeatedly that they are not. But what made data-driven predictive policing seem like common sense?

To begin to answer that question, one must trace the disparate histories of predictive policing’s component parts through a series of crises and conjunctions. Actuarial techniques like Northpointe’s (or the older Level of Service Inventory–Revised, another recidivism-risk-assessment battery) emerge out of insurance companies’ demand for risk management during the late 19th and early 20th centuries’ chronic economic crises.

Two more pieces of the puzzle, biometrics and organized surveillance, emerge in the 18th and 19th centuries out of the shifting tactics for maintaining white supremacy in both southern slave plantations and northern cities. Simone Browne, for example, has shown that New York’s colonial “lantern laws,” which forbade unaccompanied black people from walking the streets at night without carrying a lit lantern, were originally instituted because of white fear of antislavery insurrection.

And lastly, statistical techniques of crime prediction come down to us through the early-20th century Chicago School of sociology, which swapped cruder theories of physically inherent racial difference for more refined spatio-cultural theories of industrial capitalist “social disorganization.” These shored up sexuality and the color line as the key arbiters of cultural degradation, as in studies positing a “culture of poverty” that generates criminality. This is Roderick Ferguson’s point in Aberrations in Black when he argues that “the Chicago School’s construction of African American neighborhoods as outside heteropatriarchal normalization underwrote municipal government’s regulation of the South Side, making African American neighborhoods the point at which both a will to knowledge and a will to exclude intersected.”

All these histories are individually crucial. But there is a particular point when they all converged: at the 1993 election of Rudy Giuliani as mayor of New York City. A combination of white resentment against David Dinkins, the city’s first black mayor; a referendum on Staten Island’s secession from New York City; and incessant dog whistling about “improving the quality of life” in the city allowed Giuliani to win the mayoral race. The “quality of life” issue stemmed from the unprecedented spike in homelessness and poverty in the wake of the city’s 1970s fiscal crisis. The racist political economy of New York City ensured that poverty and homelessness — coded as “disorder” — fell disproportionately to people of color.

None of this was accidental. Robert Moses was a key player in a power elite that famously engineered New York as an apartheid city in the 1950s and 1960s, just as many people of color were immigrating there, particularly from Puerto Rico and the American South. They were largely renters, living rent-gouged in the subdivided former homes of white families who had taken advantage of the GI Bill and home-loan programs to move to the suburbs. When New York City’s industrial core collapsed in the 1960s, it devastated working class neighborhoods, where poverty skyrocketed and landlords systematically abandoned property. Aside from industry, black and Latinx workers had won the greatest labor victories and made the deepest inroads in the public sector. After the federal government induced the fiscal crisis of the ’70s and crippled the municipal government, the city cut one-third of its workforce, further decimating the black and Latinx working and middle classes.

As the city sought to lure major corporate headquarters, financial houses, and wealthy real estate investors back from the suburbs in the 1980s, controlling this racially coded “disorder” became the city government’s paramount concern. The police did this by combining a generalized ratcheting up of displays of spectacular violence meant to “retake” places like Tompkins Square Park from the queer and homeless communities that had set up there, with a “community policing” strategy that focused on outreach to “community leaders” to make the department more responsive. Dinkins’ administration also made harassing black “squeegee men” a centerpiece of its crime fighting effort, a tactic that Giuliani, while campaigning, would point to as a matter of “restoring the quality of life.” That was thinly veiled code for aggressively targeting the poor, people of color, queer people, sex workers, and teenagers as part of a general campaign to, as Police Strategy No. 5 put it, “reclaim the public spaces of New York.”

This policing strategy “worked” in that, by the early 1990s, crime rates had begun to fall, real estate values skyrocketed, and “undesirable” populations had been pushed further to the margins. It also fomented the toxic electoral mood that got Giuliani elected. He appointed William Bratton as police commissioner (the first of his two tours of duty in the position), and Bratton would implement the infamous policing strategy known as “Broken Windows.”

Broken Windows is usually explained as the idea that police should rigorously enforce violations of small crimes with maximum penalties to both deter people from committing larger crimes and incapacitate people who cannot be deterred. But while that is an accurate depiction of how Bratton and other backers have described the approach to the press, the actual Broken Windows theory, developed in the early 1980s and revised through the mid-1990s, is never so coherent. Critics (who have often been cops) have repeatedly pointed this out from the moment the Atlantic first published the article by James Q. Wilson and George L. Kelling that gave the approach its name in 1982. I am partial to Rachel Herzing’s recent description of Broken Windows in Policing the Planet, where she describes the theory as “not much of a theory at all,” but rather “an incantation, a spell used by law enforcement, advocates, and social scientists alike to do everything from designing social service programs to training cops.”

To the extent that Wilson and Kelling’s case can be condensed into a logical argument, it is this: Reforms designed to address corruption and racism in American police departments have incapacitated their ability to fulfill the order-maintenance component of their mission. This crippled American cities in the 1970s by instilling a culture of disorder in the streets and a fatalist sense of impotency in police departments. To fix this, these reforms must be abolished. In their stead, police should walk around more than they drive, because it is hard to be scared of someone when they are in a car (?). They should “kick ass” more than they issue summonses or arrests, because it is more efficient and the criminal justice system is broken (??). They should use their subjective judgment to decide who will be on the receiving end of this order maintenance, rather than defer to any legal regime (???). They should do all this without worrying about whether what they do would stand up in a court of law, because the interests of the community far outweigh the individualized injustice that police may mete out (????). That, plus a chilling nostalgia for Jim Crow and the befuddling decision to rest the entire scientific basis for their case on a study organized by Philip Zimbardo, who also ran the Stanford Prison Experiment (among the most unethical social science studies ever performed), gives the gist of the thing.

Even Bratton’s second-in-command during his first stint as NYPD commissioner in the Giuliani years, Jack Maple, thought that the Broken Windows theory was bogus. He called it the “Busting Balls” theory of policing and said that it was the oldest and laziest one in the history of the profession. He thought that only academics who had never actually worked on the street could ever think it would effectively drive down crime. In practice, he argued, non-systematically attacking people and issuing threats would displace unwanted people to other neighborhoods, where they could continue to “victimize” innocents. Because Broken Windows did not advocate mass incapacitation through mass incarceration, Maple thought it ineffective.

So the strategy that Bratton implemented was not the Broken Windows detailed in the Atlantic essay. Nor was it, as it is sometimes described, a hardline interpretation of Wilson and Kelling’s ideas. But Broken Windows theory did offer Bratton and Maple an intellectual scaffold for reversing what had been considered the best practices in policing for decades. Over more officers and equipment, Bratton and Maple wanted more intelligence. Broken Windows provided a reason to replace six-month or annual target benchmarks for reduction of “index crimes” (crimes reported in Part I of the FBI’s Uniform Crime Reports: aggravated assault, forcible rape, murder, robbery, arson, burglary, larceny-theft, and motor-vehicle theft) with the monitoring of granular crime data on a geographic information system in near real time, to meet day-to-day targets for reductions in the full range of crimes, and not just the most serious.

What Bratton and Maple wanted was to build a digital carceral infrastructure, an integrated set of databases that linked across the various criminal-justice institutions of the city, from the police, to the court system, to the jails, to the parole office. They wanted comprehensive and real-time data on the dispositions and intentions of their “enemies,” a term that Maple uses more than once to describe “victimizers” who “prey” on “good people” at their “watering holes.” They envisioned a surveillance apparatus of such power and speed that it could be used to selectively target the people, places, and times that would result in the most good collars. They wanted to stay one step ahead, to know where “knuckleheads” and “predators” would be before they did, and in so doing, best look to the police department’s bottom line. And they wanted it to be legal.

For this corporate restructuring of policing to be successful, they had to populate the city’s databases with as many names as possible. But these institutions were reluctant to adopt new tech — for reasons of expediency (people hate learning new systems, especially when they are untested) as well as for moral reservations about automating criminal justice.

If Bratton and Maple could expand the number of arrests the system was handling, they could force the issue. By their own admission, they created a deliberate crisis in the accounting capacities of New York City’s criminal justice institutions to necessitate the implementation of digital technologies. For instance, they ordered enormous sweeps aimed at catching subway fare-beaters, in which the police charged everyone with misdemeanors instead of issuing warnings or tickets. This flooded the courts with more cases than they could handle and overwhelmed public defenders. To cope, the courts automated their paperwork and warrant-notifications system, and public defenders turned increasingly to plea deals. This piled up convictions, inflating the number of people with criminal records and populating interoperable databases.

Case information was then fed to the NYPD’s warrant-enforcement squad, which could then organize their operations by density (where the most warrants were concentrated) rather than severity of the crime. Most warrants were served for jumping bail, a felony that many don’t realize they are even committing. Faced with the prospect of abetting a felon, many people that the police questioned in the targeted enforcement areas were willing to give up their friends and acquaintances to stay out of trouble. The surveillance net expanded, and the data became more granular. Officers in areas with high concentrations of incidents, newly empowered to determine how to police an area based on their idea of how risky it was, would step up their aggression in poor, black, Asian, and Latino neighborhoods, in queer spaces, and in places where they believed sex workers did their jobs. It was, and is, Jim Crow all over again, but this time backed by numbers and driven by officers’ whims.

By providing the framework for a massive increase in aggressive police behavior, Broken Windows made this possible. It gave a rationale for why officers should be permitted to determine criminal risk based on their own subjective interpretations of a scene in the moment rather than abiding by strictly established protocols governing what was and was not within their jurisdiction. This helped support the related notion that police officers should operate as proactive enforcers of order rather than reactive fighters of crime. That is, rather than strictly focus on responding to requests for help, or catching criminals after a crime occurred, Broken Windows empowered cops to use their own judgment to determine whether someone was doing something disorderly (say, selling loose cigarettes) and to remove them using whatever force they deemed appropriate. Broken Windows plus Zero Tolerance would equal an automated carceral state.

A carceral state is not a penal system, but a network of institutions that work to expand the state’s punitive capacities and produce populations for management, surveillance, and control. This is distinct from the liberal imagination of law and order as the state redressing communal grievances against individual offenders who act outside the law. The target of the carceral state is not individuated but instead group-differentiated, which is to say organized by social structures like race, class, gender, sexuality, ability, and so on. As Katherine Beckett and Naomi Murakawa put it in “Mapping the Shadow Carceral State,” the carceral “expansion of punitive power occurs through the blending of civil, administrative, and criminal legal authority. In institutional terms, the shadow carceral state includes institutional annexation of sites and actors beyond what is legally recognized as part of the criminal justice system: immigration and family courts, civil detention facilities, and even county clerks’ offices.”

In a liberal law-and-order paradigm, individuals violate norms and criminal codes; in the carceral state, racism, which Ruth Wilson Gilmore defines as “the state-sanctioned or extralegal production and exploitation of group-differentiated vulnerability to premature death,” is the condition of possibility for “criminality.” The political economic structures of a carceral state deliberately organize groups of people with stratified levels of precarity through mechanisms like red-lining, asset-stripping, predatory lending, market-driven housing policies, property-value funded schools, and so on.

The consequence of these state-driven political decisions is premature death: Poor people, who in American cities are often also black, Latinx, Asian, and First Nation, are exposed to deadly environmental, political, sexual, and economic violence. Efforts to survive in deliberately cultivated debilitating landscapes are determined to be “criminal” threats to good order, and the people who live there are treated accordingly. Lisa Marie Cacho argues that the effect is social death: The “processes of criminalization regulate and regularize targeted populations, not only disciplining and dehumanizing those ineligible for personhood, but also presenting them as ineligible for sympathy and compassion.” This is how technically nonpunitive institutions become punitive in fact, as in immigration detention, civil diversion programs that subject bodies to unwanted surveillance and legal precarity, educational institutions that funnel children into a pipeline to prison, and civil-injunction zones that render traversing space a criminal act.

The carceral state’s institutions and cadres are both public and private. For example, a company like Northpointe that develops tools designed to rationalize and expedite the process of imprisoning people, is not technically a part of any criminal justice institution, but it automates the mechanics of the carceral state. Securitas (née “Pinkerton”) might not be a state agency, but it does the labor of securing the circulation of capital to the benefit of both corporations and governments.

For any buildup in surveillance to be effective in sustaining a carceral state, the police must figure out how to operationalize it as a management strategy. The theoretical and legal superstructures may be in place for an expanded conception of policing, but without a rationalized command-and-control process to direct resources and measure effectiveness, there is little way to make use of the new data or assess whether the programs are accomplishing their mission of “driving down crime.” In 1994, the NYPD came up with CompStat to solve this problem, and we are living in its world.

Depending on who is recounting CompStat’s origin story, it stands for “Compare Statistics,” “Computerized Statistics,” or “Computer Statistics.” This spread is interesting, since all three names imply different ideas about what computers do (as well as a total misunderstanding of what “statistics” are). Let’s take these from least to most magical. “Compare Statistics” designates computers as capable merely of the epistemological function of rapidly comparing information curated and interpreted by people. “Computerized Statistics” implies an act of ontological transformation: The information curated and interpreted by humans is turned into “Big Data” that only computers have the capability of interpreting. “Computer Statistics” instantiates, prima facie, an ontological breach, so that the information is collected, curated, and analyzed by computers for its own purposes rather than those of humans, placing the logic of data squarely outside human agency.

These questions aren’t just academic. The Rand Corporation, in its 2013 report on predictive policing, devotes an entire section to dispelling “myths” that have taken hold in departments around the country in the wake of widespread digitization of statistical collection and analysis. Myths include: “The computer actually knows the future,” “The computer will do everything for you,” and “You need a high-powered (and expensive) model.” On the spectrum of Compare Statistics to Computer Statistics, Rand’s view is closest to Compare, but companies like Northpointe are at the other end. That industry believes itself to be in the business of building crystal balls.

And were one to embark on a project of separating out industry goals from the ideologies and practices of smart government, one would find it impossible. Massive tech companies like Microsoft, IBM, Cisco Systems, and Siemens, as well as smaller, though no less heavy, hitters like Palantir, HunchLab, PredPol, and Enigma are heavily invested in making government “smarter.” Microsoft and New York City have a profit-sharing agreement for New York City’s digital surveillance system, called AWARE (the Automated Workspace for the Analysis of Real-Time Events), which has recently been sold to cities like São Paulo and Oakland.

CompStat sits at the fountainhead of an increasingly powerful movement advocating “responsive,” “smart” government. It has become ubiquitous in large police departments around the world, and in the U.S., federal incentives and enormous institutional pressures have transferred the burden of proof from those departments that would adopt it to those departments that don’t.

Major think tanks driving the use of big data to solve urban problems, like New York University’s Center for Urban Science and Progress, are partially funded by IBM and the NYPD. Tim O’Reilly explicitly invokes Uber as an ideal model for government. McKinsey and Co. analysts advocate, in a Code for America book blurbed by Boris Johnson, that city government should collect and standardize data, and make it available for third parties, who can then use this to drive “significant increases in economic performance for companies and consumers, even if this data doesn’t directly benefit the public sector agency.” In the context of a carceral state, harassing and arresting poor people based on CompStat maps delivers shareholder value for Microsoft, speculative material for some company whose name we don’t know yet, and VC interest in some engineers who will promise that they can build “better” risk analytics algorithms than Northpointe.

A hybrid labor management system and data visualization platform, CompStat is patterned on post-Fordist management styles that became popular during the 1980s and early 1990s. Although it draws liberally from business methodologies like Six Sigma and Total Quality Management, it is most explicitly indebted to Michael Hammer and James Champy’s Re-engineering the Corporation, which calls for implementing high-end computer systems to “obliterate” existing lines of command and control and bureaucratic organization of responsibility. Instead of benchmarks and targets set atop corporate hierarchies in advance of production, Hammer and Champy advocate a flexible management style that responds, in real time, to market demands. Under their cybernetic model, the CEO (police commissioner) would watch franchisees’ (precincts) performance in real time (CompStat meetings), in order to gauge their market value (public approval of police performance) and productivity (crime rates, arrest numbers).

Under CompStat, responsibility for performance and, in theory, strategy, devolves from central command to the middle managers (a.k.a. precinct commanders), who must keep their maps and numbers up to date and are promoted or ousted based on their ability to repeatedly hit target numbers (in their case, crime rates and arrests). Because the responsibility for constantly improving the bottom line has been transferred to the precinct commanders, they lean on their sergeants when the numbers are bad, and the sergeants in turn lean on their patrol officers.

CompStat also gives police managers a simple, built-in way of easily telling whether or not their cops are doing their jobs. They can look at maps to see if they’ve changed. This simplicity has the added bonus for governments of providing easy “transparency,” in that anybody can look at a map and see if there are more or fewer dots on it than there were a week ago. More dots mean the cops are failing. Fewer dots mean they’re doing their job.

This appeals to the supposed technocratic center of American politics, which regards numbers as neutral and post-political. It lends apparent numerical legitimacy to suspicions among the privileged classes about where police ought to crack heads hardest. It also, in theory, saves money. You don’t have to deploy cops where there aren’t incidents.

CompStat is rooted in a sort of folk wisdom about what statistics are: uncomplicated facts from empirical reality that can be transformed automatically and uncontroversially into visual data. The crimes, the logic goes, are simply happening and the information, in the form of incident reports, is already being collected; it merely should be tracked better. Presumably, CompStat merely performs this straightforward operation in as close to real time as possible. Departments can then use these “statistics” to make decisions about deployment, which can be targeted at spaces that are already “known” to have a lot of crime.

But this overlooks the methodological problems about how data is to be interpreted as well as the ways in which the system feeds back into itself. Statistics are not raw data. Proper statistics are deliberately curated samples designed to reflect broad populational trends as accurately as possible so that, when subjected to rigorous mathematical scrutiny, they might reveal descriptive insights about the composition of a given group or inferential insights about the relationships between different social variables. Even in the best of cases, statistics are so thoroughly socially constructed that much of social science literature is devoted to debating their utility.

When CompStat logs arrest information in a server and overlays it on a map, that is not statistics; it is a work summary report. The “data” collected reflects existing police protocol and strategies, along with police officers’ intuitive sense of which places need to be policed and which bodies need to be targeted, and not much else. New York City cops don’t arrest investment bankers for snorting their weight in cocaine because they are not doing vertical patrols in Murray Hill high-rises. They are not doing vertical patrols in Murray Hill high-rises not simply because the police exist to protect rather than persecute the wealthy, but because they have labored for 20 years under a theory of policing that effectively excludes affluent areas from routine scrutiny. The theory as much as says so in its name: These high-rises don’t have broken windows.

Similarly, the National Center for Women and Policing has cited two studies that show that “at least 40 percent of police officer families experience domestic violence,” contrasted with 10 percent among the general population. Those incidents tend not to show up on CompStat reports.

The reverence with which CompStat’s data is treated is indicative of a wider fetishization of numbers, in which numbers are treated as more real than social structures or political economy. Indeed, it often seems as though metrics are all that there is.

The transparent/responsive/smart government movement argues for reconstituting governance as a platform, transforming the state into a service-delivery app. Its thought leaders, like Michael Flowers and former Maryland governor Martin O’Malley, routinely point to CompStat as the fountainhead of post-political governance, as if such a thing were possible. But as feminist critics of technology like Donna Haraway and Patricia Ticineto Clough have long pointed out, technology is political because it is always, everywhere, geared toward the constitution, organization, and distribution of differentiated bodies across time and space. And bodies are politics congealed in flesh. CompStat is designed to maximize the efficiency and force with which the state can put police officers’ bodies into contact with the bodies of people that must be policed.

And how do police determine which bodies must be policed? They do it based on what “feels” right to them, the digital inheritance of Broken Windows. Even cops who are not racist will inevitably reproduce racialized structures of incarceration because that is what policing is. In a city like New York, in a country like the U.S., that level of police discretion always points directly at the histories of unfreedom for black, brown, and queer people that are the constitutive infrastructures of our state.

Northpointe’s algorithms will always be racist, not because their engineers may be bad but because these systems accurately reflect the logic and mechanics of the carceral state, mechanics that have been digitized and sped up by the widespread implementation of systems like CompStat. Policing is a filter, a violent sorting of bodies into categorically different levels of risk to the commonweal. That filter cannot be squared with the liberal ideas of law, order, and justice that a lot of people still think the United States is based on. Programs like CompStat are palliative. They seem to work in data, in numbers, in actual events that happened outside of the context of structural inequalities like racism, patriarchy, or heteronormativity. But CompStat links the interlocked systems of oppression that durably reproduce the violence of the carceral state to a fantasy of data-driven solutionism that reifies and reproduces our structural evils. Whether a human is remanded to a cage because of their race and sex or because of a number on a dashboard means very little once the door slams shut.