The Italian surgeon Cesare Lombroso developed his theory of “physiognomy,” the idea that physical traits expressed inborn criminality, in the most tech-startup way possible. In the 1860s, while Lombroso was working as a psychiatry professor at the University of Pavia, a local Italian worker died in prison, where he had been incarcerated for 20 years for stealing food. A trained physician, Lombroso was asked to perform the autopsy, during which he had a realization: The criminal, and in fact all criminals, looked to his eyes like apes. What if, Lombroso reasoned, this meant that criminality was both a natural-born trait and, as leading scientists of his era were proposing for all sorts of human failings, connected to “racial degeneration”?

Prevailing logics of late-18th- and 19th-century criminal justice held that crime was a by-product of human nature and a consequence of rational decision-making. People committed crime because they thought it made sense in context, which meant that punishment should work as a deterrent, increasing the cost of being caught. To prevent crime, the state had to make it a bad option. But deterrence, trials, and reactive enforcement were slow and cumbersome and didn’t seem to effectively make people more law-abiding. So Lombroso, looking at a dead and butchered day laborer, imagined a different way.

What if, he thought, it was possible to streamline criminal justice by just looking at a person? What if their face could tell you whether or not they were prone to criminality? Even better, what if you could systematically measure a person’s face and use that process to determine their level of “criminal atavism”? This would be more efficient and socially beneficial than counting on deterrence to control the barbarous masses (and being constantly disappointed).

Here was a way to use science and some apparent common sense to make the world safer, more rational, and more efficient. This objective measurement scheme would force inefficient judicial institutions to abandon ineffective approaches and help law enforcement and lay citizens alike to know who to hold in suspicion. It would, in short, make the world a better place. The problem was that this approach, in trying to map the coordinates of human nature onto an “objective” matrix, merely reinscribed colonial and imperial racial and sexual logics, as well as an endemic bourgeois hatred of the working classes. Lombroso, of course, didn’t think of it that way. Substituting his best intentions for serious inquiry, he imputed a value system designed to stratify people through biology.

Physiognomy was eventually debunked, but its legacy of what Cedric Robinson calls racial thinking has lived on in Silicon Valley’s cultural imaginaries. Lombroso’s theory was, like a great deal currently coming out of the world of tech, an engineering “solution” in search of a problem. It’s no surprise that all types of Victorian theorizing, often translated into more contemporary languages (think “genetics” instead of “racial atavism,” or “evolutionary psychology” and Jordan Peterson’s lobster tales instead of the “feminine sphere” or the “weaker sex”), so animate research and product development that, to quote Virginia Eubanks, the tech industry is “automating inequality.” Physiognomy and related bunk sciences like phrenology have never truly left, and the heavily capitalized effort to turn the world into a datafied engineering project means that they are more central than ever before.

In the past two years, a pair of machine-learning studies caused an uproar, stoking concerns that physiognomy and phrenology are “back.” In the first, “Automated Inference on Criminality Using Face Images,” researchers trained neural nets on ID photos supplied by an undisclosed police department in China to try to determine whether there were physiological bases for “criminality.” In the second, “Deep Neural Networks Are More Accurate Than Humans at Detecting Sexual Orientation From Facial Images,” researchers trained software on profile pictures and reported that it did better than humans at guessing a person’s sexual orientation from their face.

Neither study’s methodologies, conclusions, or ethics held up well under scrutiny. In fact, both are now “basic” case studies for the online Calling Bullshit course, which is dedicated to debunking overheated data-science claims.

First among those studies’ failures were their dubious ethical premises. It’s not clear what good is served by further building out and capacitating the contemporary tech-assisted carceral surveillance state and targeting it at human souls. And in a country where LGBTQ people are routinely harassed, suffer professional punishments, and worse, it is indefensible to create an algorithm that could theoretically out people at scale.

But there were also a number of more basic methodological problems with the studies. For instance, the criminality study’s training data compared convicted people’s ID photos against others’ publicly available profile pictures, raising the question of whether anyone would like their character judged by a state ID photo rather than a curated headshot. The decision to use photos of convicted people to denote criminals also raised the obvious question of whether the algorithm was detecting a signal about “criminality” or simply learning what the men most likely to be convicted by the existing system look like.

As interesting as the studies’ glaring failures, though, is what the popular commentary about them revealed. Many commentators have characterized the digitized “return” of phrenology and physiognomy as a problem of “bad science” and “bad ethics” that places studies such as these beyond the pale. For example, an article at Splinter on the criminality study starts like this:

“In ancient Greece, the philosopher Aristotle once said that ‘it is possible to infer character from features.’ Through the 1800s, scientists took his cue, pushing theories that bad people could be identified through their looks alone. Then, of course, science happened, and Aristotle’s comment was taken with a mountain of salt” [Emphasis added.]

“Science” here functions as a corrective for those technologists who seek to push possibilities too far. “Better” science and “better” ethics do not ask too much of their technologies. This posits digital physiognomy as overreaching, redeeming the more sober mundanities of biometrics in general.

To talk about phrenology “reappearing” in contemporary technologies misses the fact that its underlying logic never left. To the extent that “science” debunked the most egregious elements of physiognomy, it did so by driving the same premises of deducing character from physical characteristics deeper into the body. Rather than look for a person’s essential nature in their face, research looked for it in DNA, in hormone levels, in imperceptible but nonetheless determinate physical materials. This process, often against the aims of the researchers, has had the predictable effect of re-encoding racial taxonomies in genomic studies, rigidifying sexuality as “genetic,” and legitimating class exploitation as a product of physiological inheritance. The widely derided criminality and sexuality studies both rely (spuriously) on this type of “legitimate” research to justify their claims.

Most refutations of the criminality study included an explanation that “criminality” is likely driven by a combination of biological and environmental conditions. In particular, they pointed out that testosterone levels have been shown to contribute to the type of “risky” behavior that leads to criminality. This correlation between testosterone and behavior seems to hold across a number of animal species, but animals do not commit crimes. Testosterone levels might be correlated with certain types of behavior, but biology tells us literally nothing about “crime,” a category of human social organization.

If we’re looking for a real “root cause” of “criminality,” we should look not to biological factors but to politics, which organizes how a society decides what is and is not a crime and how punishment is to be administered. The sort of risk taking and violence associated with testosterone is, after all, just as often lauded or ignored as it is considered dangerous. Politicized attitudes about race, class, and sex tend to determine whether biological factors are seen in one way or another.

Arguing that physiognomy and phrenology were dominant until “of course, science happened” is a misrepresentation. It wasn’t “science” that happened; it was the civil rights movement. The root problem with the recent phrenology and physiognomy studies is their political implications: that they could give a scientific-seeming alibi for monitoring, impoverishing, or incarcerating people, much like earlier social Darwinism helped legitimate racial regimes. If that is the main problem with the “pseudosciences” of phrenology and physiognomy, we need to consider how biometrics in general — routinized facial recognition, gait recognition, and “behavioral” biometrics to list just a few — performs these same core functions.

Depending on how you interpret the history, biometrics traces back to one of a few origin points, none of them particularly proud. The most common lineage runs to Alphonse Bertillon, the 19th-century French police officer who pioneered the use of anthropometry (the measurement of the human body) for police identification and institutionalized the mug shot as a means of rationalizing the identification and classification of “criminals.” The French government adopted the approach to enroll Roma peoples en masse into its proto-database to better surveil and contain them. Bertillon was also the “expert witness” whose testimony landed Alfred Dreyfus, a Jewish military officer falsely accused of treason, on Devil’s Island. Bertillon claimed to have developed a handwriting identification system that incontrovertibly proved Dreyfus’s treason. It was later found to be bunk, and it would not be the last time that dubious forensic science was used to wrongfully incarcerate someone from a marginalized population.

Biometry can also be traced to the British colonial government in India. In the aftermath of the 1857 Indian War of Independence, the British decided that they needed a scientific technology with which to differentiate Indian subjects. As Joseph Pugliese, author of Biometrics: Bodies, Technologies, Biopolitics, puts it, “the technology of fingerprint biometrics was developed in order to construct a colonial system of identification and surveillance of subject populations in the face of British administrators who ‘could not tell one Indian from another.’ ” As Btihaj Ajana points out in Governing Through Biometrics: The Biopolitics of Identity, the technology that emerged out of that effort was not successful, and it fell to Francis Galton, the originator of eugenics, to develop the first workable fingerprinting system.

Ajana notes that “anthropometry and fingerprinting were enlisted not only to identify individuals but also to ‘diagnose’ disease and criminal propensity, define markers of heredity and correlate physical patterns with race, ethnicity and so on.” Every metric is also a graphic, a point of contact in which the body is reorganized according to institutional norms and demands. In Galton’s case, the fingerprint’s mark doubled as a tool to categorize the supposed gradations of human beings’ humanness. Fingerprints became agents in an ongoing social project of determining what sorts of bodies still registered as “beings of an inferior order” with “no rights which the white man was bound to respect.”

We can see the process of measurement as inscription at work systematically in older colonial practices, particularly in the technologies of the Middle Passage. In her book Laboring Women, historian Jennifer Morgan details how early European slavers used the shape and size of women’s breasts as a key metric to determine which peoples were fit for enslavement or for “civilization.” And drawing the connection between the present and the past, sociologist Simone Browne points to the Book of Negroes, probably the first systematic biometric document. When the British colonial army fled from New York in 1783, many black people fled with them. To speed adjudication in the event that a white person came to claim someone, officers recorded the physical characteristics of the black people leaving New York.

Here, again, the particular contours of the body work to reproduce a social structure that depends on the “scientific” principle that there is a difference in kind between peoples and that this difference is written on the skin. Reading this together with the work of the brand, a tool designed to produce biometric markers, Browne shows that inscription and description have always been twinned on the black body as a fundamental apparatus for maintaining white supremacy. Hortense Spillers takes this point further and argues that the ideographic violence of white supremacy is the basis for the totality of the American “symbolic order,” the entire system of semantics that makes Americans intelligible to one another.

Of course, contemporary techniques of biometric inscription and description do not correspond directly to these historical practices. After all, biometrics manages things as mundane as unlocking a phone. But here, too, is a transfiguration of a body into a specific collection of data points primed for extractable value: “I” am transformed into a qualitative assemblage that is gatekept and circulated through productivity matrices designed to capacitate, in this case, the Apple corporation. In “knowing” me, Apple re-presents my body to me as a technical object happily in cahoots with the maintenance and expansion of the corporation. And this process has only become more literal over time, as Apple showed in its recent product launch event, in which it promoted its new Watch series by touting its real-time biometric monitoring capabilities as a self-improvement bonus. It comes with a digital coach! But like phrenology before it, this hinges “self-help” on dissolving the distinction between bodily integrity and surveilled commodity for the aspirational class of consumers Apple targets.

The terms and conditions have changed in the contemporary blending of body and digitality, but as with physiognomy and phrenology, the body remains the gateway to the soul. And it is a “soul” organized by the effective extraction of value from the rudiments of flesh.

Biometrics has never been merely about identifying people; it has been about identifying people in particular institutional contexts for the specific purpose of expediency or bureaucratic lubrication. That is, biometric techniques are conceived not as abstract, objective measures but as tools tailored to specific institutional purposes.

This means their applications can feel intuitively convincing, as when a fingerprint definitively identifies a murderer in a movie courtroom scene. But in practice, it means these technologies have never been neutral; they have always been fully integrated with the aims of the agencies that actively work to produce, reproduce, and stabilize regimes of dispossession and violence. They are weapons built for hunting.

This is particularly true in the case of policing and criminal trials. Historically, biometric techniques were slow, specialized, expensive, and bound to tightly controlled laboratory conditions. This made them impractical to use in the field, where conditions are unstable, portability is crucial, and speed is of the essence. Instead, they were largely deployed as forensic evidence during the adjudication process. Prosecutors have tended to overstate the accuracy of biometric evidence (especially DNA evidence), in spite of many documented cases of laboratory errors, false positives, and contamination. Other forensic techniques, like bite-mark analysis, have been found, by and large, to be junk science.

But these findings have not stopped the adoption of biometric techniques. They have instead fueled demand for “improving” them, further automating and mathematizing the techniques as a more robust and reliable alternative to relying on lab specialists. This has accelerated the digitalization of forensic matching and the growing reliance on “multimodal” biometrics: identification systems that depend on more than one type of identifier (for instance, facial recognition and fingerprints) and that can combine physiological and behavioral techniques (for instance, iris recognition and voice recognition). More research has also been devoted to “soft” biometrics, ambiguous traits that are not concretely measurable, such as one’s gait, voice, or nonconscious bodily movements (called “behavior” in the industry).

Historically, the distinction between “hard” biometrics (measurable and tracked to particular individuals, like fingerprints and faces) and “soft” ones was clear. But contemporary machine learning has essentially eliminated this distinction, rendering “soft” biometric markers into quantifiable identifiers. This is normalizing their use and distribution through social space. In other words, the turn toward digitization and the consequent changes in funding patterns are why gait matching software is integrated into cameras throughout New York City. Remote fingerprint detection and facial recognition have become increasingly common tools in police officers’ kits. Officers use phones and devices with integrated biometric apps in mundane interactions with the public, like traffic stops.

This has moved biometric analysis out of the realm of the forensic and the judicial and into the zone of policing and enforcement. Contemporary police forces increasingly have access to biometric databases that house an array of identifying information, including facial recognition, fingerprint identification, voice recognition, gait matching, and more. Companies like Morpho market devices that allow officers to match fingerprints remotely, in real time, to criminal histories, outstanding warrants and associated files in facial-recognition databases. Or, as the Intercept recently reported, IBM worked directly with the NYPD for almost a decade to develop object-recognition camera technology that allows users to search for people by race and ethnicity, among other identifiers. It’s on the market as Intelligent Video Analytics 2.0, and it’s optimized for use with body cameras.

The information that populates these databases is often collected without the knowledge or consent of the public, sometimes automatically or passively through generalized surveillance. The mass registration of biometric data into open databases creates what the Georgetown Law Center on Privacy and Technology calls a “perpetual lineup,” which generalizes legal precarity. It’s the realization of Bertillon’s apparent dream: an opaque, automated police cataloging system to be used mainly against socially marginalized populations.

Biometric companies also enfold the forensic laboratory into the algorithm. In the past, police and prosecutors had to take their forensic evidence into laboratories. There, trained technicians would bring professionalization and specialization to bear on expertly “reading” the truths of the biological evidence. They would then translate that information back into evidence for police and the courts. But automation has disrupted that space of verification and validation. Information captured by cameras and scanners is instead digitally broken down, analyzed, and evaluated by algorithms. This distributes “the lab” throughout social space and effectively credentials non-specialists with “expert” knowledge simply through their access to these technologies. A police officer who runs a remote fingerprint scan during a traffic stop and flags a facial match, criminal history, and outstanding warrant report is effectively conducting an automated forensic investigation.

This swaps one form of occulted knowledge (the lab technician) for another (the app). Companies that sell biometric analysis products tend to hide their algorithms behind trade secrets and patent protections, which has in some cases made it near impossible for defendants to challenge the biometric evidence that ties them to crime scenes or investigations.

And that, of course, is the point. Getting the tech “right,” which is to say eliminating “bias” from tools like facial recognition, will not rearrange the structural relationships between race, class, sex, and “crime” that plague the American criminal justice system. In fact, it presents the perverse condition in which “good” tech forecloses critique and reform because it normalizes the ways police and courts in particular go about their work in what David Theo Goldberg theorizes as the “racial state”: a state that functions only as long as it generates, rationalizes, and bureaucratizes racial difference and then modulates life chances accordingly.

The biometric encounter at the fingerprint scanner integrates flesh into the order-maintenance mission of the carceral state, compelling its active collaboration in the reproduction of structures of extraction and inequality. It is a moment of marking in which the context of contact comes to stand for the “truth” of the body. By incorporating a body into a surveilling apparatus, the fingerprint scanner marks flesh as corporeality manifest on the “wrong side of the law” and patterns it in kind. As the biometric databanks of the racial state expand, so too do the somatic patterns of oppression translate into a digital, searchable, analyzable archive of “natural” criminality. If a body is out of step racially, or sexually, or ably, it flags. What side is the right side of the law?

Joseph Pugliese, in an article discussing the consistent failure of biometric technologies to recognize nonwhite bodies, argues that such moments of “Failure to Enroll” (FTE) show the degree to which biometric technics operate from a position of “infrastructural whiteness and the racialized zero degree of nonrepresentation.” For Pugliese, nonwhite bodies go unrecognized by biometric technologies not because of technical problems but because of the centrality of whiteness to the state and social milieu in which biometric technologies operate. Drawing on Shoshana Magnet, we might argue that this should be understood not as failure but as the technology doing its job, recognizing those bodies that are socially presumed to be full and representable while literally making racialized bodies invisible.

This critique is often articulated in this kind of framework: In recognition of our humanity, to increase transparency, and to ensure accountability, these technologies need to stop using whiteness as a baseline and fairly recognize us all. Fair enough: If the state is going to surveil us, it should at least be able to surveil all of us equally well. But tracking back across the history of biometrics, it does not seem that the interests that reproduce racialized FTE are denying humanity to certain groups. Rather, they are working in a tradition in which the nature of differential humanity is up for debate and in which the value of bodily identification scales directly with the degree to which “individuals” are believed to be known through the body. It is not so much that the occasional excision of nonwhite, non-cisgendered bodies from the normative function of the biometric surround represents a failure to recognize their humanity. It is rather a failure to make sense of that humanity, a humanity that has historically been framed as existing outside the bounds of knowability.

In place of the question that supposedly drives biometrics (who are you?), measurement presents a statement at the moment of recognition: This is what you are. But in the case of the Failure to Enroll, a failure that disproportionately impacts queer people and people of color, biometrics clarifies its relationship to the unrecognized body presented before it. To tweak Paisley Currah and Lisa Jean Moore’s discussion of the history of sex designation in New York City’s birth certificates: Rather than the formulation “this is who you are,” the blinded apparatus issues an injunction: We do not know what you are.

From this point unfolds the world-building of metric governance. “We do not know what you are” takes an identity unrecognizable to the infrastructurally white social apparatus and transforms it into an occasion for surveillance and “risk” mitigation. “We do not know what you are” becomes a mission: We must know what you are. This sets the stage for the extractive and open-ended demand placed on the body-that-fails-to-register to surrender its qualities to the interrogative apparatus. The concept of race transforms biometric systems’ failures into successes, because the point of race and of the heterosexual matrix is precisely to produce recognition failure as a point of entry for control.

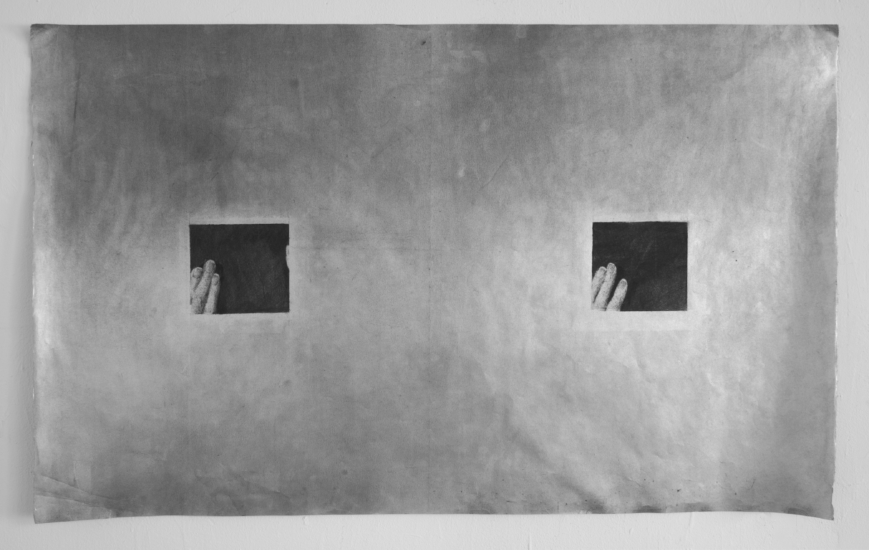

Biometric accountability, accuracy, and fairness matter. But, as artists Zach Blas and Martine Syms, the organizer and technologist Nabil Hassein, and a great many others have argued, it is not at all clear that biometric accountability, accuracy, and fairness are mechanisms for achieving justice, or even a baseline common humanity. That is not what biometric systems do. Instead, they reflect the institutional demands of the entities that employ them, demands that are always intended to sort people into those with access and those without, those secured by the state and those made insecure by it, those capacitated and those incapacitated by a credit score. To survive the inquisitional drives of state and capital, a great many bodies are always made to hide.

It is worth remembering that phrenology was never explicitly a project of explaining racial difference and justifying racial rule. It was primarily considered a means for the self-improvement of the white middle and working classes. Phrenology clubs throughout the United States would seek to identify physically expressed shortcomings and would set goals and work to improve those faculties. Self-measuring the body was a useful means of tracking one’s progress and structuring one’s life goals. Today we might categorize it as something like “wellness” or “self-care.” But of course, the entire structure of phrenology’s utility to the aspiring classes was premised on the systematic exclusion of those populations that were and would continue to be denied participation in American civil society. Aspiration was built on the security of hierarchy. Perhaps technology excised whole groups of people from the human family, but so much the more opportunity for those who could strive and gain. And at any rate, better to be sure of one’s place.

And this, then, is the point. It is convenient to point to the dangerous rise of the newest iteration of the ancient project to mathematize the body and its capacities and call it a new set of clothes for a debunked way of thought. But the reality is that the structure of our biometric surround is always already doing the work that we would prevent the new phrenology from accomplishing. Biometrics has always been in collusion with racial-sexual hierarchies of cisgendered heterosexist white supremacy because it constitutes a technique for making some bodies a problem, a question, a mystery, and a pretense for control. And at the same time, it allows other bodies to exist with a greater sense of security and convenience, confident, as Lombroso hoped they would be, that they’re safeguarded from the imagined degenerate and atavistic masses that threaten and surround them. As such, bad studies like those we started with are a scapegoat for biometrics, which performs the work of phrenology regardless.