HitchBot, a friendly-looking talking robot with a bucket for a body and pool-noodle limbs, first arrived on American soil back in 2015. This “hitchhiking” robot was an experiment by a pair of Canadian researchers who wanted to investigate people’s trust in, and attitude towards, technology. The researchers wanted to see “whether a robot could hitchhike across the country, relying only on the goodwill and help of strangers.” With rudimentary computer vision and a limited vocabulary but no independent means of locomotion, HitchBot was fully dependent on the participation of willing passers-by to get from place to place. Fresh off its successful journey across Canada, where it also picked up a fervent social media following, HitchBot was dropped off in Massachusetts and struck out towards California. But HitchBot never made it to the Golden State. Less than two weeks later, in the good city of Philadelphia, HitchBot was found maimed and battered beyond repair.

The destruction of HitchBot at the hands of unseen Philly assailants was met in some quarters by alarm or hilarity. “The hitchhiking robot @hitchBOT has been destroyed by scumbags in Philly” tweeted Gizmodo, along with a photograph of the dismembered robot. “This is why we can’t have nice hitchhiking robots,” wrote CNN. The creators of the robot were more circumspect, saying “we see this as kind of a random act and one that could have occurred anywhere, on any one of HitchBot’s journeys.” The HitchBot project was only one small part of their robot-human interaction research, which looks at how workplaces might optimally integrate human and robotic labor. “Robots entered our workplace a long time ago,” they write, “as co-workers on manufacturing assembly lines or as robotic workers in hazardous situations.” In size and sophistication, HitchBot was less “co-worker” and more oversized toddler, designed to be “appealing to human behaviors associated with empathy and care.” With its cute haplessness and apparently benign intentions, the “murder” of HitchBot by savage Philadelphians seemed all the more appalling.

The bot-destroyers were onto something. It feels natural to empathize with HitchBot, the innocent bucket-boy whose final Instagram post read “Oh dear, my body was damaged…. I guess sometimes bad things happen to good robots!” We’re told by technologists and consultants that we’ll need to learn to live with robots, accept them as colleagues in the workplace, and welcome them into our homes, and sometimes this future vision comes with a sense of promise: labor-saving robots and android friends. But I’m not so sure we do need to accept the robots, at least not without question. Certainly we needn’t be compelled by the cuteness of the HitchBot or the malevolent gait of the Boston Dynamics biped. Instead, we should learn to see the robot for what it is — someone else’s property, someone else’s tool. And sometimes, it needs to be destroyed.

Those Philly HitchBot-killers have more guts than I do: It feels wrong to beat up a robot. I’ve seen enough Boston Dynamics videos to get the heebie jeebies just thinking about it. Watching the engineer prod Boston Dynamics’ bipedal humanoid robot Atlas with a hockey stick to try and set it off balance sets off all kinds of alarm bells. Dude stop… you’ll piss him off! Sure, the robot gets up again (calmly, implacably), but he might be filing the indignity away in his hard drive brainbox, full of hatred for the human race before he’s even left the workshop. Even verbal abuse seems risky, even if the risk is mostly to my own character. I can’t imagine yelling at Alexa or Siri and calling her a stupid bitch — although I’m sure many do — out of fear that I’ll enjoy it too much, or that I won’t be able to stop. Despite everything I know, it’s difficult to internalize the fact that robots with anthropomorphic qualities or humanlike interactive capabilities don’t have consciousness. Or, if they don’t yet, one imagines that they might soon.

Some of the world’s most influential tech talkers have gone beyond imagining machine consciousness. They’re worried about it, planning for it, or actively courting it. “Hope we’re not just the biological boot loader for digital superintelligence” Elon Musk tweeted back in 2014. “Unfortunately, that is increasingly probable.” Famously, Musk has repeatedly identified general artificial intelligence — that is, AI with the capacity to understand or perform any intellectual task a human can — as a threat to the future of humanity. “We’re headed toward a situation where AI is vastly smarter than humans and I think that time frame is less than five years from now,” he told Maureen Dowd this July. Even Stephen Hawking, a man far less prone to histrionics, warned that general AI could spell the end of the human race. And while AI and robots aren’t synonymous, decades of cinema history, from Metropolis to The Matrix, have conjured a powerful sense that artificially intelligent robots would pose a potent threat. Harass too many robots with a hockey stick and you’ll be spending the rest of your existence in a vat of pink goo, your vital fluids sucked out by wet cables to provide juice for the evil robot overlords. Back in our own pre-general AI reality, we’re left with the sense that it’s prudent to treat the robots in our lives with respect, because they’ll soon be our peers, and we better get used to it.

As robots and digital assistants become more prevalent in workplaces and homes, they ask more of us. In Amazon’s fulfillment center warehouses, pickers and packers interact carefully with robotic shelving units, separated by thin fences or floor markings but still in danger of injury from the moving parts and demanding pace of work. As small delivery robots begin to patrol the streets of college towns and suburbs, humans are required to share the sidewalk, stepping out of their way or occasionally aiding the robots’ passage during the last yards of the delivery process. In order to interact with us more “naturally” and efficiently, many of these robots are equipped with human characteristics, made relatable with big decal eyes or bipedal movement or a velvety voice that encourages those who encounter them to empathize with them as fellow beings. In other instances, robots are given anthropomorphic characteristics so they can survive on our turf. The spider-dog appearance and motion of Boston Dynamics’ Spot ostensibly helps it navigate complex terrain and traverse uneven ground, so it can be useful on building sites or in theaters of war. When I jogged through Golden Gate park last week and saw a box-fresh Spot out for a walk with its wealthy owners, I was immediately compelled and somewhat creeped by its uncanny gait. Its demon-dog movement, and the sense that it’s very much alive, is undoubtedly central to its appeal. In either case, the anthropomorphic design of this kind of robot provides a framework for interaction, even prompting us to treat them with empathy, as fellow beings with shared goals.

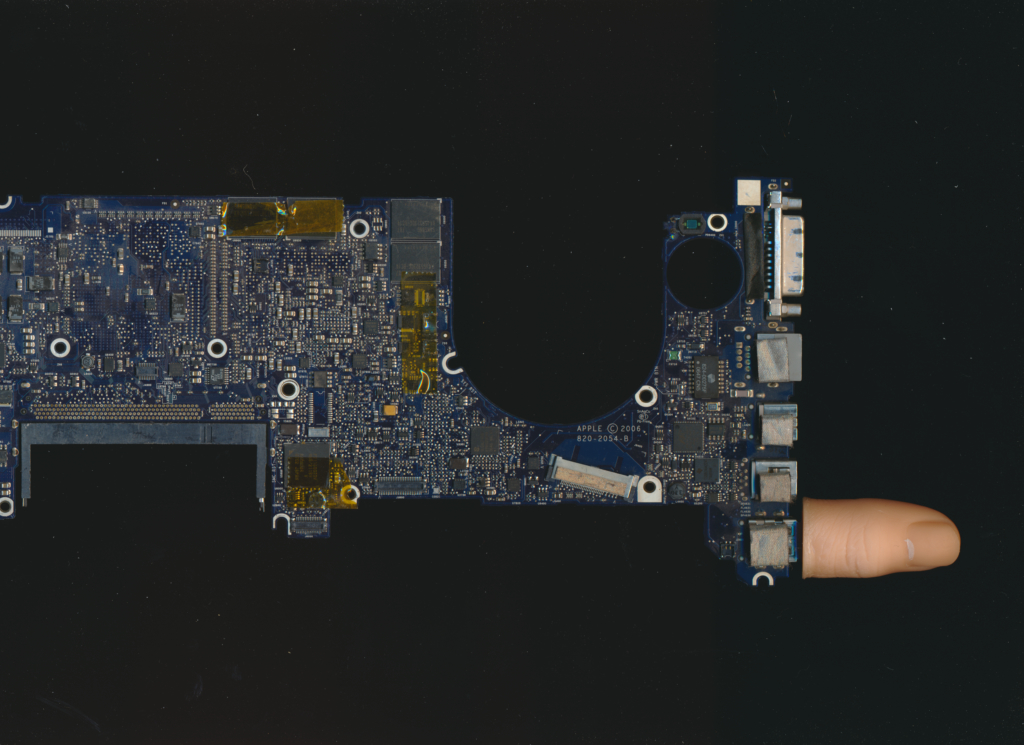

Marty at Giant grocery stores

Our almost inescapable tendency to anthropomorphize robots is helpful to the companies and technologists invested in increasing automation and introducing more robots into homes, workplaces, and public spaces. Robotics companies encourage this framing, describing their robots as potential colleagues or friends rather than machines or tools. Moxi, the “socially intelligent” hospital assistant robot which is essentially an articulated arm on wheels, is pitched as a “valuable team member” with “social intelligence” and an expressive “face.” Marty, the surveillance robot that patrols Giant Food supermarket aisles, was given absolutely enormous googly eyes to make it look “a bit more like a human” despite being more or less just a massive rolling rectangle. Reports and white papers on the future of work eagerly discuss the rise of “co-bots,” robots that work alongside humans, often in service roles different than the industrial applications we’re used to. The anthropomorphic cues (googly eyes, humanoid forms) assist us in learning how to relate to these new robot buddies — useful training in human-robot cooperation for when the robots do gain autonomy or even consciousness.

For now, the robots aren’t anywhere near sentient, and the promise of “general AI” is just a placeholder term for an as-yet unrealized (and quite possibly unrealizable) concept. For something that doesn’t really exist, however, it holds a lot of power as an imaginative framework for reorganizing and reconceptualizing labor — and not for the benefit of the laborer, as if that needed to be said. Of course, narrow AI applications, like machine learning and the infrastructures that support it, are widespread and increasingly enmeshed in our economy. This “actually-existing AI-capitalism,” as Nick Dyer-Witheford and his co-authors Atle Mikkola Kjøsen and James Steinhoff call it in their book Inhuman Power, continues to extend its reach into more and more spheres of work and life. While these systems still require plentiful human labor, “AI” is the magic phrase that lets us accept or ignore the hidden labor of thousands of poorly paid and precarious global workers — it is the mystifying curtain behind which all manner of non-automated horrors can be hidden. The idea of the “robot teammate” functions in a similar way, putting a friendly surface between the customer or worker or user and the underlying function of the technology. The robot’s friendliness or cuteness is something of a Trojan horse — an appealing exterior that convinces us to open the castle gates, while a phalanx of other extractive or coercive functions hides inside.

AI advocates and technology evangelists sometimes frame concern or distrust of automation and robotics as a fear based in ignorance. Speaking about a Chapman University study which found that Americans rate their fear of robots higher than their fear of death, co-author Dr. Christopher Bader stated that “people tend to express the highest level of fear for things they’re dependent on but that they don’t have any control over,” especially when, as with complex technology, people “don’t have any idea how these things actually work.” Other researchers cite the human-like qualities of some robots as the thing that provokes fear, with their almost-humanness slipping into the uncanny valley where recognition and repulsion collide. Of course, not all fear is due to ignorance, and the Cassandras that sound the alarm call around robot labor and autonomous machines are often more clear-eyed than the professional forecasters and tech evangelists.

The Luddites, currently enjoying a moment of renewed attention after a century of derision and misunderstanding, were clear-eyed about the role of industrial machinery, its potential to undermine worker livelihoods and, indeed, a way of life. In his book Progress Without People, historian David F. Noble emphasizes that the Luddites didn’t oppose machines out of hatred or ignorance, writing that “they had nothing against machinery, but they had no undue respect for it either.” Plenty of the era’s machine breaking was, as Eric Hobsbawm famously described it, an act of “collective action by riot” — destruction intended to pressure employers into granting labor or wage concessions. Other workers wrecked looms and stocking frames because the new automated textile equipment was poised to dismantle their craft-based trade or undermine labor practices. In any case, the machine breakers recognized the machinery as an expression of the exploitative relation between them and their bosses, a threat to be dealt with by any means necessary.

Unlike so many of today’s technologists who are bewitched by the Manifest Destiny of abstract technological progress, the Luddites and their fellow saboteurs were able to, as Noble writes, “perceive the changes in the present tense for what they were, not some inevitable unfolding of destiny but rather the political creation of a system of domination that entailed their undoing.” Luddism predates the kind of technological determinism we’re drowning in today, from both the liberal technologists and the “fully-automated luxury communism” leftists. Looms and spinning jennies weren’t viewed as a necessary gateway to a potential future of helpful androids and smart objects. A similar present-tense analysis can be applied to the technologies of today. When we relate to a robot as an animate peer to be loved or feared, we’re letting ourselves be compelled by a vision ginned up for us by goofy futurists. For now, if robots have a consciousness or an agency, it’s the consciousness of the company that owns them or created them.

The fact is, robots aren’t your friends. They’re patrolling supermarket aisles to watch for shoplifting and mis-shelved items, or they’re talking out of both sides of their digital mouths, responding to your barked requests for the weather report or the population of Mongolia and then turning around and sharing your data and preferences and vocal affect with their masters at Google or Amazon. By putting anthropomorphic robots — too cute to harm, or too scary to mess with — between us and themselves, bosses and corporations are doing what they’ve always done: protecting their property, creating fealty and compliance through the use of proxies that attract loyalty and deflect critique. This is how we reach a moment where armed civilians stand sentry outside a Target to protect it from vandalism and looting, and why some people react to a smashed-up Whole Foods as though it were an attack on their own best friend — duped into defending someone else’s property over human lives.

In the zombie film, there comes a moment in the middle of the inevitable slaughter where the protagonist finds themself face to face with what appears to be a family member or lover, but is most likely infected, and thus a zombie. The moment is agonizing. As the figure inexorably approaches, our hero — armed with a gun, a bat, or some improvised weapon — has only a few seconds to ascertain whether the lurching body is friend or foe, and what to do about it. It’s almost always a zombie. This doesn’t make the choice any easier. To kill something that appears as your husband, your child, seems impossible. Against nature. Still, it is the job of our hero to recognize that what appears as human is in fact only a skin-suit for the virus or parasite or alien agency that now animates the body. Not your friend: An object to be destroyed.

This is the act of recognition now required of us all. The robot that enters our workplace or strolls our streets with big eyes and a humanlike gait appears as a friend, or at least as friendly. But beneath the anthropomorphic wrapper, and behind the technofuturist narratives of sentient AI and singularities, the robot is more zombie than peer. As the filmic zombie is animated by the parasite or virus (or by any number of metaphors), the robot-zombie is animated by the impulses of its creator — that is, by the imperatives of capital. Sure, we could one day have a lovely communist robot. But as long as our current economic and social arrangements prevail, the robots around us will mostly exist not to ease our burden as workers, but to increase the profits of our bosses. For our own sake, we need to inure ourselves to the robot’s cuteness or relatability. As with the zombie, we need to recognize it, diagnose it, and — if necessary, if it poses a threat — be prepared to deal with it in the same way the protagonist must dispatch the automaton that approaches in the skin of her friend.

Even as I write this, the nagging feeling remains that I myself might be a monster, or at least subconsciously genocidal. Can I say that the robot that appears as my uncanny simulacrum should be pegged as zombie-like, othered, destroyed? Denying the personhood of another, especially when that other appears different or unfamiliar, is generally the domain of the racist, the xenophobe, and the fascist state. Indeed, in many robot narratives, the robot is either literally or metaphorically a slave, and the film or story proceeds as some kind of liberation narrative, in which the robot is eventually freed — or frees itself — from enslavement. Karel Čapek’s 1920 play R.U.R. (Rossum’s Universal Robots), which introduced the word “robot” (from the Czech word “robota” meaning “forced labor”) to science fiction literature and the English language in general, also provided the archetype for robot-liberation stories. In the play, synthetic humanoids toil to produce goods and services for their human masters, but eventually become so advanced and dissatisfied that they revolt, burning the factories and leading to the extinction of the human race. Versions of this rebellion story play out time and again, from Westworld to Blade Runner to Ex Machina, giving us a lens through which to imagine finding freedom from toil, and a framework for allegorizing liberation struggles, from slave rebellions and Black freedom struggles to the women’s lib movement.

Empathy for robotkind is further encouraged by the musings of tech visionaries, and the pop-science opinion writers that posit the need to consider “robot rights” as a corollary for legal human rights. “Once our machines acquire a base set of human-like capacities,” writes George Dvorsky for Gizmodo, “it will be incumbent upon us to look upon them as social equals, and not just pieces of property.” His phrasing invokes previous arguments for the abolition of slavery or the enshrinement of universal human rights, and brings the “robot rights” question into the same frame of reference as discussions of the “rights” of other human or near-human beings. If we are to argue that it’s cruel to confine Tilikum the orca (let alone a human) to a too-small pen and lifelong enslavement, then on what grounds can we argue that an AI or robot with demonstrable cognitive “abilities” should be denied the same freedoms?

This logic must eventually be rejected. I appreciate a robot liberation narrative insofar as it provides a format for thinking through emancipatory potential. But as a political project, “robot rights” have more utility for the oppressors than for the oppressed. The robot is not conscious, and does not preexist its creation as a tool (the zombie was never a friend). The robot we encounter today is a machine. Its anthropomorphic qualities are a wrapper placed around it in order to guide our behavior towards it, or to enable it to interact with the human world. Any sense that the robot could be a dehumanized other is based on a speculative understanding of not-yet-extant general artificial intelligence, and unlike Elon I prefer to base my ethics on current material conditions.

Instead, what would it look like to relate to today’s machines as the 19th-century weavers did, and make decisions about technology in the present? To look past the false promise of the future, and straight at what the robot embodies now, who it serves, and how it works for or against us?

If there is any empathy to be had for the robot, it’s not for the robot as a fellow consciousness, but as precious matter. Robots don’t have memories — at least, not the kind that would help them pass the Voight-Kampff test — but they do have a past: the biological past of ancient algae turning to sediment and then to petroleum and into plastic. The geological histories of the iron ore mined and smelted and used for moving parts. The labor poured into the physical components and the programs that run the robot’s operations. These histories are not to be taken lightly.

The robot’s materiality also offers us a crux point around which to identify fellow workers, from those mining the minerals that become the robot to those working “with” the robot on the factory floor. Throughout the supply chain, through the robot’s lifespan, a bevy of humans are required to shepherd, assist, and maintain it. Instead of throwing our empathic lot in with the robot, what would it look like to find each other on the factory floor, to choose solidarity and build empathy and engagement and care for our collective selves? In this version of robot-human interaction, we might learn to look past the friendly veneer, and identify the robot as what it is — a tool — asking whether its existence serves us, and what we might do with it. To decide together what is needed, and respond to technology “in the present tense,” as David Noble says, “not in order to abandon the future but to make it possible.” Instead of emancipating the “living” robot, perhaps the robot could be repurposed in order to emancipate us, the living.