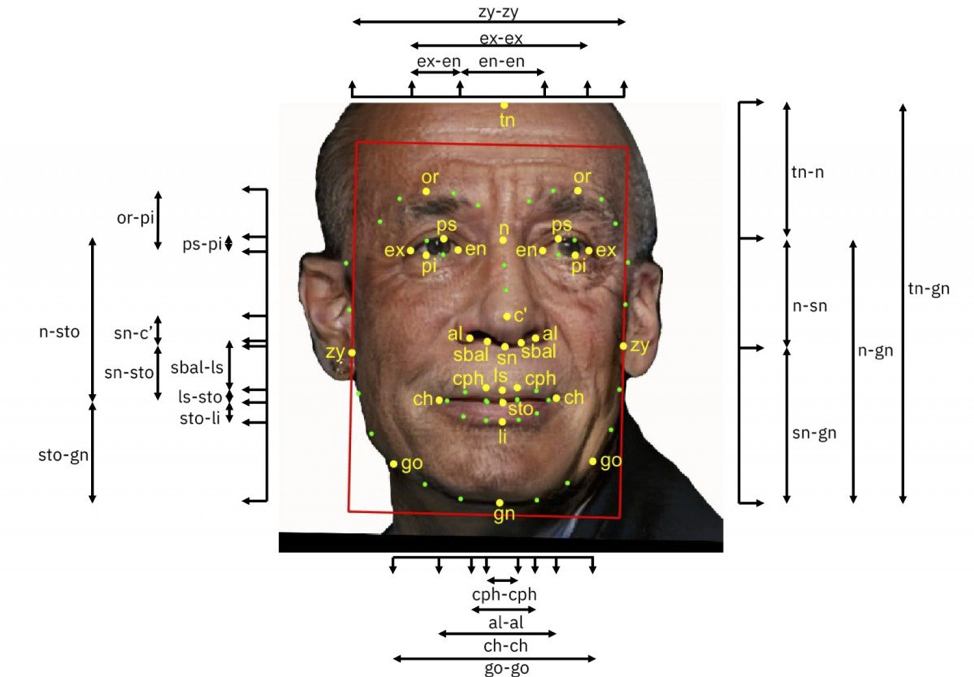

A few recent studies on facial detection and recognition systems, described in this MIT Technology Review report, point to their improved accuracy across race and gender lines and detail their enhanced “measures of facial diversity.” This somewhat terrifying phrenological image illustrates that concept in action:

The newfound accuracy of these systems was celebrated in some of the press coverage; it amounts to an authorization that we can go on pretending that face scanning can be an impartial and failsafe way of attributing identity. Improving face scanning to make it appear more inclusive and trustworthy mainly strengthens arguments made by those who want to impose blanket surveillance everywhere. But as the Technology Review article notes, “Even the fairest and most accurate systems can still be used to infringe on people’s civil liberties.” Indeed, the more fair and accurate the face scanning systems are perceived to be, the more likely those civil liberties will be abrogated. In a trial of a scanning system in London, the police reportedly prevented people from covering their faces and fined one man for protesting it.

The problem with face scanning has never been that it doesn’t work well; it is that it can conceivably work at all. The premise itself is the problem: It’s based on the idea of pre-emptive surveillance of everyone everywhere, and imposing a data trail and a set of analytical conclusions on them wherever they happen to be spotted. Facial recognition technology stems from an unethical approach, and only becomes more unethical when its accuracy is improved. This legitimizes its further implementation and helps build out a new infrastructure of discrimination to complement the one that already exists.

Defenders of these systems sometimes claim that making them universal makes them impartial, as if they were only going to be put to use by other machines capable of impartiality and not by racist, sexist humans in a society structured to its core by historical racism and sexism. The data that facial scanning systems draw from are shaped by the biased presuppositions that govern how they were collected. But even if facial recognition were completely accurate and “neutral,” its data would feed into other biased systems (like those of law enforcement, as detailed in this report on “The Perpetual Line-Up”) and drive their biased outputs. As researcher Jake Goldenfein argues in this paper, computer vision (that is, linking surveillance to databases and algorithmic processing) aspires not to identify and represent reality but to construct and predict it: “Computer vision (and other biometric) techniques however, use extremely granular measurements of ‘the real world’ and increasingly deploy a logic of exposing or revealing the truth of reality and people within it.” Regardless of whether these methods are initially “accurate,” they are effectual as a way of seeing and constituting human subjects. At stake in computer vision is “the proliferation of computational empiricism, the difference between visual and statistical knowledge, and the idea that data science can know people better than they know themselves.”

Though it may seem like face scanning is simply a matter of knowing people by their faces, it facilitates what Goldenfein describes as a “shift from qualitative visual to quantitative statistical ways of knowing people”: Facial recognition systems don’t see or identify us so much as measure us, assimilating us into data sets that can be processed dynamically to produce a limitless number of synthetic “insights” about reality that can then be operationalized.

The perceived ubiquity of facial scanning systems can also give them a panoptic effect: Eileen Donahoe, of Stanford University’s Center for Democracy, Development, and the Rule of Law, quoted here, makes the point that “facial recognition technology will take this loss of privacy and liberty to a new level by taking choice about utilization of the technology away from citizens.” Since face scanning can readily be deployed without consent of the scanned, it becomes prudent to assume one is always being scanned, a procedure that has been extensively normalized and even glamorized by the biometric scanners built into phones.

When Apple introduced its Face ID biometric authentication protocol in late 2017 with the iPhone X, many commentators praised the company for its innovative new security feature. Gee whiz, it unlocks my phone so fast! It’s so convenient! What will they think of next? Some were excited that they could just look at their phone and get notifications, messages no one else could see. Face ID let you exchange knowing, meaningful glances with your phone, which recognized you not in a cold, detached, retinal-scanner sort of way, but by unobtrusively disclosing your secrets to you.

At the time, some expressed concerns about how well Face ID would work, but few talked about whether or not we should want it to. Instead there was jubilation at another level of friction between user and screen being effaced. As Wired’s reviewer put it: “A phone that never forces you to think about the object itself, but disappears quietly while you pay attention to whatever you’re doing.” Face ID, from that perspective, isn’t an invasion or an imposition, but a purification of the experience, a way to merge with the device. You don’t even think about the routinizing of biometric authentication, how it engrains scanning as a fundamental, virtually invisible component of everyday life, a normal and reasonable prerequisite for access. If we can save a second or two, isn’t it worth getting accustomed to biometrically authenticating ourselves repeatedly, every day, and making our bodies the indelible index of our permissions and exclusions?

As convenient as it may be to turn your face into a secure password, it still transforms the face into something fundamentally static, a solvable cipher. As a “mathematical representation of your face,” as the Apple white paper described it, it represents and authenticates your identity without expressing it. The face is reductively instrumentalized, a key that fits only certain sorts of locks. The possibility that your face could be dynamic, could be used to express a range of emotions and intentions is subordinated to its function as a barcode. In fact, Apple’s system records the face in its multiplicity and tries to reduce it to a single map. You have just one look; your face can communicate only one “true” tautological thing: you are you.

The technology of Face ID also permitted the capture and codification of facial expressions, which paves the way toward increased efforts at sentiment tracking, ascribing emotional content to users based on how they look. This TechCrunch piece from 2017 pointed out that the iPhone X’s real-time expression-tracking capabilities could be used for “hyper sensitive expression-targeted advertising and thus even more granular user profiling for ads/marketing purposes.” But the marketing applications are only the tip of the problem. AI Now’s 2018 report, in pointing out the potential dangers of facial recognition and the need for stringent regulation, singled out “affect recognition” for special opprobrium:

Affect recognition is a subclass of facial recognition that claims to detect things such as personality, inner feelings, mental health, and ‘worker engagement’ based on images or video of faces. These claims are not backed by robust scientific evidence, and are being applied in unethical and irresponsible ways that often recall the pseudosciences of phrenology and physiognomy. Linking affect recognition to hiring, access to insurance, education, and policing creates deeply concerning risks, at both an individual and societal level.

In an essay from August 2018, Woodrow Hartzog and Evan Selinger proposed an “outright ban” on facial recognition technology, identifying it as “a menace disguised as a gift,” and an “irresistible tool for oppression that’s perfectly suited for governments to display unprecedented authoritarian control.” They describe surveillance through facial recognition as “intrinsically oppressive,” which suggests that even if you enjoy the convenience of unlocking your phone with your face, you are still being oppressed by that act and still participating in reinforcing the values implicit in it: reducing people to their biometric traits, presupposing the right to record people as data, abolishing any ability to be anonymous in every imaginable context, eliminating what Hartzog and Selinger call “practical obscurity,” endorsing prejudicial assessments based on superficial characteristics, and more.

Ironically enough, a San Francisco lawmaker has introduced legislation to ban facial recognition from city use, exempting its many tech-industry-affiliated citizens from being subject to the sort of surveillance they are happy to see shipped around the world. But this sort of ban doesn’t apply to the more personalized uses that many opt in to, or to the many potential commercial instantiations of facial recognition. It also seems to be grounded in the same sort of logic that leads to banning or criminalizing fake accounts on social media platforms (as in this New York State settlement regarding the sale of “fake followers”). Trying to fix fake accounts on social media seems analogous to trying to make computer vision more accurate. These are systems that ascribe identity externally in an effort to contain and control populations and dictate their future behavior. Improving their “accuracy” or “authenticity” means chiefly that their ability to control us has been improved.

Decrying “fake accounts” implicitly claims that a social media account ordinarily is “true” — a direct and equivalent representation of a person, much like their face is taken to be in a facial recognition system. But these accounts are not even in our control; they are statistical constructions made about us drawing on data inputs we don’t fully know about. A social media account prescribes our identity and makes us own it; castigating fake accounts strengthens the idea that we must accept these accounts as real iterations of ourselves. It grants the company the power to verify who is legitimate based on its prerogatives.

So when this New York Times article asks, “Does Facebook Really Know How Many Fake Accounts It Has?” maybe the answer is yes, all of them. All accounts are “fake” because the company overextends the implications of what it means to have a “real” account. Or one can flip the terms of that and say that there is no such thing as a fake account because there is no such thing as a real one. The accounts are not accurate to the humans they are used to represent, but accurate only to the intentions Facebook has for those representations. As far as Facebook is concerned, we are real to the extent that our attention to ads can be measured.

Giving private companies the status of reputation authenticators is akin to giving them control over your face. They get to determine what it signifies about you; they get to choose what information about you is real or not, based on their own modes of accounting and despite how you would prefer to represent yourself. The appeal of these totalizing systems is precisely that they allow others to bypass your input — they allow people to assess you without having to interact with you over any amount of time. These systems project a world in which trust doesn’t have to be built because other people can always be processed instead. Why try to get to know someone on their own terms? That’s just fake news. The whole point of trying to measure someone’s identity or personality is to be able to dictate it to them. And there is no longer any difference between seeing a person and measuring them.

Facial recognition systems and “real” social media accounts make identity a prison; one is assigned a face or a self so that one becomes trapped in it, and in all the underlying ideological assumptions that allowed anyone to see those ascriptions as “accurate.” The self is no longer a category through which one expresses agency or intentionality. There are no journeys of self-discovery that the self directs. You don’t find yourself but learn that you have been there all along, as a Facebook shadow profile, or a set of facial coordinates extracted from CCTV footage. The systems hail us, and our response is a surrender.