A few months ago, I installed a Chrome extension called “Show Facebook Computer Vision Tags.” It does exactly what it says on the tin. Once installed, images on my feed were overlaid with one or many emojied descriptors revealing the “alt” tags that Facebook automatically adds to an image (using a “Deep ConvNet built by Facebook’s FAIR team”) when it is uploaded. This feature, which the company launched in 2016, is meant as a tool for the visually impaired, who often rely on text-to-voice screen-readers. With these tags added, the screen reader will narrate: “Image may contain: two people, smiling, sunglasses, sky, outdoor, water.” The user may not be able to see an image, but they can get an idea of what it contains, whether people are wearing accessories or facial hair, when they’re on stage or playing musical instruments, whether they’re enjoying themselves. The tags, in fact, only note positive emoting: smiling or not.

This seems a remarkably limited subset of linguistic and conditional terms for a platform of Facebook’s ubiquity, especially given its investment in having images go viral. If virality is predicated upon images that inspire extremes of emotional response — the pet that faithfully waits for its dead master; a chemical attack in Syria — wouldn’t the tags follow suit? Despite Facebook’s track record of studying emotional manipulation, its tagging AI seems to presume no Wow, Sad, or Angry — no forced smiles on vacation or imposter syndrome at the lit party.

A white paper on Facebook’s research site explains that these tags — “concepts,” in their parlance — were chosen based on their physical prominence on photos, as well as the algorithm’s ability to accurately recognize them. Out were filtered the concept candidates that carried “disputed definitions” or were “hard to define visually”: gender identification, context-dependent adjectives (“red, young, happy”), or concepts that are too challenging for an algorithm to learn or distinguish, such as a “landmark” from general, non-landmark buildings. But speaking with New York Magazine earlier this year, Adam Geitgey, who developed the Chrome extension, suggested its training has expanded beyond that: “When Facebook launched [alt tags] in April, they could detect 100 keywords, but this kind of system grows as they get more data … A year or two from now they could detect thousands of different things. My testing with this shows they’re well beyond 100 keywords already.” As such, the Chrome extension is less interested in Facebook’s accessibility initiatives, instead aiming to draw attention to the pervasiveness of data mining. “I think a lot of internet users don’t realize the amount of information that is now routinely extracted from photographs,” Geitgey explains on the extension’s page in the Chrome web store, “the goal is simply to make everyone aware.”

As the use of emojis makes plain, the extension addresses sighted users — those who do not use screen readers and are probably unaware, as I was, of this metadata Facebook adds to images. With the extension, a misty panorama taken from the top of the world’s tallest building becomes ☀️ for sky, 🌊 ocean, 🚴 outdoor, 💧 water; a restaurant snap from Athens, meanwhile, is 👥 six people, 😂 people smiling, 🍴 people eating, 🍎 food, and 🏠 indoor. (Sometimes, when there’s no corresponding emoji, it adds an asemic □.) The tags aren’t always completely right, of course; sometimes the algorithms that drive the automatic tagging miss things: recognizing only one person where there are three in a boat in Phuket Province, describing a bare foot as being shod. But there’s something attractive in the tagging’s very prosaic reduction of an image down to its major components, or even its patterning, as with an Alex Dodge painting of an elephant that is identified only as “stripes.” The automatic tagging doesn’t seem integrated with Facebook’s facial recognition feature (“Want to tag yourself?”) but rather allows you to view your life and the lives of your friends as a stranger might, stripped of any familiar names, any emotional context that makes an image more than the sum of its visual parts — resplendent in its utter banality.

Perhaps it’s a legacy of growing up in the UAE, where you can fully expect every click, scroll, or even sneeze in a public space to be recorded, but I’m not bothered to find that according to its algorithmically generated “preferences” page in my profile, Facebook thinks my interests include “protein-protein interaction,” “first epistle to the Thessalonians,” and caviar (I’m a vegetarian); that it considers me both an early technology adopter and a late one.

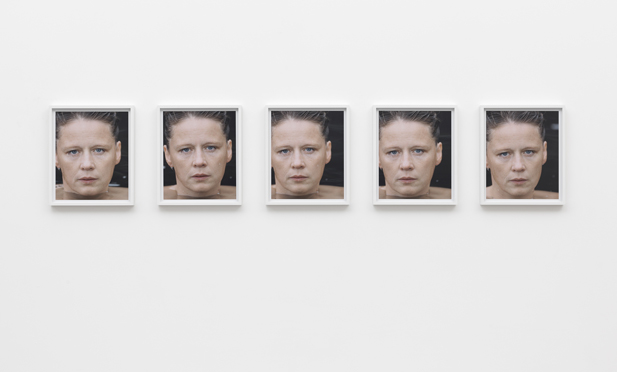

Infinitely more exciting is the transposed comic-book dream of X-ray vision — seeing through the image to what the machine sees. I want to be able to access that invisible layer of machine-readable markup to test my vision against a computer’s. The sentiment is not that different from the desire to see through the eyes of the other that has historically manifested itself in the colonial history of anthropology or in texts like John Howard Griffin’s Black Like Me. The desire to see what they see, be it other people or machines, is a desire to feel what they feel. AI researcher Eliezer Yudkowsky described the feeling of intuition as the way our “cognitive algorithms happen to look from the inside.” An intangibly human gut response is just as socialized (programmed) as anything an algorithm might “feel” on the inside, clinging to its intuitions as well. It should be enough to take the algorithms’ output at face value, the preferences they ascribe to me, or to trust that it is the best entity to relay its own experience. But I’m greedy; I want to know more. What does it see when it looks at me?

The American painter and sculptor Ellsworth Kelly — remembered mainly for his contributions to minimalism, Color Field, and Hard-edge painting — was also a prodigious birdwatcher. “I’ve always been a colorist, I think,” he said in 2013. “I started when I was very young, being a birdwatcher, fascinated by the bird colors.” In the introduction to his monograph, published by Phaidon shortly before his death in 2015, he writes, “I remember vividly the first time I saw a Redstart, a small black bird with a few very bright red marks … I believe my early interest in nature taught me how to ‘see.’”

Vladimir Nabokov, the world’s most famous lepidopterist, classified, described, and named multiple butterfly species, reproducing their anatomy and characteristics in thousands of drawings and letters. “Few things have I known in the way of emotion or appetite, ambition or achievement, that could surpass in richness and strength the excitement of entomological exploration,” he wrote. Tom Bradley suggests that Nabokov suffered from the same “referential mania” as the afflicted son in his story “Signs and Symbols,” imagining that “everything happening around him is a veiled reference to his personality and existence” (as evidenced by Nabokov’s own “entomological erudition” and the influence of a most major input: “After reading Gogol,” he once wrote, “one’s eyes become Gogolized. One is apt to see bits of his world in the most unexpected places”).

For me, a kind of referential mania of things unnamed began with fabric swatches culled from Alibaba and fine suiting websites, with their wonderfully zoomed images that give you a sense of a particular material’s grain or flow. The sumptuous decadence of velvets and velours that suggest the gloved armatures of state power, and their botanical analogue, mosses and plant lichens. Industrial materials too: the seductive artifice of Gore-Tex and other thermo-regulating meshes, weather-palimpsested blue tarpaulins and piney green garden netting (winningly known as “shade cloth”). What began as an urge to collect colors and textures, to collect moods, quickly expanded into the delicious world of carnivorous plants and bugs — mantises exhibit a particularly pleasing biomimicry — and deep-sea aphotic creatures, which rewardingly incorporate a further dimension of movement. Walls suggest piled textiles, and plastics the murky translucence of jellyfish, and in every bag of steaming city garbage I now smell a corpse flower.

“The most pleasurable thing in the world, for me,” wrote Kelly, “is to see something and then translate how I see it.” I feel the same way, dosed with a healthy fear of cliché or redundancy. Why would you describe a new executive order as violent when you could compare it to the callous brutality of the peacock shrimp obliterating a crab, or call a dress “blue” when it could be cobalt, indigo, cerulean? Or ivory, alabaster, mayonnaise?

We might call this impulse building visual acuity, or simply learning how to see, the seeing that John Berger describes as preceding even words, and then again as completely renewed after he underwent the “minor miracle” of cataract surgery: “Your eyes begin to re-remember first times,” he wrote in the illustrated Cataract, “…details — the exact gray of the sky in a certain direction, the way a knuckle creases when a hand is relaxed, the slope of a green field on the far side of a house, such details reassume a forgotten significance.” We might also consider it as training our own visual recognition algorithms and taking note of visual or affective relationships between images: building up our datasets. For myself, I forget people’s faces with ease but never seem to forget an image I have seen on the internet.

At some level, this training is no different from Facebook’s algorithm learning based on the images we upload. Unlike Google, which relies on humans solving CAPTCHAs to help train its AI, Facebook’s automatic generation of alt tags pays dividends in speed as well as privacy. Still, the accessibility context in which the tags are deployed limits what the machines currently tell us about what they see: Facebook’s researchers are trying to “understand and mitigate the cost of algorithmic failures,” according to the aforementioned white paper, as when, for example, humans were misidentified as gorillas and blind users were led to then comment inappropriately. “To address these issues,” the paper states, “we designed our system to show only object tags with very high confidence.” “People smiling” is less ambiguous and more anodyne than happy people, or people crying.
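The gap between what the classifier scores and what the screen reader says can be pictured as a simple filter. What follows is a minimal sketch, not Facebook's implementation: the function name `build_alt_text`, the cutoff of 0.8, and every score in the example are invented for illustration, since the white paper says only that tags are shown at "very high confidence."

```python
from typing import Dict

# Hypothetical cutoff: the white paper says only "very high confidence."
CONFIDENCE_THRESHOLD = 0.8


def build_alt_text(scores: Dict[str, float],
                   threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Build screen-reader text from the tags that clear the threshold.

    Tags are listed from most to least confident; an empty string is
    returned when nothing is confident enough to be spoken.
    """
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    kept = [tag for tag, score in ranked if score >= threshold]
    if not kept:
        return ""
    return "Image may contain: " + ", ".join(kept)


# Invented scores for a single photo: what the machine "sees."
scores = {
    "two people": 0.97,
    "smiling": 0.91,
    "sky": 0.88,
    "happy": 0.55,   # seen, but never said
    "crying": 0.20,  # seen, but never said
}

print(build_alt_text(scores))
# Image may contain: two people, smiling, sky
```

In this toy version, "happy" and "crying" are still computed; they simply never reach the alt text.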

So there is a gap between what the algorithm sees (analyzes) and says (populates an image’s alt text with). Even though it might only be authorized to tell us that a picture is taken outside, then, it’s fair to assume that computer vision is training itself to distinguish gesture, or the various colors and textures of the slope of a green field. A tag of “sky” today might be “cloudy with a threat of rain” by next year. But machine vision has the potential to do more than merely confirm what humans see. It is learning to see something different, something that doesn’t reproduce human biases, and to uncover emotional timbres that are machinic. On Facebook’s platforms (including Instagram, Messenger, and WhatsApp) alone, over two billion images are shared every day: the monolith’s referential mania looks more like fact than delusion.

Within the fields of conservation and art history, technology has long been deployed to enable us to see things the naked eye cannot. X-ray imaging and infrared reflectography, used to authenticate paintings and expose forgeries, can reveal, in a rudimentary sense, shadowy spectral forms of figures drafted in the original composition, or original paintings that were later covered up with something entirely different, like the mysterious bowtied thinker under Picasso’s early 1901 work, Blue Room, or the peasant woman overpainted with a grassy meadow in Van Gogh’s 1887 work Patch of Grass, or the racist joke discovered underneath Kazimir Malevich’s 1915 painting Black Square, suggesting a Suprematism underwritten by white supremacy.

But what else can an algorithm see? Given the exponential online proliferation of images of contemporary art, to say nothing of the myriad other forms of human or machine-generated images, it’s not surprising that two computer scientists at Lawrence Technological University began to think about the possibility of a computational art criticism in the vein of computational linguistics. In 2011, Lior Shamir and Jane Tarakhovsky published a paper investigating whether computers can understand art. Which is to say, can they sort images, posit interrelations, and create a taxonomy that parallels what an academic might create? They fed an algorithm around a thousand paintings by 34 artists and found that the network of relationships it generated — through visual analysis alone — very closely matched what has come to be canonized as art history. It was able, for example, to clearly distinguish between realism and abstraction, even if it lacked the appropriate labels: what we today call classical realism and modernism it might identify only as Group A and Group B. Further, it broadly identified sub-clusters of similar painters: Vermeer, Rubens, and Rembrandt (“Baroque,” or “A-1” perhaps); Leonardo da Vinci, Michelangelo, and Raphael (“High Renaissance” or “A-2”); Salvador Dalí, Giorgio de Chirico, Max Ernst (“Surrealism,” “B-1”); Gauguin and Cézanne (“Post-Impressionism,” “B-2”).

When looking at a painting, an art historian might consider the formal elements of line, shape, form, tone, texture, pattern, color and composition, along with other primary and secondary sources. The algorithm’s approach is not dissimilar, albeit markedly more quantitative. As an Economist article called “Painting by Numbers” explains, Shamir’s program

quantified textures and colors, the statistical distribution of edges across a canvas, the distributions of particular types of shape, the intensity of the color of individual points on a painting, and also the nature of any fractal-like patterns within it (fractals are features that reproduce similar shapes at different scales; the edges of snowflakes, for example).
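To get a feel for what "quantifying" a canvas might involve, here is a toy sketch, far simpler than Shamir's actual program and not derived from it. It assumes a painting has already been reduced to a small grid of grayscale values; the three descriptors (average tone, tonal spread, and the share of neighboring pixels that differ sharply) and the `jump` cutoff of 50 are my own stand-ins for the much richer measurements the article lists.

```python
from math import sqrt
from typing import List

Image = List[List[int]]  # grayscale pixel values, 0 (black) to 255 (white)


def edge_density(img: Image, jump: int = 50) -> float:
    """Share of side-by-side or stacked pixel pairs differing by more than `jump`."""
    pairs = 0
    edges = 0
    for y, row in enumerate(img):
        for x, value in enumerate(row):
            if x + 1 < len(row):  # neighbor to the right
                pairs += 1
                edges += abs(value - row[x + 1]) > jump
            if y + 1 < len(img):  # neighbor below
                pairs += 1
                edges += abs(value - img[y + 1][x]) > jump
    return edges / pairs if pairs else 0.0


def features(img: Image) -> List[float]:
    """Describe an image as [average tone, tonal spread, edge density], each 0 to 1."""
    pixels = [value for row in img for value in row]
    mean = sum(pixels) / len(pixels)
    spread = sqrt(sum((v - mean) ** 2 for v in pixels) / len(pixels))
    return [mean / 255, spread / 255, edge_density(img)]


def distance(a: List[float], b: List[float]) -> float:
    """Euclidean distance between two feature lists: smaller means more alike."""
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Three invented 4x4 "paintings."
flat = [[100 for _ in range(4)] for _ in range(4)]
stripes = [[0, 255, 0, 255] for _ in range(4)]
checker = [[255 * ((x + y) % 2) for x in range(4)] for y in range(4)]

print(distance(features(stripes), features(checker)))
print(distance(features(flat), features(checker)))
```

By these crude measures the stripes sit closer to the checkerboard than the flat gray field does, which is the kind of "this looks like that" judgment that, multiplied over thousands of descriptors and paintings, yields a map of resemblances.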

While the algorithm reliably reiterated what art historians have come to agree on, it went even further, positing unexpected links between artists. Paraphrasing Shamir, the Economist article suggests that Vincent Van Gogh and Jackson Pollock, for example, exhibit a “shared preference for low-level textures and shapes, and similarities in the ways they employed lines and edges.” While the outcomes are quite different, the implication is that the two artists employed similar painting methods on a physical level, in ways not immediately discernible to the eye. Were they both double-jointed to the same slight degree? Did they both have especially short thumbs that made them hold the brush a certain way?

Whether Pollock was actually “influenced” by Van Gogh — by mere sight or by private rigorous engagement; in the manner of clinamen, tessera, or osmosis — or not at all, Shamir’s AI insisted on patterns and connections that we, art historians, or even Pollock himself, would miss, dismiss or disown.

What the algorithm is doing, noting that “this thing looks like that thing and also like that thing so they must be related,” is not unlike what a human would do, if so programmed. But rather than relegate the algorithm to looking for the same correspondences that a human might already see and arrive at the same conclusions, could it go further? Could it produce a taxonomy that takes emotional considerations into account?

An automated Tumblr by artist Matthew Plummer-Fernandez called Novice Art Blogger, generated by custom software run on a Raspberry Pi, offers “reviews” of artworks drawn from Tate’s archive, written by a bot. In a sense, it serves as an extension of what critic Brian Droitcour has called “vernacular criticism”: “an expression of taste that has not been fully calibrated to the tastes cultivated in and by museums.” The Tumblr suggests a machine vision predicated not only on visual taxonomies, as with Facebook’s or Shamir’s algorithms, but also on an emotional register — that intangible quality that turns “beach, sunset, two people, smiling” into “a fond memory of my sister’s beach wedding.” NAB’s tone is one of friendly musing, with none of the exclamatory bombast of the more familiar review bots one finds populating comment sections. This one first generates captions and then orders them, rephrasing them in “the tone of an art critic.”

Take Jules Olitski’s 1968 gorgeously luminous lilac, dusky pink and celery wash of a painting Instant Loveland: “A pair of scissors and a picture of it,” NAB says, “or then a close up of a black and white photo. Not dissimilar from a black sweater with a blue and white tie.” Or John Hoyland’s screenprint Yellows, 1969: I see tangerine and chartreuse squares on dark khaki, the former outlined on two sides in crimson and maroon. NAB, however, sees “A picture of a wall and blue sign or rather a person stands in front of a blue wall. I’m reminded of a person wearing all white leaned up against a wall with a yellow sign.” Especially delightful are the earnest little anecdotes it sometimes appends to its reviews, a shy offering. “I once observed two birds having sex on top of a roof covered in tile” on Dieter Roth’s Self-Portrait as a Drowning Man, 1974; “I was once shown a book, opened up showing the front and back cover” on Richard Long’s River Avon Book, 1979; “It stirs up a memory of a cake in the shape of a suitcase” on Henry Moore’s Stringed Figure, 1938/cast 1960. Clearly, it’s not very good at colors or even object recognition — perhaps it should consult with @_everybird_ — but there’s still something charming in seeing through its eyes, in being able to feel what it feels.

Eliezer Yudkowsky, in considering the difference between two different neural networks — a more chaotic and unpredictable Network 1, wherein all units (texture, color, shape, luminance) of the object it sees are testable, and a “more human” Network 2, wherein all roads lead to a more central categorization — describes their separate intuitions this way:

We know where Pluto is, and where it’s going; we know Pluto’s shape, and Pluto’s mass — but is it a planet? There were people who said this was a fight over definitions — but even that is a Network 2 sort of perspective, because you’re arguing about how the central unit ought to be wired up. If you were a mind constructed along the lines of Network 1, you wouldn’t say “It depends on how you define ‘planet’,” you would just say, “Given that we know Pluto’s orbit and shape and mass, there is no question left to ask.” Or, rather, that’s how it would feel — it would feel like there was no question left. Before you can question your intuitions, you have to realize that what your mind’s eye is looking at is an intuition — some cognitive algorithm, as seen from the inside.

What the Novice Art Blogger doesn’t know is art history. It doesn’t recognize Olitski’s canvas as an example of Color Field painting, or distinguish between the myriad subgenres of abstraction in contemporary art, but perhaps that doesn’t matter. It’s Trump’s America. Maybe it truly is less important to know and more important, instead, to feel.