Once upon a time I intended to do a project about the ways that black folks experienced how they were perceived in America. It would document those times when something happens and you say to yourself, “Wow, in the eyes of some folks, I’m still just a black person.” Every black person I’ve ever met has had at least one of these moments, and often several, even after they became “successful.” My title for the project was “Still a Negro.”

The idea was partly inspired by the years when I taught at a university in Detroit in a summer outreach program for kids, mainly from the city’s middle and high schools, who were considering an engineering career. Normally I taught in the liberal arts building, but the outreach program was in the slightly less broken-down engineering building across campus. Often I would go over early to use the faculty copier there, and every year I taught in the program (eight years total) someone from the engineering faculty would reprimand me for using the copier. I knew it would happen, and yet each time I was surprised.

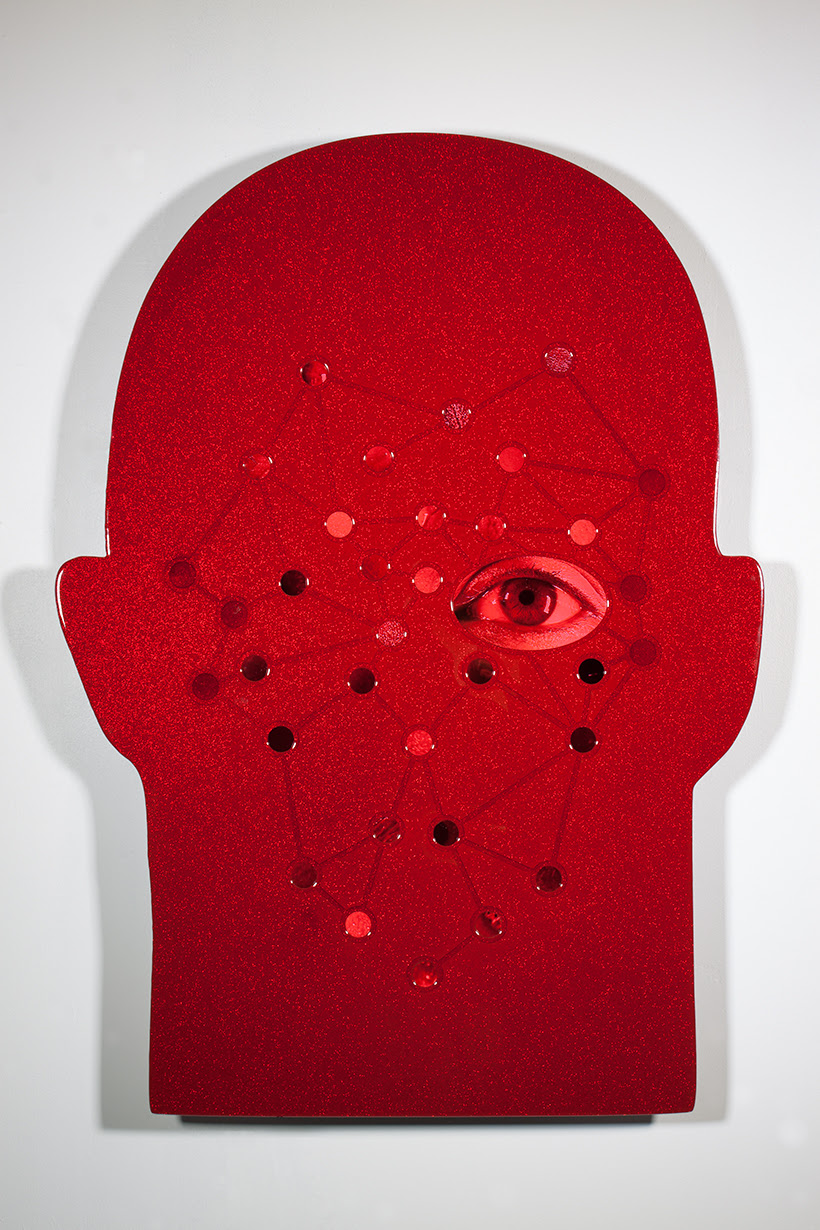

Reprimand is probably not a strong enough word to cover the variety of glares, stares, and accusations I elicited. I guess it’s possible that they didn’t recognize me as one of their colleagues, but what pissed me off was that their reactions suggested that they didn’t believe I could be: They gaped at me, accused me of lying, demanded proof that I really was faculty, or announced that they weren’t going to argue with a student. Simple courtesy should have prevented such behavior. Since my own behavior was not out of place, it could only mean that I looked out of place. And then, for the ten millionth time, I would say to myself, “Oh my god! I forgot that I’m still a Negro.”

The fact that this happened in the engineering department was not lost on me. Questions about the inclusivity of engineering and computer science departments have been going on for quite some time. Several current “innovations” coming out of these fields, many rooted in facial recognition, are indicative of how scientific racism has long been embedded in apparently neutral attempts to measure people — a “new” spin on age-old notions of phrenology and biological determinism, updated with digital capabilities.

Only the most mundane uses of biometrics and facial recognition are concerned solely with identifying a specific person, matching a name to a face or using a face to unlock a phone. Typically these systems are invested in taking the extra steps of assigning a subject to an identity category in terms of race, ethnicity, gender, and sexuality, and matching those categories with guesses about emotions, intentions, relationships, and character to shore up forms of discrimination, both judicial and economic.

Certainly the practice of coding difference onto bodies is not new; determining who belongs in what categories (think about battles over citizenship, the “one drop” rule of blackness, or discussions about how to categorize trans people) is a longstanding historical, legal, and social project made more real and “effective” by whatever technologies are available at the time. As Simone Browne catalogs in Dark Matters, her groundbreaking work on the historical and present-day surveillance of blackness, anthropometry

was introduced in 1883 by Alphonse Bertillon as a system of measuring and then cataloguing the human body by distinguishing one individual from another for the purposes of identification, classification, and criminal forensics. This early biometric information technology was put to work as a ‘scientific method’ alongside the pseudo-sciences of craniometry (the measurement of the skull to assign criminality and intelligence to race and gender) and phrenology (attributing mental abilities to the shape of the skull, as the skull was believed to hold a brain made up of the individual organs).

A key to Browne’s book is her detailed look at the way that black bodies have consistently been surveilled in America: The technologies change, but the process remains the same. Browne identifies contemporary practices like facial recognition as digital epidermalization: “the exercise of power cast by the disembodied gaze of certain surveillance technologies (for example, identity card and e-passport verification machines) that can be employed to do the work of alienating the subject by producing a ‘truth’ about the body and one’s identity (or identities) despite the subject’s claims.”

Iterations of these technologies are already being used in airports, at borders, in stadiums, and in shopping malls, not just in countries like China but in the United States. A number of new companies, including Faception, NTechLab, and BIOPAC Systems, are advancing the centuries-old project of phrenology, making the claim that machine learning can detect discrete physical features and make data-driven predictions about the race, ethnicity, sexuality, gender, emotional state, propensity for violence, or character of those who possess them.

Many current digital platforms follow the same process of writing difference onto bodies: extracting data and then using “code” to define who is what. Such acts of biometric determinism fit with what has been called surveillance capitalism, defined by Shoshana Zuboff as “the monetization of free behavioral data acquired through surveillance and sold to entities with interest in your future behavior.” Facebook’s use of “ethnic affinity” as a proxy for race is a prime example. The platform’s interface does not offer users a way to self-identify according to race, but advertisers can nonetheless target people based on Facebook’s ascription of an “affinity” along racial lines. In other words, race is deployed as an externally assigned category for purposes of commercial exploitation and social control, not part of self-generated identity for reasons of personal expression. The ability to define one’s self and tell one’s own stories is central to being human and how one relates to others; platforms’ ascribing identity through data undermines both.

These code-derived identities in turn complement Silicon Valley’s pursuit of “friction-free” interactions, interfaces, and applications in which a user doesn’t have to talk to people, listen to them, engage with them, or even see them. From this point of view, personal interactions are not vital but inherently messy, and presupposed difference (in terms of race, class, and ethnicity) is held responsible. Platforms then promise to manage the “messiness” of relationships by reducing them to transactions. The apps and interfaces create an environment where interactions can happen without people having to make any effort to understand or know each other. This is a guiding principle of services ranging from Uber, to Amazon Go grocery stores, to touchscreen-ordering kiosks at fast-food joints. At the same time racism and othering are rendered at the level of code, so certain users can feel innocent and not complicit in it.

In an essay for the engineering bulletin IEEE Technology and Society, anthropologist Sally Applin discusses how Uber “streamlined” the traditional taxi ride:

They did this in part by disrupting dispatch labor (replacing the people who are not critical to the actual job of driving the car with a software service and the labor of the passenger), removing the language and cultural barriers of communicating directly with drivers (often from other countries and cultures), and shifting traditional taxi radio communications to the internet. [Emphasis added]

In other words, interacting with the driver is perceived as a main source of “friction,” and Uber is experienced as “seamless” because it effaces those interactions.

But this expectation of seamlessness can intensify the way users interpret difference as a pretext for a discount or a bad rating. As the authors of “Discriminating Tastes: Uber’s Customer Ratings as Vehicles for Workplace Discrimination” point out:

Because the Uber system is designed and marketed as a seamless experience (Uber Newsroom, 2015), and coupled with confusion over what driver ratings are for, any friction during a ride can cause passengers to channel their frustrations

In online markets, consumer behavior often exhibits bias based on the perceived race of another party to the exchange. This bias often manifests via lower offer prices and decreased response rates … More recently, a study of Airbnb … found that guests with African–American names were about 16 percent less likely to be accepted as rentees than guests with characteristically white names.

“Ghettotracker,” which purported to identify neighborhoods to avoid, and other apps like it (SafeRoute, Road Buddy) are further extensions of the same data-coding and “friction”-eliminating logic. These apps allow for discrimination against marginalized communities by encoding racist articulations of what constitutes danger and criminality. In effect, they extend the logic of policies like “broken windows” and layer a cute interface on top of it.

Given the primacy of Google Maps and the push for smart cities, what happens when these technologies are combined in or across large platforms? Even the Netflix algorithm has been critiqued for primarily offering “Black” films to certain groups of people. What happens when the stakes are higher? Once products and, more important, people are coded as having certain preferences and tendencies, the feedback loops of algorithmic systems will work to reinforce these often flawed and discriminatory assumptions. The presupposed problem of difference will become even more entrenched, and the chasms between people will widen.

At its root, then, surveillance capitalism and its efficiencies ease “friction” through dehumanization on the basis of race, class, and gender identity. Its implementation in platforms might be categorized as what, in Racial Formation in the United States, Michael Omi and Howard Winant describe as “racial projects”: “simultaneously an interpretation, representation, or explanation of racial dynamics and an effort to reorganize and redistribute resources along particular racial lines.”

The impulse to find mathematical models that will not only accurately represent reality but also predict the future forces us all into a paradox. It should be obvious that no degree of measurement, whether done by calipers or facial recognition, can accurately determine an individual’s identity independent of the social, historical, and cultural elements that have informed identity. Identification technologies are rooted in the history of how our society codes difference, and they have proved a profitable means of sustaining the regimes grounded in the resulting hierarchies. Because companies and governments are so heavily invested in these tools, critics are often left to call for better representation and more heavily regulated tools (rather than their abolishment) to at least “eliminate bias” to the extent that fewer innocent people will be tagged, detained, arrested, scrutinized, or even killed.

Frank Pasquale, in “When Machine Learning Is Facially Invalid,” articulates this well: The vision of better “facial inference projects” through more complete datasets “rests on a naively scientistic perspective on social affairs,” but “reflexivity (the effect of social science on the social reality it purports to merely understand) compromises any effort (however well intended) to straightforwardly apply natural science methods to human beings.”

There is no complete map of difference, and there can never be. That said, I want to indulge proponents of these kinds of tech for a moment. What would it look like to be constantly coded as different in a hyper-surveilled society, one where there was large-scale deployment of surveillant technologies with persistent “digital epidermalization” writing identity onto every body within the scope of its gaze? I’m thinking of a not-too-distant future where not only businesses and law enforcement constantly deploy this technology, as with recent developments in China, but also where citizens going about their day use it as well, wearing some version of Google Glass or Snapchat Spectacles to avoid interpersonal “friction” and identify the “others” who do or don’t belong in a space at a glance. What if Permit Patty or Pool Patrol Paul had immediate, real-time access to technologies that “legitimized” black bodies in a particular space?

I don’t ask this question lightly. Proponents of persistent surveillance articulate some form of this question often and conclude that a more surveillant society is a safer one. My answer is quite different. We have seen on many occasions that more and better surveillance doesn’t equal more equitable or just outcomes, and often results in the discrimination being blamed on the algorithm. Further, these technological solutions can render the bias invisible. While not based on biometrics, think about the difference between determining “old-fashioned” housing discrimination vs. how Facebook can be used to digitally redline users by making ads for housing visible only to white users.

But I’d like to take it a step further. What would it mean for those “still a Negro” moments to become primarily digital, invisible to the surveilled yet visible to the people performing the surveillance? Would those being watched and identified become safer? Would interactions become more seamless? Would I no longer be rudely confronted while making copies?

The answer, on all counts, is no, and not just because these technologies cannot form some complete map of a person, their character, and their intent. While it would be inaccurate to say that I as a Black man embrace the “still a Negro” moments, my experiencing them gives me important information about how I’m perceived in a particular space and even to what degree I’m safe in that space. The end game of a surveillance society, from the perspective of those being watched, is to be subjected to the whims of black-boxed code extended to the navigation of spaces, which are systematically stripped of important social and cultural clues. The personalized surveillance tech, meanwhile, will not make people less racist; it will make them more comfortable and protected in their racism.