Over the past two decades, millions of dollars have been spent on equipping elementary and secondary school campuses with military-grade surveillance tech that promises to amplify the reach and efficiency of school security personnel. As costly low-tech interventions like metal detectors have fallen out of vogue, a slew of AI-powered tools has entered the market, promising to transform older surveillance systems such as closed-circuit television cameras into “smart tools” for proactive threat detection. For example, companies like ZeroEyes offer AI-powered software that they claim can automatically detect the presence of a gun within seconds.

Early implementations of such software, however, have struggled to tell broom handles from guns, raising questions about how well the technology actually works. To date, very little evidence suggests that technologies like facial recognition, social-media monitoring, and pervasive environmental sensors (e.g., live audio feeds, vape and THC detectors, “aggression” analysis) are effective at keeping kids safe. Yet there is mounting evidence that such tools perpetuate an environment in which Black and Brown students are more likely to be subjected to exclusionary discipline, often for minor infractions. The opacity of these systems makes it all the more challenging to understand when and where kids are being tracked and how such monitoring fuels stereotypes about who needs to be punished and who deserves protection in the classroom.

The booming school security industry, now worth an estimated $2.7 billion (not including the billions spent on armed campus police officers every year), has grown steadily even though shooting incidents involving students have been on the decline since the early 1990s, when children were four times more likely to be killed at school than they are today. Despite the high visibility of tragic events like the recent school shooting at Oxford High School in Michigan, school campuses remain among the safest places for children to spend their time. According to a recent analysis of federal crime data, kids are far more likely to be murdered at home than at school: school-based homicides represent only one to two percent of all homicides of school-age children. More kids die each year from pool drownings or bicycle accidents than from school violence.

So what explains the significant increase in spending on school safety? In the school-security sector, crisis is good for business. Scholars have argued that the adoption of security measures often stems from fear-based moral panics in the wake of a gruesome tragedy, such as a mass shooting, rather than from a careful examination of the evidence regarding school safety. The industry has had an easy time selling schools on new equipment, since government funds are readily available for the purpose of “hardening” school campuses. After Sandy Hook, President Obama signed an executive order that provided federal funding for more school resource officers — armed law enforcement personnel with the power to arrest students — and the purchase of additional security equipment, despite the lack of evidence that such interventions reduce school violence or rates of crime on campus. “Even 17 years after the first major school shooting to make national headlines, the research examining the effectiveness of these measures is still lacking,” criminologist Cheryl Jonson argued in a 2017 review of the existing literature.

School security companies have also been able to take advantage of other moments of crisis, tapping into funding sources earmarked for more general disaster and emergency response, such as FEMA’s Homeland Security Grant Program, which was introduced after schools were identified as sites of potential terrorist attacks in the years following 9/11.

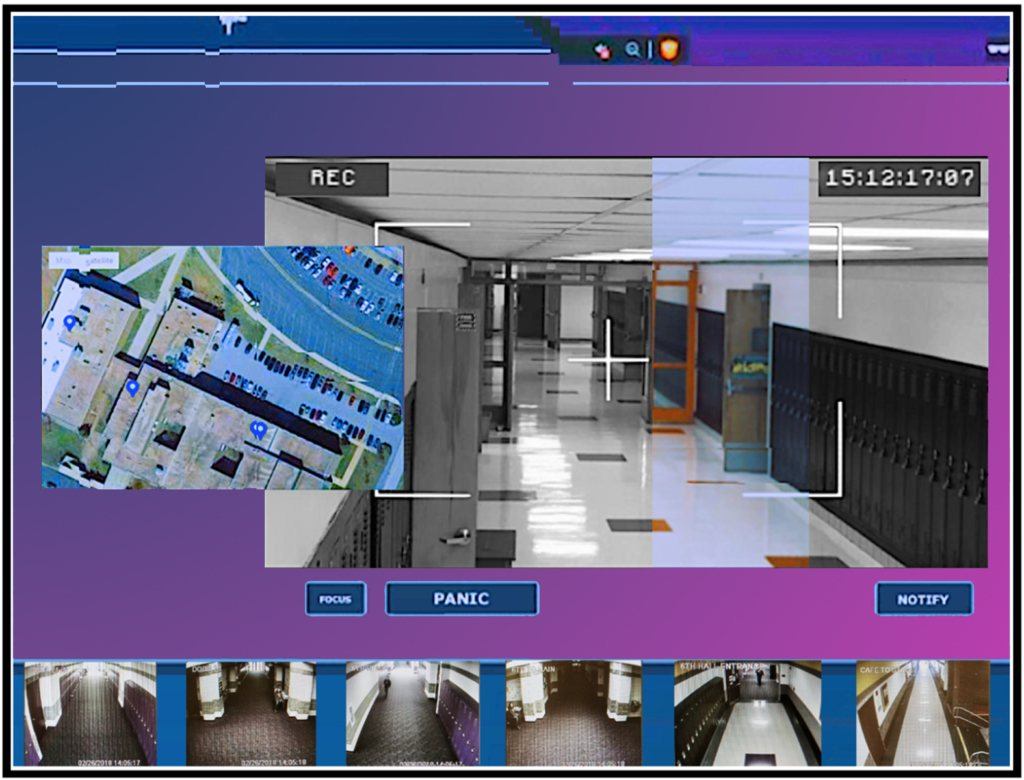

Today, school-security initiatives are driven largely by the goal of pre-emption, which has been used to justify ever more pervasive forms of surveillance that extend far beyond school gates. For example, most states now have cyberbullying laws that grant schools the authority to surveil students online, even when their behavior has no direct connection to school-related activities. As a result, a growing number of security companies now offer machine-learning software that scans students’ social media posts and flags what it deems potentially dangerous or threatening behavior. Such tools are a major growth vector for the school-safety industry, which promises to translate an ever-increasing number of data streams into “actionable intelligence” that vendors claim can be used to intervene before a tragedy occurs. Those streams include live video and audio feeds, sensors that detect a wide range of environmental factors, and analytics tools that track students’ off-campus activities online.

Given how rare school shootings are, scholars of violence prevention have argued that such events are virtually impossible to predict or model through computational methods. The vast majority of students will never perpetrate an act of mass violence on school grounds, which means that any software designed to identify potential threats is likely to generate a large number of false positives, labeling kids as threats even when they aren’t. Scholars have tried to pinpoint causal factors that lead to school shootings, including a history of being bullied, mental illness, exposure to violent video games, and an interest in weapons, but none has demonstrated a significant effect on the likelihood of becoming a school shooter. As a result, the profile of a typical school shooter remains elusive, and claims that social media monitoring can help identify credible threats are, as legal scholar Amy B. Cyphert argues, “questionable at best.”
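To see why rarity alone produces so many false alarms, consider a back-of-the-envelope calculation using Bayes’ rule. The sketch below is a minimal illustration, not a model of any real product; every number in it (one million monitored students, ten genuine threats, a tool that catches 99 percent of real threats and wrongly flags 1 percent of everyone else) is hypothetical and chosen only to make the arithmetic concrete.

```python
def positive_predictive_value(population: int, true_threats: int,
                              sensitivity: float, false_positive_rate: float) -> float:
    """Share of flagged students who are actual threats (Bayes' rule, expressed with counts)."""
    non_threats = population - true_threats
    true_positives = sensitivity * true_threats           # real threats the tool catches
    false_positives = false_positive_rate * non_threats   # harmless students wrongly flagged
    return true_positives / (true_positives + false_positives)


# Hypothetical figures for illustration only; not drawn from any vendor or study.
population = 1_000_000      # students monitored
true_threats = 10           # students who would actually commit an attack
sensitivity = 0.99          # share of real threats the tool flags
false_positive_rate = 0.01  # share of harmless students the tool flags anyway

ppv = positive_predictive_value(population, true_threats, sensitivity, false_positive_rate)
false_alarms = false_positive_rate * (population - true_threats)

print(f"Flagged students who are real threats: {ppv:.2%}")
print(f"Harmless students flagged: {false_alarms:,.0f}")
```

Under these assumed accuracy figures, roughly 10,000 harmless students would be flagged, and only about one flag in a thousand would point to a real threat. Lowering the share of genuine threats in the population makes that ratio worse, not better.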

Instead, such technologies are more likely to be used day to day for addressing minor disciplinary issues. Take, for example, the Halo IOT Smart Sensor, which is marketed as an “internet of things” device for spaces where officials can’t place cameras because of privacy concerns, such as locker rooms. In addition to being pitched as a tool for locating gunshots, Halo offers a sensor that detects whether kids are vaping in bathrooms and hallways, as well as audio sensors that alert security officials when an “abnormal sound” is detected in private spaces. While some of these capabilities are fairly straightforward (e.g., humidity and smoke detection), others, such as “aggression detection,” are fraught with errors and laden with the potential for racial bias.

Such tools raise questions about how surveillance widens the net of punishment in schools, as it is used to criminalize youth for minor infractions rather than to detect dangerous criminal activity. It wouldn’t be the first time that school safety has been used to justify the expansion of exclusionary discipline practices (e.g., expulsion, suspension, transfer to a disciplinary facility). For example, the Gun-Free Schools Act of 1994 mandated the expulsion of any student who brought a gun on campus, inaugurating an era of “zero tolerance” policies meant to remove students deemed a threat to school safety. Yet such policies were quickly expanded to justify excluding students for minor behavioral issues like truancy and vandalism. According to data released by the Texas Education Agency, more than 90 percent of disciplinary actions taken in Texas schools are for “code of conduct” violations, such as tardiness, illicit cell phone use, and dress code violations. Expanded use of school surveillance is likely to amplify these trends in ways that disproportionately affect students of color.

The fact that Black and Latinx students are more likely than White students to be punished at school is no longer up for debate. Numerous studies have documented how Black students are frequently disciplined for infractions that are both less serious and more discretionary than those of their White peers. A recent study found that schools with a larger percentage of Black students are more likely to deploy exclusionary discipline practices than schools with majority White students, even after controlling for rates of misbehavior. As with other policies that fuel the school-to-prison pipeline, the burdens of heightened surveillance are more likely to fall on the shoulders of Black and Latinx kids. Black youth are four times more likely to attend a high-surveillance school than a low-surveillance one, which increases their likelihood of being suspended and decreases their likelihood of attending college.

When it comes to school security, it’s important to think about the broader culture of discipline within schools and the interpretive lenses used to make sense of student behavior. A school’s racial composition profoundly shapes how school authorities perceive and manage threats. For example, middle and high schools with majority Black student populations are more likely to have police on campus than to have support staff such as guidance counselors. A recent study revealed that law enforcement officers in schools with a larger proportion of White students were primarily concerned with protecting against external threats to the school, whereas officers in schools with a larger Black population viewed the students themselves as the primary threat to be managed. As sociologist Aaron Kupchik argues in The Real School Safety Problem: The Long-Term Consequences of Harsh School Punishment, “It seems that who goes to a school helps shape its security practices, not just what behaviors they demonstrate.”

Even within a single school, it’s likely that discrepancies will arise in who is monitored and punished with the help of high-tech security tools. A group of researchers from Yale found that as early as preschool, teachers tend to more closely observe Black boys when primed to expect bad behavior in the classroom. Surveillance technology is likely to amplify these trends. In places like Texas City, where 71 percent of the student body is Black or Latinx, concerns have already been raised about the ways that video surveillance has been used to investigate minor behavioral issues, such as a student throwing chocolate milk on a group of cheerleaders at a pep rally.

Time and again, rare incidents of horrific violence, largely perpetrated by young White males, are invoked as a means of justifying punitive practices that disproportionately impact vast swaths of Black and Brown youth. As scholar Ruth Wilson Gilmore explains, the “terrible few” serve as a Trojan horse for introducing far-reaching carceral policies, as “the few whose difference might horribly erupt stand in for the many whose difference is emblazoned on surfaces of skin, documents, and maps.”

Because new digital technologies operate far more behind the scenes than earlier school-security interventions, their racialized impact is less visible. School administrators collaborate with law enforcement to develop profiles of “risky” kids, often without the knowledge or consent of parents and students. For example, the Southern Poverty Law Center found that students of color were far more likely to be suspended or expelled for their social media posts in Huntsville, Alabama, where school officials hired a former FBI agent to monitor student activity online. One Black student was suspended for five days after an officer flagged her as a potential gang member. Only later was the reason for her suspension revealed: she had posted a picture of herself wearing a sweatshirt that featured an image of her father, who had recently been murdered.

Technology vendors have leaned on the technical specifications of their tools to try to make the case that their surveillance systems are colorblind and fair. For example, AnyVision, a facial-recognition company with contracts in schools across the country, recently sponsored a research competition that it called the “Fair Face Challenge,” which asked technical experts to “eliminate ethnic bias” in facial-recognition algorithms. On the surface, such efforts might seem like good-faith attempts to address racial and gender discrimination in AI systems. Yet by narrowly defining fairness in terms of statistical accuracy, AnyVision diverts attention away from real-world impact and onto computational issues that require specialized training to fully grasp.

But these tools do not exist in a vacuum. Every day, more examples emerge of the ways technically “fair” systems are routinely used in harmful ways. Algorithms designed to allocate health care resources to patients have been shown to systematically discriminate against Black people. Efforts to modernize large bureaucratic systems have led to the exclusion of thousands of poor people from essential social services.

Shifting the conversation to technical concerns about statistical accuracy sidesteps deeper questions about how technology is used to fuel the criminalization and social control of marginalized groups. “Subjugation, after all, is hardly ever the explicit objective of science and technology,” scholar Ruha Benjamin has argued. “Instead, noble aims such as ‘health’ and ‘safety’ serve as a kind of moral prophylactic for newfangled forms of social control.”

Amid these developments, a growing number of students are beginning to push back on the presence of law enforcement and police technology on their school campuses. For example, last year, some former and current students from Fort Bend Independent School District, one of the largest and most diverse school districts in Texas, formed a coalition to advocate for racial justice on their campuses. Following the lead of the Defund campaigns that emerged in the summer of 2020, the students demanded that administrators increase funding for social-work programs by reducing spending on security and monitoring, a budget that outstripped spending on social work by almost five to one.

Meanwhile, school security remains a lucrative and thriving industry. But as the students in Fort Bend show, some are ready to offer an alternative vision of what it means to keep kids safe: one that includes the potential for every student to thrive within school walls.