As families watched from home, Dick Clark stood on the steps of the town hall hosting the final New Year’s Rocking Eve of the millennium. The excited crowd chanted the countdown as the year entered its last 10 seconds, but when the ball dropped, the cries of “Happy New Year!” became shouts of “Happy New Wha!?!?” as the year switched from 1999… to 1900. Within seconds, screams filled the crowd as traffic lights exploded, drivers lost control of their cars, rotating restaurants spun out of control, planes fell from the sky, and household appliances went on the attack. And all because the Y2K compliance officer at a small town’s nuclear power plant had not gotten the job done, thereby triggering a calamitous domino effect causing anything and everything containing a computer chip to go haywire. Wandering through the chaos and looting that had overtaken the town’s streets, a girl walking with her family commented, “Well look at the wonders of the computer age now.” Her father, the offending compliance officer, replied, “Wonders Lisa, or blunders?” She retorted, “I think that was implied by what I said.”

In case you haven’t already guessed, the family in question was none other than the Simpsons, from the cartoon bearing their name, and the scenes taking place were part of the show’s Halloween episode, which aired on October 31, 1999. Homer had been in charge of making sure Springfield’s nuclear power plant was Y2K compliant, and as audiences would expect of Homer, he hadn’t. By the episode’s end, the country’s brightest minds are boarding a rocket ship for Mars, while mushroom clouds engulf the Earth.

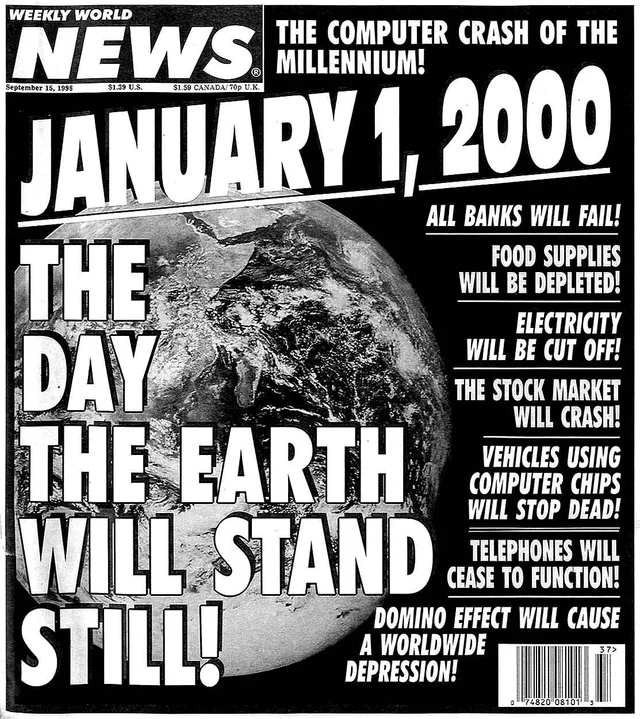

This dramatization of what could happen as 1999 became 2000 was obviously ridiculous. No one really believed that Y2K was going to cause waffle irons to develop a taste for human blood, or traffic lights to fire lasers at people on the streets. But blackouts, elevator failures, problems with embedded microchips, social unrest, and noncompliant systems causing cascading failures — those possibilities were not so outlandish.

While The Simpsons was clearly satirizing Y2K anxiety, the fact is that “Life’s a Glitch, Then You Die” could not have been made had there not been something to satirize. Y2K had been a matter of concern within the IT, business, and government sectors throughout the mid-1990s, but public awareness exploded in the closing years of the decade. In June 1997, Newsweek warned on its cover of “The Day the World Crashes,” cautioning its readers that on Y2K, “The power may go out… the elevator that took you up to the party ballroom may be stuck in the ground floor… the traffic lights might be on the blink.” Wired Magazine — which released a completely black “Lights Out” cover — declared 1998 to be “the year the world woke up to Y2K.”

Hyperbole on the newsstand was echoed in film, television, and other media. There was the eminently forgettable Y2K: The Movie, which saw Ken Olin rushing to avert a Y2K-related nuclear meltdown just outside of Seattle. Even Superman found himself battling Y2K (or, more accurately, battling Brainiac, who inserted his own bug into LexCorp’s “Y2Kompliance” software). On 60 Minutes, Steve Kroft informed viewers that “If you fly in airplanes, depend on elevators, are hooked up to a water or sewer system, collect Medicare or Medicaid, visit automated bank tellers, or use electricity, you’re likely to have some part of your life disrupted.” One person preparing for such disruptions told the camera, “It could be bedlam so we’re stocking up,” before revealing a storm cellar filled with McDonald’s French fries. (It was an advertisement.)

As 1999 crept to a close, the New York Times reported that surveys and polls suggested “Americans are confident computer glitches will not have much impact on their lives.” You wouldn’t know it from mainstream media, which tended to highlight (and gently mock) those heading for the hinterlands, much to the chagrin of those trying to disseminate a calm explanation. Amid the deluge of coverage was a steady drumbeat of reassurances from official sources that the problem was being handled. In his 1999 State of the Union address, President Clinton declared, “We also must be ready for the 21st century from its very first moment, by solving the so-called Y2K computer problem.” But even as he emphasized “this is a big, big problem,” he hastened to reassure everyone “we’ve been working hard on it.”

By that point, most within the IT, business, and government sectors were fairly confident that the measures they’d taken would prevent a major crisis. And as it turned out, they were right. When we remember Y2K today, we usually wind up talking about the loudest (and silliest) voices of doom. In the midst of the climate crisis and the pandemic, something about Y2K feels almost quaint. Indeed, some have joked on social media that the pandemic is what we thought Y2K would be, and that we were better prepared for Y2K than we were for the pandemic. While it feels somewhat crass to compare Y2K to a deadly pandemic that has claimed millions of lives, such comparisons speak to the challenge of recalling the apocalyptic hype while overlooking the work that went into making sure such hype wound up just being hype.

The Millennium Bug threatened to hatch as 1999 became 2000, but its eggs were laid in the 1960s. Today, when carrying hundreds of gigabytes in your pocket is the norm, it may seem odd to recall a time when computer memory was limited enough (meaning expensive enough) that programmers decided to save memory space (and thus money) by representing dates using six characters instead of eight. This meant that the first two digits of the year were dropped: August 14, 1956 would be stored as 081456 (instead of 08141956). Whether calculating social security payments or expiration dates, computerized systems were fine so long as they could assume that the century digits were 19. Once those century digits became 20, calculations could go wrong: a credit card with a 00 expiration date could be interpreted by a computer as one that had expired nearly a century ago; an interest payment could be calculated based on the wrong century.
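To make the failure concrete, here is a minimal sketch of the two-digit-year assumption described above. It is written in Python purely for illustration, not in the languages of the period, and every function name in it is hypothetical rather than taken from any real system.

```python
from datetime import date


def parse_mmddyy(stamp: str, century: int = 1900) -> date:
    """Parse a six-character MMDDYY stamp, assuming a fixed century (hypothetical helper)."""
    month = int(stamp[0:2])
    day = int(stamp[2:4])
    two_digit_year = int(stamp[4:6])
    return date(century + two_digit_year, month, day)


def card_is_expired(expiry_yy: int, today: date, century: int = 1900) -> bool:
    """Decide whether a card has expired using only a two-digit expiration year."""
    return century + expiry_yy < today.year


def years_elapsed(start_yy: int, end_yy: int) -> int:
    """Elapsed years from two-digit years alone, as an interest calculation might use."""
    return end_yy - start_yy


# While every date falls in the 1900s, the assumption holds.
birthday = parse_mmddyy("081456")  # August 14, 1956

# A card expiring in "00" (meaning 2000) is read as 1900 and rejected in 1999.
wrongly_expired = card_is_expired(0, date(1999, 12, 31))

# A loan running from 1995 to 2000 appears to span a negative number of years.
loan_span = years_elapsed(95, 0)
```

In this sketch the birthday parses correctly, while the card is flagged as expired and the loan span comes out as -95 years instead of 5. Nothing crashes: the code simply returns confident, wrong answers, which is the kind of incorrect output that could then spread to other systems.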

When the century flipped over, and computers did encounter these dates, there was a chance that nothing would go wrong. There was also a chance that the computer would churn out incorrect information that would spread throughout other systems; and, in the most worrisome situations, a chance that some computers would shut down entirely. As a computer breakdown on this scale was a novel danger, the implications of the worst-case scenario were a matter of some contention — given how reliant so many systems had become on the functioning of computers, a major computer crash could potentially ripple across everything from banking, to hospitals, to the grid itself. And while experts disagreed on just how bad the worst-case scenario might look, they were sufficiently spooked to insist it was necessary to avoid finding out.

The House of Representatives held its first Y2K-related hearing in 1996. The Congressional Research Service, at the urging of Senator Daniel Moynihan, prepared its initial assessment of the problem that same year. In 1998, the Senate’s Special Committee on the Year 2000 Problem was established, and John Koskinen was appointed “Millennium Czar” by the Clinton Administration. IT professionals had noted the risk long before government officials did. Heck, the computer scientist Bob Bemer was warning about the problem in 1971. By 1988, a headline in the New York Times warned that “for computers, the year 2000 may prove a bit traumatic.” In 1993, the software engineer Peter de Jager truly sounded the alarm when he published an article in Computerworld called “Doomsday 2000.” While he didn’t pick the title himself, de Jager compared Y2K to “a car crash,” while warning “we must start addressing the problem today or there won’t be enough time to solve it.” Generations of IT professionals had allowed the problem to fester, confident that somebody else would come along and fix it. “With less than seven years to go,” de Jager wrote, “someone is going to be working overtime.” The “someone” in question included the readers of tech publications like Computerworld.

De Jager was outspoken, but by no means alone. Capers Jones, the chairman of Software Productivity Research, Inc., assessed “The Global Economic Impact of the Year 2000 Software Problem” and described it as resembling “the slow accumulation of arsenic.” Some members of the IT community went further: The software engineer Edward Yourdon, along with his daughter Jennifer, would later publish the bestselling Time Bomb 2000, in which they wrote, “The more we’ve investigated the situation during the course of preparing this book, the more worried we have become.” The book was rich in technical details, basing its analysis in Yourdon’s impeccable IT credentials; and while it didn’t veer into religious apocalypticism, it continually put forth grim tidings.

The assessments coming from the U.S. government were also fairly pessimistic, initially. In 1996, Representative Stephen Horn, among the first elected officials to take the threat seriously, began issuing a series of “report cards” to grade the government’s readiness for Y2K. In his second report card of 1998, he assigned the government an F. Clinton’s “Y2K czar,” John Koskinen, reassured the public that the problem was well in hand. But his claims were often held up by media outlets in contrast to grimmer pronouncements made by officials like Horn.

As public and political interest in Y2K picked up, those who had been issuing warnings for and within the IT community also found themselves speaking to a broader public. They were summoned to testify before various Congressional committees and quoted for their expertise in newspapers. Their more pessimistic pronouncements were seized upon by those on the fringe as proof that the danger was catastrophic; mainstream media seized on this fringe at the expense of real professional assessments. As Howard Rubin commented in his “Diary of a Y2K Consultant” (which appeared in IEEE’s Computer Magazine), “they don’t really want to speak to me, they want the crazies.”

When the Special Committee on the Year 2000 Problem issued its initial report, on February 24, 1999, anyone who had hoped that its findings would squash public anxiety was almost certainly disappointed. While the committee borrowed a phrase from Mark Twain to reassure readers that “The good news is the talk of the death of civilization… has been greatly exaggerated,” they immediately added, “The bad news is that committee research has concluded that the Y2K problem is very real and that Y2K risk management efforts must be increased to avert serious disruptions.” A major role of the committee was to keep pressure and focus on the issue; the report repeatedly stressed that even though work was being done, much work remained. The committee noted that they did not believe the U.S. would “experience nation-wide social or economic collapse.” But it’s not entirely reassuring when a Senate committee feels compelled to note that “nation-wide social or economic collapse” won’t happen.

The question of what the “serious disruptions” might look like, how severe they would be, and how to appropriately prepare for them provided fertile soil for many anxieties. Business leaders and government officials sought to reassure the public that, even in the face of uncertainty, the potential disruptions were unlikely to be much more than bumps in the road. But a not inconsiderable number of people were bracing for the worst — and many media outlets were only too happy to devote ample coverage to those filling up their basements with extra canned goods and toilet paper.

In expressing warnings, many members of the IT community emphasized that their hope was to frighten those in authority into action. Similarly, many figures within the government were trying to exert pressure on the business community to make the needed fixes, while assuring the public that the matter was being dealt with. These messages were interpreted quite differently in some circles, particularly those already convinced of impending apocalypse.

Rather than spawning a wholly new sort of conspiracy theory, Y2K generally served as a new phenomenon onto which preexisting conspiratorial views could be projected. Those who had anxieties about the growing power of computers, concerns over a coming “cashless” society, anticipation of the biblical apocalypse, fear of impending martial law, and warnings of a coming “New World Order” found ample material in Y2K to feed into their roadmaps to oblivion. While the technical specifics remained opaque to non-technical audiences, the fact that experts and government officials were so concerned provided a legitimate foundation atop which the conspiratorially minded could build.

For some of the more religiously inclined, Y2K mapped easily onto already existing concerns about technology. Nearly a decade before de Jager wrote “Doomsday 2000,” Noah Hutchings and David Webber of the Southwest Radio Church were warning of the demonic power of computers and of the fact that “if the computers were suddenly silenced the world would be thrown into chaos.” (Which, in fairness, is a rather prescient foreshadowing of what many were predicting might happen with Y2K.) Many prophetic writings about computers referred to the biblical passage in Revelation 13, which describes how the antichrist would force all to have a mark “in their right hand, or in their foreheads,” without which none would be able to buy or sell. Grant Jeffrey, a prolific writer on Bible prophecy, warned his readers that “advanced computer technology… have made the fulfillment of the 666 Mark of the Beast control system possible.” Once Y2K awareness had set in, he warned that “those people dedicated to creating a New World Order” would achieve their goals by exploiting a crisis of “such vast proportions that no nation, on its own, could possibly solve it.” He proclaimed that “the Y2K computer crisis provides a unique opportunity.”

Other responses came from libertarian and anti-government sectors. Typical of this is John Zielinski’s Surviving Y2K: The Amish Way, which mixed a romantic argument for transitioning to the lower-tech lifestyle of the Amish with warnings that the government was run by satanic pedophiles. The pseudonymous Boston T. Party noted in his book Boston on Surviving Y2K that he was expecting “a severe crash, resulting in depression and martial law.” Alongside these works were numerous survival guides, cookbooks, and investment manuals to inform anxious readers on everything from exactly how much toilet paper to buy, to how to get rich off Y2K by buying gold. It should be noted that some left-leaning groups were also anticipating major disruptions. The Utne Reader, nearly a decade after it published Chellis Glendinning’s “Notes toward a Neo-Luddite Manifesto,” published and distributed its own Y2K Citizen’s Action Guide — though the focus was on community preparedness, not individual bunker building.

Plenty of media coverage focused on those expecting the worst. Certainly, these individuals are part of the story of Y2K. Nevertheless, framing all those concerned about Y2K as doomsayers to be ridiculed was unfair, and risked missing the deeper source of many of their concerns. Some saw Y2K as the gateway through which a biblically predicted cataclysm would enter; others saw Y2K not as reason to prepare for the end times, but to prepare to help those in need. Shaunti Christine Feldhahn’s “Joseph Project” sought to get churches organized to provide emergency services in the wake of Y2K disruptions. Though Y2K did present an opportunity to proselytize, Feldhahn also emphasized that it was a time “to gently, lovingly, and sincerely help others in our community.” The Rev. Dacia Reid, in a sermon she delivered at multiple Unitarian Universalist congregations, argued that metaphorical “lifeboats” were needed, just in case disruptions lasted longer than expected. “The thing about lifeboats is that they are not individual,” she said. “They are actually communal.”

In the aforementioned Utne Reader guide, Paloma O’Riley — whose “Cassandra Project” sought to create a network of community readiness groups — highlighted that “individual preparedness is for those who can; community preparedness is for those who can’t,” emphasizing the need to think of those most at risk. Testifying before the Senate Special Committee, O’Riley drove this point home, lambasting sensationalized media coverage and unclear government messaging, noting that “preparedness helps make individuals and communities more resilient.” Much of the community preparedness talk around Y2K framed this readiness work as comparable to insurance, or getting ready for any kind of potential disaster, like a hurricane or blizzard.

Many of these reactions to Y2K also spoke to a recognition of how dependent life had become on often opaque computer systems. As Feldhahn put it in her book Y2K: The Millennium Bug, “most of us don’t realize just how fundamental computer technology is to the smooth functioning of our entire society.” In her sermon, Rev. Reid had noted, “Our lives are computer orchestrated in virtually all areas, from national security and air traffic control to catalog ordering and computerized auto repair diagnostics and parts inventory.”

Even Grant Jeffrey’s Millennium Meltdown expressed the belief that Y2K could “lead to a sober reappraisal of the pros and cons of accepting every single computer technological advance without considering carefully its impact on the quality of life of those affected by the new system.” While those who don’t share his religious perspective might scoff at much of his writing, this speaks to a desire for a serious reckoning with the costs and benefits of computerization that would be at home in many of today’s debates. Webber and Hutchings’ book Computers and the Beast of Revelation is seldom listed among the classics of computer criticism, but it includes an insight from the computer scientist Joseph Weizenbaum: “Take the great many people who’ve dealt with computers now for a long time… and ask whether they’re in any better position to solve life’s problems. And I think the answer is clearly no. They’re just as confused and mixed up about the world and their personal relations and so on as anyone else.”

Though these works varied in the level of calamity they were predicting (and in the glee with which they anticipated the downfall of civilization), they were united in the sense that they did not need to convince readers that Y2K was real. To fulfill that task, they could simply point to technical experts, and quite often to pronouncements by government officials. Granted, there is a difference between citing experts and citing them correctly. Many Y2K experts were self-consciously hyperbolic, deliberately striking a gloomy stance in the hopes that doing so would trigger action. The experts’ warnings were couched in numerous “ifs,” the underlying argument being that these worst-case scenarios could be avoided if the necessary repairs were undertaken. Y2K conspiracists tended to ignore the “ifs,” or to qualify them by noting it was unlikely the repairs would be completed in time.

In his opening statement at a hearing on the topic of community preparedness, Senator Robert Bennett stated, “I am not a doomsayer: Y2K will not be the end of the world as we know it.” He went on to praise the number of people hard at work and the progress they were making, but added, “on the other hand, I am not a Pollyanna: I cannot say that everything will be fine.” (He ruefully acknowledged the glut of “rumors and disinformation about Y2K” that could be all too easily found on that “new medium” of the Internet.) Bennett advised the American public, “if you’re going to stockpile anything, stockpile information,” also suggesting that it wasn’t such a terrible idea to have some non-perishable food and extra batteries for the flashlight — not specifically to be ready for Y2K, but because it was always wise to have a bit of extra food and to make sure the batteries in the flashlight aren’t dead.

As 1999 came to a close, President Clinton’s Y2K Conversion Council distributed a straightforward “Y2K and You” pamphlet that sought to carefully walk the line between assurances that everything would be okay, and a recognition that problems might occur. The guide recommended having “adequate clothing, tools and supplies, flashlights, batteries, a battery-powered radio, and a first aid kit,” but it carefully emphasized that these were just the standard sorts of things that FEMA and the Red Cross advised all people to have in their homes. As the guide put it, in a reminder that is both truthful and a little unsettling, “there are no guarantees any of us will have power tomorrow, let alone on January 1.”

By then, those who had spent years worrying about Y2K’s potential impact had reason to take a deep breath. While Y2K has its roots in decisions made in the 1960s, the work of fixing it largely played out in less than a decade. Y2K moved from an issue of awareness, to panic, to amelioration with striking speed. This meant that what a technical expert warned of in 1997 could be out of date by 1998. And by the time a Y2K conspiracist was quoting a technical expert, that expert could have adopted a significantly more optimistic stance. De Jager published “Doomsday 2000” in 1993; by June of 1999 he was saying, “For the most part, we’ve done what we’re supposed to do to fix the problem, and it’s time to lighten up… society won’t fall apart.”

Those early engineers who were programming dates using six figures instead of eight were aware that what they were doing would create a problem come the year 2000. But they proceeded based on the all-too-common human assumption that someone else would fix it before the deadline hit. Besides, the idea that the code being written in the 1960s would still be in use by the late 1990s seemed unbelievable at the time. By the 1990s, a legion of IT workers and project managers had mobilized to fix the problem, and though the actual work was not always the most technically complicated, the scale of the challenge (alongside the looming deadline) required a herculean response. None of this is quite as exciting or memorable as saying that the sky is about to fall.

Those who were actually involved in this remediation work and expecting some “bumps in the road” really did see the bumps they were expecting. In its “aftermath” report, the Senate Special Committee featured a lengthy appendix with information on hundreds of computer problems that had actually occurred, but noted that “most have been quickly corrected.” Given that companies that encountered and quickly fixed problems in the early days of 2000 had nothing to gain by publicizing them, “the full extent of Y2K problems will probably never be known.”

Those who had been secretly hoping to see planes fall from the sky were disappointed. Nuclear power plants had not melted down, banks had not deleted everyone’s balance — even though those were not the sorts of calamities that serious Y2K watchers were warning about. As time went on, what lingered in memory was the apocalyptic imagery that had graced magazine covers, not the stories of all the programmers and bureaucrats who had made sure the code was fixed. The disdain with which Y2K is remembered is closely bound up with the fact that, in almost all cases, the power did stay on when December 31, 1999 became January 1, 2000.

Y2K is the story of an economic problem (the cost of memory), which became a technological problem (the code that needed to be checked, corrected, and tested), which ultimately became a social problem (as the broader public tried to make sense of Y2K). When we remember Y2K today, what we usually wind up talking about is the fearsome ways in which it manifested itself as a social problem. With the privilege of hindsight, it can be easy to look back at all of the hubbub around Y2K and shrug at its silliness, but to do so is the opposite of learning the lessons it has to offer us. Y2K is not a story of a warning, followed by nothing, followed by barely anything going wrong. Rather, it is the story of a warning, followed by a massive amount of work in response to the direness of that warning, followed by barely anything going wrong because the work had been done. In the midst of so many crises that demonstrate the dangers of not taking action when the tocsin is sounded, Y2K is an example of what happens when the warning is heeded. It is also a reminder that people will remember the warning and the end result, but won’t often remember all the work that was done.

It’s worth recognizing the level of uncertainty that surrounded Y2K throughout much of the 1990s. While some rather unscrupulous individuals certainly capitalized on this uncertainty to make a quick buck off of people’s fears, the uncertainty itself flowed from government reports that continually emphasized that no one knew exactly what would happen, and from reputable sources advising people that it wasn’t a bad idea to have some extra food on hand and to make sure they had hard copies of their essential documents. For those who were still growing accustomed to just how intertwined their lives had become with the successful functioning of opaque computer systems, Y2K could be quite disorienting. It demonstrated clearly that people were living in a world where the things they depended on (from the lights staying on, to the grocery store staying stocked, to being able to get money from the bank) were themselves dependent on computer systems that could potentially be brought low by something as simple as two little numbers.

It can be tempting to remember Y2K as just another techno-panic. And yet, to remember it that way risks forgetting that such panic was a result of societies eagerly embracing computer technology in the first place. One of the things that Y2K revealed is that once you are reaping the benefits of computers, you are also at their mercy. While it wasn’t the first time computers had presented a major problem (or the first time computerization was publicly criticized), the scale of the problem briefly pushed a conversation about the pros and cons of computing into the mainstream. The irony is that while some hoped Y2K would result in a thoughtful reexamination of the benefits and dangers of computers — regardless of what happened on January 1, 2000 — the lack of calamity helped re-inscribe the faith briefly threatened by the crisis.

In the 1990s, Y2K served as a disquieting notice of how dependent life had become on computers. By the 2020s, this is easily overlooked. Today it is not uncommon to encounter debates about the pros and cons of a particular social media platform, or a particular new gadget, or some computer-exacerbated problem, such as misinformation. But what sets Y2K apart from so many of today’s computing-related controversies is that it wasn’t about Facebook’s algorithms or Elon Musk’s fantasies; it was about the less flashy, but far more significant fact that everything had come to rely on computers. Unlike contemporary debates that hinge on the question of where computing is taking us, Y2K asked those who experienced it to consider where computing had already taken them.

As the 20th century ended and the 21st began, the Senate’s Special Committee argued that Y2K was “an opportunity to educate ourselves first-hand about the nature of 21st century threats.” After all, “Technology has provided the U.S. with many advantages, but it also creates many new vulnerabilities.” Y2K was an event that forced people to reckon with those vulnerabilities; and, while the initial test was passed, they are still with us today.