This piece was excerpted and adapted from Being Numerous: Essays on Non-Fascist Life, published April 30 by Verso.

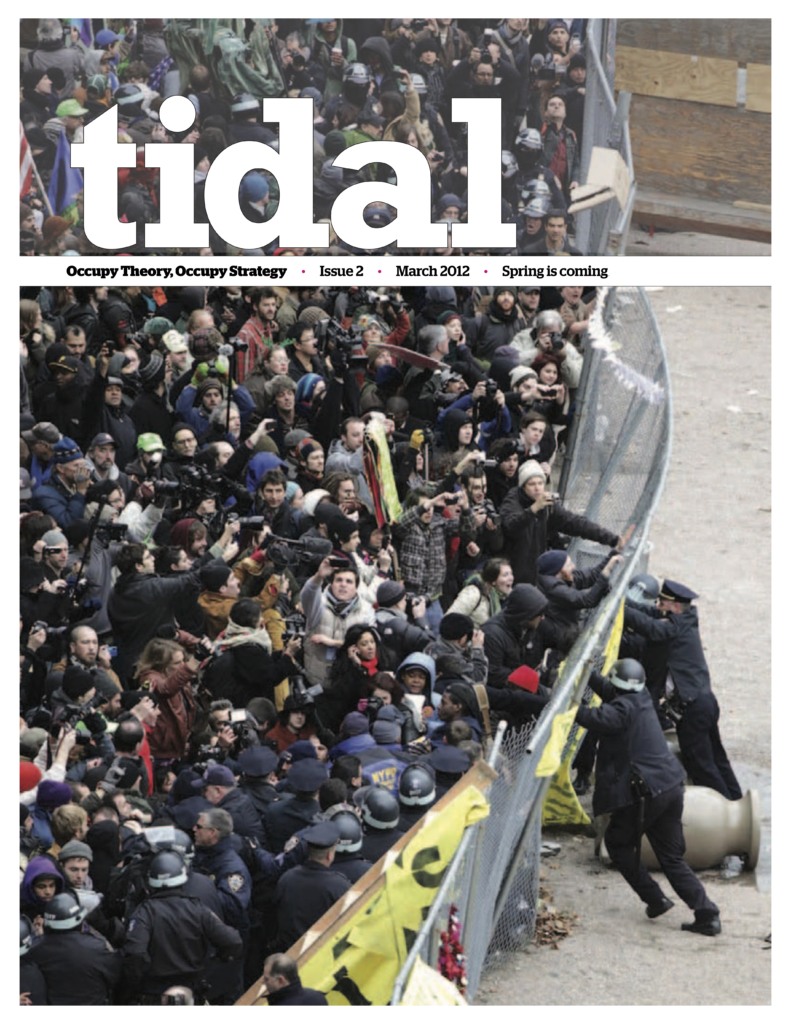

A photo from the sometime-halcyon days of Occupy Wall Street has come to haunt me. The image, which was used as the cover for the second issue of Tidal, Occupy’s theory journal, at first glance seems to capture a trenchant insurrectionary tableau. A massive mob of protesters appears on the cusp of breaking down a fence, held up by a measly line of riot cops defending the emptiness of Duarte Square, a drab expanse of concrete in downtown Manhattan. Look closer, though, and a different scene comes into focus: No more than a scattered handful of protesters are actually pushing against the fence. The rest of the crowd, pressed tightly against each other, hold phones aloft, recording each other recording each other for the (assumed) viewers at home. The fence of Duarte Square was barely breached that December day in 2011.

The photo captured the near knee-jerk proclivity many participants in mass protests have developed to document every action live over social media, on the assumption that doing so was inherently bold and radical: taking the narrative of protest into our own hands and onto our own broadcast devices, and refusing reliance on traditional media institutions.

Regardless of where you stand on the question of whether social media platforms have helped, hindered, or otherwise shaped protest movements (or all of the above), the Tidal image has taken on a different valence in the years following the Snowden revelations. For the phones in that photograph were not only a hindrance to the crowd’s purported effort to swarm Duarte Square; they were surveillance devices.

This much became undeniable by 2014 (at the latest): The devices and platforms we rely upon — to communicate, gather information, and build solidarity — offer us up as ripe for constant surveillance. The surveillance state could not be upheld without its readily trackable denizens. To sidestep our tacit complicity in this would be to fail to recognize how deep our participation in our own surveillability runs — it’s how we live.

It seems almost quaint (and this is no good thing) that just a few years ago we were shocked to learn the extent of our mass surveillance state. But the NSA leaks were, at the time, a revelation. They shed light on a fearsome nexus between the government, telecommunications companies, and tech giants. And beyond this, they offered a lesson in the challenges of fighting a system of control in which we are complicit.

There was a certain folly, but also a commendable optimism, in the immediate, outraged responses to Snowden’s leaks. Journalists and activists sought an object, a vessel, a villain. Who is to blame? Where are the bad guys? How do we fight back? There were obvious culprits in need of censure: Whether it was then director of national intelligence James Clapper, then NSA director Keith Alexander, Google, AT&T, or the PRISM data collection and surveillance program, we looked to blame someone or something we could isolate and locate. Politicians’ and activists’ efforts centered on top-down NSA reform and demands that tech giants be more transparent. In doing so, they missed the nuance and gravity of what was at stake.

It was a prime moment for bipartisan pantomime. Democratic and Republican lawmakers came together in performative outrage to demand an end to the NSA’s bulk collection of Americans’ communications data. The preposterously named USA Freedom Act, signed into law in June 2015, gained traction primarily on this point. It proposed some limits on bulk data collection of Americans’ communications, but it also restored some of the worst provisions of George W. Bush’s 2001 Patriot Act. The Obama White House assembled advisory committees who duly issued lengthy reports and promised more reviews to come. Perhaps worst of all, scrambling for position as the “good guys,” tech leviathans including Google and Facebook pushed publicly for greater transparency. It seems laughable now that Facebook’s opaque policies and products may have helped sway an election; nevertheless, every week still brings a new promise of “transparency” from the great clouds of Silicon Valley.

The fight for bold executive and legislative reform of state surveillance came to little. Government agencies still use programs like PRISM, launched in 2007, which authorizes the NSA, in concert with pretty much every major tech company, to demand vast reserves of stored data, including our private communications, without warrants. None among the programs revealed in Snowden’s trove has really stopped.

By focusing on legible seats of power, activist groups and outraged political players largely sidestepped the question of how surveilled subjects uphold — cannot but uphold — their position as surveilled. A lot of discussions about government surveillance were framed counterfactually: Would we have consented to our current level of mass surveillance had we known what we were signing up for as digital denizens? In 2014, James Clapper admitted that the NSA should have been more open with the public about the ubiquitous hoarding of their communications. But he doubled down: “If the program had been publicly introduced in the wake of the 9/11 attacks, most Americans would probably have supported it,” he said. Clapper couldn’t help but resort to a perverse conditional logic in which the public would have consented to what they could not, in fact, consent to. As Ben Wizner, legal adviser to Snowden and the director of the American Civil Liberties Union’s Speech, Privacy and Technology Project, commented in response to Clapper, “Whether we would have consented to that at the time will never be known.” We have not consented to our own constant surveillance, even if the way we live has produced it.

Since 2014, conversations about surveillance through techno-capitalism have shifted away from unconstitutional government spy programs and toward questions about how, and to what corporate and political ends, tech giants extract and use our data. Or, more precisely, how these corporations use (and produce) us qua data — the data, after all, is not ours. This discursive shift makes sense; to focus primarily on the NSA incorrectly frames contemporary surveillance as a problem of unwanted, oppressive government scrutiny. This is, no doubt, a problem — as anyone summarily placed on a no-fly list would attest. But the government programs Snowden revealed operate over a terrain where mass (and mutual) surveillance is already the norm, the baseline, of social participation: the offering-up of ourselves, as surveillable subjects, through almost every trackable interaction, all organized by a tiny number of vastly powerful corporations.

The extent to which we truly “want” and “accept” these conditions is moot. While we are unquestionably active participants in upholding a state of surveillance, to suggest that we are therefore consenting would be to overstate our choice in the matter. We are not all inherently reliant, as a point of economic necessity, on surveillance-enabling devices and interfaces (although many workers, like Uber drivers and TaskRabbit cleaners, are). However, participation in surveillance systems is inescapable for those who abide by the social and economic spirit of the now, because these Silicon Valley–owned networks and interfaces have become the stage on which the social, the intimate, and the commercial, even the political and the revolutionary, are enacted.

Paul Virilio, one of the most prescient thinkers of how technology (re)orders the world, talked about how each technology, from the moment of its invention, carries its own accident (its potential for accident) — it introduces a type of accident, a scale of disaster, into the world that had not existed before. As he put it, “When you invent the ship, you also invent the shipwreck; when you invent the plane you also invent the plane crash; and when you invent electricity, you invent electrocution … Every technology carries its own negativity, which is invented at the same time as technical progress.” The fact that we presume innovative advancement without damage is hubris worthy of Icarus. As Virilio saw it, technology containing its own accident is “so obvious that being obliged to repeat it shows the extent to which we are alienated by the propaganda of progress.”

Virilio’s framework rejects a dim binary in which we must deem our current and developing technologies “good” or “bad.” Rather, it demands a constant ethical calculus: We must ask of a technological possibility what potential accidents it contains and whether they are tolerable. It’s not always knowable, but it is always askable. And better asked sooner rather than later. As Virilio pointed out in 1995, high-speed trains were made possible because older rail technologies had produced a form of traffic control that allowed trains to go faster and faster without risking disastrous collisions. The accident had been considered. But “there is no traffic control system for today’s information technology,” he wrote then, and again, more recently: “We still don’t know what a virtual accident looks like.” He knew, however, that this accident would be “integral and globally constituted”; such is the totality of online networks’ enmeshment with everyday life, all at instantaneous speed. “We are pressed, pressed on each other / We will be told at once / Of anything that happens” — so the poet George Oppen foresaw in his great 1968 work Of Being Numerous.

Our engagement with the devices of the surveillance state goes deeper than the technological tools we use — indeed these are not simply tools but apparatuses. For philosopher Giorgio Agamben, an apparatus is not simply a technological device, but “literally anything that has in some way the capacity to capture, orient, determine, intercept, model, control, or secure the gestures, behaviors, opinions, or discourses of living beings.” As such, a language is an apparatus as much as an iPhone. He wrote of his “implacable hatred” for cell phones and his desire to destroy them all and punish their users.

But then he noted that this is not the right solution. The “apparatus” cannot simply be isolated in the device or the interface — say, the phone or the website — because apparatuses are shaped by and shape the subjects that use them. Destroying the apparatus would entail destroying, in some ways, the subjects who create and are in turn created by it.

There’s no denying that the apparatuses by which we have become surveillable subjects are also systems through which we have become our current selves, tout court, through social media and trackable online communication — working, dating, shopping, networking, archived, ephemeral, and legible selves and (crucially) communities. A mass Luddite movement to smash all phones, laptops, GPS devices, and so on would ignore the fact that it is no mere accident of history that millions of us have chosen, albeit via an overdetermined “choice,” to live with and through these devices.

“The Californian Ideology” — the dream (dreamed by a handful of rich, white men in the early 1990s) that the internet would be a democratizing force of decentralized power and knowledge — was always a myth born of myopic thinking, one which failed to take into account that the internet was born within, not beyond, the strictures of capitalist relationality and brutal social hierarchy. Public access to information expanded on a vast scale, but at the same time, consolidation of power over the information network was stunning. As writer and neuroscientist Aaron Bornstein has pointed out,

> Each year more data is being produced — and, with cheap storage and a culture of collection, preserved — than existed in all of human history before the internet. It is thus literally true that more of humanity’s records are held by fewer people than ever before, each of whom can be — and, we now know, are — compelled to deliver those records to the state.

When in 2009 then Google CEO Eric Schmidt said that “if you have something that you don’t want anyone to know, maybe you shouldn’t be doing it in the first place,” he exemplified the sort of Silicon Valley privilege that undergirds unfettered surveillance systems. This dangerous Google ethic forgets that not every individual is afforded the opportunity to make the details of their life, personal records, preferences, and histories available for public consumption without consequence. A highly paid Silicon Valley engineer may Instagram his deviance at Burning Man with abandon. But undocumented immigrants, sex workers, and incarcerated individuals, to name just a few marginalized communities, don’t necessarily have that privilege.

Beyond this, resistance to mass surveillance is not only an issue of what we may or may not want to hide but also of how our lives are organized and susceptible to social control, how we become, as Foucault put it, “docile bodies.”

For the most part, resistance to surveillance is framed in terms of privacy, be it insisting on better privacy policies from tech companies and the state, or encouraging better privacy practices among individuals. Such efforts may take the form of resistance through transparency: What is being done with our data? What do companies know about us? Or otherwise, that of protection through obfuscation: encryption services, onion routing, surveillance-thwarting makeup. The options are reformist and reactive, at best. Interferences, hacks, and ruptures in networks-as-normal, when carried out by nongovernmental actors, tend to be fleeting. Such is the asymmetry of power.

Our current, collective options for resistance illustrate the extent to which surveillance technologies are sewn into, and give shape to, the fabric of daily life. For those who partake in the benefits, pleasures, necessities, and inescapabilities of social media participation, surveillance is a “form of life” in the sense that Wittgenstein used the term: Surveillability is the background context by which these interactions and experiences are made possible. Surveillance is not epiphenomenal to social media life; it is its bedrock. Under late capitalism, that is.

This is what Oppen anticipated: “Obsessed, bewildered / By the shipwreck / Of the singular / We have chosen the meaning / Of Being Numerous.” He was not, half a century ago, hinting at totalized surveillance through social media, but he speaks to the interrelations undergirding our current condition. We participate in the social order; we avoid the “shipwreck” of our singular selves with which we are nonetheless obsessed, but we have not (yet) chosen to be collective, or communized, or united. We have chosen to be numerous: As online selves, we are enumerated as surveilled data points. We live by and through the numbers: the likes, the clicks, the follows. Crucially, though, Oppen wrote that it was “the meaning” of being numerous that we have chosen, and there is hope in the thought that we might, together, choose this meaning differently.

Through enmeshed digital and meatspace lives, millions of people have, at times, already chosen to be numerous in ways never before possible. Engagement on social media produces and demands complicated sets of relations, connections, truths, and illusions. And it has at times been fueled by, and in turn produced, revolutionary subjects. There is no question, for example, that Twitter shed a novel light on Iran’s 2009 Green Revolution, on the Tunisian revolution of 2010 to 2011, and on Egypt’s 2011 revolution. Social media were both a product of, and a factor in, creating the conditions of possibility for these uprisings. Tweets shaped their narratives because tweets were their narrative. Tweets called bodies into insurrectionary spaces, and bodies used Twitter to expand those spaces further. Almost every street protest I’ve attended for the last seven or so years has been to some extent organized through social media. The #MeToo hashtag fostered important discussions and connections about the ubiquity of patriarchy and sexual violence, while helping fuel concrete collective action taken by hotel and fast-food workers.

That does not mean we can talk of “Twitter revolutions,” as the media once reductively and absurdly buzzed about the Arab Spring. Hashtags as social movement tools have profound limitations (suffice it to mention #Kony2012), but so do all tactics. Social media’s usefulness in revolutionary struggle and protest (and they have been useful) further complicates the question of whether we can tolerate the “accident” — by our lights, in Virilian terms — of surveillance. Not to mention the “accident” that these platforms offer equal opportunity (with scant interest in oversight) for fascists to become numerous beyond a given locality too.

Can we tolerate that our digital habits and reliances contain accidents for us, which are desired outcomes for those who control the digital infrastructure? In a 2000 interview, Virilio said, “Resistance is always possible” and called first of all for the development of “a democratic technological culture.” As he put it: “Technoscientific intelligence is presently insufficiently spread among society at large to enable us to interpret the sorts of technoscientific advances that are taking shape today.” He was right, but the answer is not that we all learn how to code and hack (although that wouldn’t hurt). The problem is that now the technoscientific power over the infrastructure of our social media lives is even more consolidated and hierarchical than is corporate and governmental control over the infrastructure of the streets in a city.

It’s hard to see a way out of the dilemma. But this is not to say would-be resisters aren’t trying. Cultural critic Ben Tarnoff, for example, has put forward the idea of socializing our data, under the template of democratic resource nationalism, as a way to shift power (and the extractive value captured from the data we produce) from the tech giants back to the denizens of the digital commons. As he explained it in the journal Logic:

> Such a move wouldn’t necessarily require seizing the extractive apparatus itself. You don’t have to nationalize the data centers to nationalize the data. Companies could continue to extract and refine data — under democratically determined rules — but with the crucial distinction that they are doing so on our behalf, and for our benefit … In exchange for permission to extract and refine our data, companies would be required to pay a certain percentage of their data revenue into a sovereign wealth fund, either in cash or stock.

If the data were not privately owned — if we could truly democratically determine the rules that govern how companies extract it and for what — the social media sites of extraction would look very different.

We can’t talk of a “democratic technological culture” while Big Data remains undemocratized, and regulation alone won’t get us there. Tarnoff recognizes that democratization requires that data be used to benefit the public directly, rather than through the false promises of the corporations who currently benefit from a regime of capture and exploitation. His plan would use democratic control of data, on which late capitalism runs, to undermine capitalism. Marx would be thrilled.

Tarnoff stressed the difficulty and risks involved in such a reconfiguration of data: “Transparency, coordination, automation — if these have democratic possibilities, they have authoritarian ones as well.” Such a thought is, in itself, what it looks like to consider aloud the ethics of a possible technological future and the accidents it contains. I’m not suggesting that the nationalization of data is the one correct solution, or even a possible one; it is, as Tarnoff notes, “delightfully utopian.” But it is, at the very least, a vista to a “democratic technological culture” — one to which we might be able to consent, in great numbers.