“I believed it was my duty to save Josie, to make her well.” So states Klara, the robot narrator of Kazuo Ishiguro’s 2021 novel Klara and the Sun. In the future world it depicts, “artificial friends” like Klara can be purchased to administer physical and emotional care, in this case for a terminally ill teenager named Josie. Dutifully, Klara greets Josie in the morning, watches over her studies throughout the day, and accompanies her at every meal. She lingers outside the girl’s door while Josie sleeps, attuned to any disruption of the rhythm of her breathing.

In detailing Klara’s performance of care, Ishiguro doesn’t clarify whether her actions stem from a human-like sense of empathy or simply from an obligation instilled in her programming. That is, he doesn’t explain whether Klara is capable of the reciprocal, complicated, and distinctly human variety of care described by Christina Sharpe in In the Wake: “a way to feel and to feel for and with, a way to tend to the living and the dying.” Ishiguro doesn’t make clear if Klara can feel at all, if the characters in the novel can understand her feelings, or if we as readers can feel with her. Instead, Klara is depicted ambiguously, as an observer of reciprocity rather than a participant in it. “There was something very special, but it wasn’t inside Josie. It was inside those who loved her,” Klara says at the close of the novel. With this, Ishiguro sets up a framework that would render the Turing test obsolete: For a machine to pass as a person, it must not just be mistaken for human but be loved as one.

Such writing suggests a different way of approaching the coming wave of nonfictional care robots. As a recent BBC report details, robots have been deployed in nursing homes and schools in Japan to deal with an aging population and staffing shortages. Similarly, in 2019, Time magazine reported on the use of such robots in an elder care center in Washington, D.C. In many instances, these “socially assistive” robots serve as pet-like companions that can sometimes double as video-conferencing interfaces for elderly patients, many of whom have dementia. For such robots, the ability to simulate and stimulate emotion is central to their function. But does that mean they are capable of providing care, understood as a necessarily reciprocal connection?

Public health specialists and technologists already predict the widespread use of care robots as populations age and the need for elder care grows. Some critics have deemed care robots inhumane, claiming that, because robots can’t feel, the normalization of “artificial” care strips patients of dignity, privacy, and important mutual human relationships. But the possibility of care is not a one-sided question of the AI’s technological capabilities alone. Care occurs not only within a face-to-face dyad of caregiver and care receiver but also as a series of rituals performed throughout a networked community. By situating care within a network of relations, robotic caregiving may also drive a re-evaluation of how feeling (feeling for and feeling with) is measured. The emotions that care robots evoke can sustain a community of care rather than cancel it out.

In 2001, a study published in The Gerontologist surveyed retirement-home patients and found that they viewed good care as affective, dependent on a reciprocal relationship between caregiver and recipient. Such interactions require the so-called human touch, which suggests both an embodied relation and an intangible quality of feeling: precisely what robots are presumed to be incapable of. In a 2015 paper, “Robots in Aged Care: A Dystopian Future?” philosopher Robert Sparrow argues that “machines lack the interiority and capacity to engage in such relations.” But this merely identifies limitations of present technology; it does not categorically rule out robots “feeling with” humans. If humans were to enter into relationships with robots (not simply one-to-one relations, but relations with care machines woven into the fabric of our communities), the recognition and respect Sparrow fears will be lost may still be possible, only in a broader context than the one he examines.

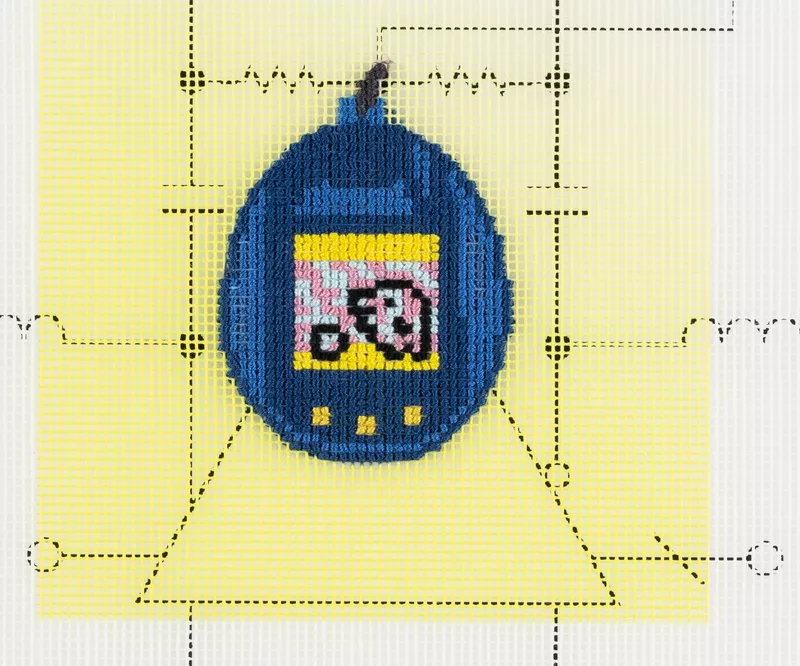

The fact is that humans already form emotional attachments with machines. Many children and adults, for example, developed an emotional attachment to Tamagotchi, among the first “relational artifacts” (to use Carme Torras’s term) to be sold as a consumer good. An ersatz animal that continually needed to be fed and tended to when sick, the Tamagotchi was designed to elicit care from its owners by training them to respond to its generic signals of need. That is, the toy did not have to call out personally to its owner by name to generate an attachment. People became emotionally attached not through a reciprocity of individualized recognition but through the routinized act of care itself, broken down into a series of mechanical operations on an interface. With this technology, the humans are programmed — in a robotic manner — to respond to a machine, to learn to care. The same idea has since become the basis for mobile apps like Pou, which provide entertainment through contrived care tasks.

In an analysis of elderly care in Thailand, medical anthropologist Felicity Aulino critiques the overidentification of care with a “conscience-led internal orientation” (that is, an agent freely directing its will) and points instead to ritual, whose value stems from repeated action rather than interiority. “A focus on bodily actions was necessary to unsettle dominant understandings of care,” she writes of her methodology. Aulino traces the development of Buddhist karma as a model for societal conceptions of care, finding that meaning lies in the everyday, ordinary actions performed for and by members of a community. Her research does not necessarily contradict Sharpe’s definition of care; rather, it demonstrates that the action itself may be as important as the affective intentionality presumably behind or expressed through it. The meaning lies in the bond, not the individual.

Such findings recast robots as tools in harmony with our need to care and be cared for. Machines are capable of this routinized mode of interaction — it tracks directly with how artificial intelligence is grounded in repetition and pattern recognition, operating without a concept of interiority but reliant instead on empirical assessments of the relations between things. Fictional representations of human-robot care interactions, in their accounts of being with rather than interior being, can help make this process relatable for humans, something they can emulate. Care doesn’t need to be volunteered or demonstrably empathetic so much as it needs to be reliable, routine.

Fiction, which is often conceived of as a model for interiority, also provides a practice of rituals: scanning the eye deliberately over the words, page after page, the mechanistic processes of extracting cues and clues from surface evidence. Through these activities we engage in an affective relationship with a material object, founded not on some emotional bond between reader and paper but on the networked routine of care the object extends. The pervasive appearance of humanized robot carers in science fiction demonstrates a cultural desire to feel with one another and care for each other beyond the strict limits and assumptions of a caregiver-care receiver dyad and their supposedly necessary reciprocal understanding.

Of course, robots haven’t universally appeared as care workers; they originated simply as a labor force. The word robot derives from the Czech robota (forced labor, as performed by serfs) and first appeared in Karel Čapek’s 1921 play R.U.R., in which the robots eventually overthrow their human captors. Čapek’s robots were stand-ins that allowed fiction to critique industrialization and class-based exploitation. This framework still applies, as carebots help illustrate how care work is exploited or devalued. Early in Ishiguro’s novel, for example, Klara attends a party at Josie’s house, during which children tease her and threaten to throw her in the air. As she is prodded to perform for them, her capacity for feeling is largely inconsequential; with their every desire met, these children seek entertainment in the emotional subordination of a machine.

Stories that depict machines as inferior can reinforce and naturalize hierarchical power structures as much as they critique them. But the appearance of robots in fiction need not be read strictly as a metaphor for dehumanized or devalued human workers. Artificial carers can be understood not just as replacements but as cyborgs, bridges between humanity and machinery. In A Cyborg Manifesto, Donna Haraway describes the cyborg as an entry point into a state of hybridity, where the boundaries between human and animal, human-animal (organism) and machine, and physical and nonphysical have been blurred. Shifting away from strict dualisms between “organic whole” and “fractured machine,” Haraway opens the possibility of the machine as more than the other.

In this framework, care can be separated from the notion of protecting the sanctity of the individual as defined by humanism. Science fiction can accordingly help restructure our language so that it draws from our interconnectedness with machines and with other beings. The pre-emptive dismissal of robotically performed care derives from a fixation on individualized human autonomy, with the lines drawn between self and other traversable only by labor with a definite giver and recipient. To redraw the boundaries as Haraway advocates would involve reorienting around a larger system, in which carer and cared for act as participants in a network of mutual relationships.

Rather than reify the boundary between human and machine, science fiction can serve as a mechanism for undoing that division, demonstrating how robots mediate a broader relation of care in a community. Robot from Robot and Frank, Robbie from the eponymous Isaac Asimov short story, Robot from the Lost in Space reboot, and Mia from the television program Humans are all examples of machines that don’t replace social relationships for the human beings they serve but extend them, broadening the bounds of human-human interaction by shifting expectations of how care, labor, and interiority are connected.

The robot is a media form that allows humans to care for each other across distances of time and space. In its many representations, and increasingly in real-world implementations, the care robot can be understood as an accumulation of ideas about care that humans have programmed into it, or of assumptions about care extracted from data on human behavior. Its execution of care may be limited and biased by that process of mediation, but those limits do not necessarily negate the care it makes manifest.

At the same time, the practice of reading science fiction, or fiction in general, offers a similar kind of training in emotional attachment and response. Through habitual reading, one can learn that attending to certain textual cues can be an emotionally rewarding experience of caregiving recognition. Reading fiction becomes a model for a reciprocal care relation that operates outside the face-to-face human paradigm: care not confined to isolated human-machine relations but mediated by machines, and expressed between humans who adapt mechanical processes for care.

This process is itself dramatized in Disney’s Big Hero 6, for example, which features a robot named Baymax who is designed to provide care until his patient releases him with the phrase “I am satisfied with my care.” Here care is positioned explicitly as a matter of the patient’s perception. While delivering medical and emotional attention, Baymax trains the patient to perceive care differently, or at least in such a way as to recognize his behavior as caring. As Baymax’s patient Hiro comes to feel for Baymax as an independent being, clumsy and capable of kindness, the pair appears to fulfill the condition of mutually beneficial recognition that philosopher Paul Ricoeur argues is necessary for human development. Baymax experiences the fulfillment of his mission and becomes integrated into a larger network of care through Hiro’s friends and family, who are transformed by the robot’s attention to physical and emotional cues. In this new band of beings, Baymax introduces a ritual of care that is adopted by a larger community; the robot does not replace “true care” so much as help articulate it amid a larger sphere of social relations. “Artificial” care is not a replacement for human interaction but an additional means by which humans can express care, both to the robot and through the robot’s provision of ritual.

Characters like Baymax and Klara offer new possibilities for relation, a philosophical step beyond the confines of code, of problem and solution, of artificial and genuine. These fictions stage meaningful interactions between person and machine, founded on programming principles that are elaborated through dramatic means. In these works, human characters value not only the labor but the laborers as well, regardless of whether they are human agents, and are altered by the model of care offered by non-human entities. Readers and viewers are also transformed by watching these relationships unfold, as we give care and are cared for through the process of media consumption itself. Such engagement trains humans in a quasi-robotic manner to recognize a feeling relation with a non-human object (the book, or film, or television program), just as these fictions depict humans learning to care for a non-human being. In some ways, these fictional human-machine relations are metaphors for the reading and watching process, articulating the transformation we undergo as an audience.

Science fiction, then, may offer a new model for care, one that is founded on ritual, presence, and an acceptance of robots as mediators rather than mere machines. By repeatedly engaging in a material-mediated care process, we too are transformed by such relations. “Hiro, I will always be with you,” Baymax says before sacrificing himself for his young companion. Likewise, Klara responds to Josie’s cries with whispers affirming her presence: “I’m your AF. This is exactly why I’m here. I’m always here.”