Despite its name, gamification has never really been about making experiences more game-like. If there were a common characteristic that defined all games, it would certainly not be the use of badges, achievements, and points as incentives for engagement. Games, if anything, share an embodiment of the spirit of play — a temporary suspension of the rules of life to make space for intensities of experience: levity, rivalry, concentration, joy. If historian Johan Huizinga — whose 1938 book Homo Ludens is one of the pivotal works of game studies — had the opportunity to define gamification according to his theory of play, he might have reserved the term for a “temporary abolition of the ordinary world” where “inside the circle of the game the laws and customs of ordinary life no longer count.”

Now gamification evangelists like Jane McGonigal advocate for games to be understood as fundamentally productive, offering a set of tactics to make life under neoliberalism appear more fun and addictive — a “magic circle” we should never step out from, even if we had the choice. The concept first gained traction at the end of the 2000s within game development and marketing communities, which saw an opportunity to use aspects of games to monetize the web. In 2008, before the word had a standardized spelling, a blog explained “gameification” as “taking game mechanics and applying [them] to other web properties to increase engagement.” In the Wharton School of Business’s popular online course titled Gamification, the instructor professes that “there are some game elements that are more common than others and that are more influential than others in shaping typical examples of gamification.” These elements are “points, badges, and leaderboards.” These offer scores that constitute “a universal currency, if you will, that allows us to create a system where doing one sort of action, going off on a quest with your friends, is somehow equivalent or comparable to doing some other sort of action, sitting and watching a video on the site.”

Many games have scores, of course, but typically they serve the limited purpose of determining a winner of a particular contest. Gamification takes scores as an exportable measure of qualities that are no longer internal to the game that has generated them. “Score” becomes just another word for data, a “universal equivalent” whereby life activity and behavior can be reckoned with in quantified terms. From this perspective, games are primarily a means of data production, not a more intense or rewarding form of experience. Accordingly, apps that convert activity into points are hardly concerned with improving the quality of engagement, nor are they limited to the task of encouraging it. Consider the data collection practices of prominent gamification apps such as Nike’s fitness tracker Nike+, the productivity role-playing game Habitica, or the language training app Duolingo. Gamification gurus praise these apps for how they import game mechanics, while watchdogs condemn them for privacy violations.

The use of gamification for data collection is not a secret. The professor of the Gamification course openly celebrates it: “One of the aspects of gamification is that you’re going to get lots of information potentially about your players. Information about who they are, their profile and so forth, but also tremendously granular data about what they’re doing. Every action they take in the game, potentially can be collected, and that’s a great thing.” Points, badges, and leaderboards have always been about the data and not the play.

This kind of instrumentalization is alien enough to the nature of games that it is possible to speak of the gamification of games themselves: when scoring systems are added to make behavior within a particular game quantifiable, commensurate, and exportable. Digital games now commonly have features that function more like app notifications: achievements, badges, or trophies that register outside the game world. It is no accident that almost every “hardcore” game for modern consoles (Call of Duty, Grand Theft Auto, Super Smash Bros., and so on) pings the user every time a new achievement is unlocked and displays this information in the user’s profile. For example, during the Covid-19 quarantine I’ve amassed 51 out of 93 possible “achievements” in Ubisoft’s Assassin’s Creed: Odyssey. These achievements, which include badges for “outwitting the sphinx” and for executing “100 headshots,” are viewable in the streaming platform Google Stadia’s “Trophy Room.” They have no function within the game; the player can’t use them for anything except to look back on the time poured into playing with a sense that it amounted to “something.”

Achievement notifications were not features programmed by game developers to meet players’ demands; they were a requirement codified by platforms. Microsoft Xbox’s development kit defines an “Achievement” as a “system-wide mechanism for directing and rewarding users’ in-game actions consistently across all games.” [Emphasis added.] In other words, achievements are not conceived as part of an individual game but as part of the larger experience of “gaming” in general. Game developers in turn must design games with this logic of achievement in mind.

Every major game platform defines achievements similarly, whether it is PlayStation, Steam, Xbox, or one of the new cloud-gaming platforms operated by Google, Amazon, and Nvidia. This ubiquitous implementation of “achievements” (data about players’ behavior and skill that is not confined to the game itself) reflects how games have been gamified: Achievements and rewards reduce the heterogeneous experience of different players playing different games to a common currency, allowing platforms to gather and compare data across all the games their systems can run. This data can then be used for ends that have little to do with the games themselves, exemplifying what Shoshana Zuboff, in The Age of Surveillance Capitalism, called “behavioral surplus.”

What could data drawn specifically from game-playing offer? In 2005, Google attempted to patent a system that would use neural networks, Bayesian inference, and support vector machines to uncover exploitable correlations between in-game behavior and untapped advertising opportunities. The patent listed a number of heuristics — avatar choices, gameplay style, time spent gaming — as potentially relevant to advertisers. The system could, for instance, “display ads for pizza-hut” if “the user has been playing for over two hours continuously.” In addition to inferring pizza appetite, the system would assess players’ personality based on gaming behavior. Such metrics as the time spent bartering instead of stealing within a game would serve as a potential indicator of the player’s interest in “the best deals rather than the flashiest items.” The “time spent exploring rather than completing levels” could indicate interest in real-world vacations. The patent is careful not to claim that these correlations actually exist; instead, it merely describes a system that could collect in-game data and then discover predictors of a player’s extramural wants, needs, and desires.

Classifying gamers based on the data obtained through games is now a multibillion-dollar industry known as game analytics. The company GameAnalytics boasts the ability to collect and analyze data on 850 million monthly active players across 70,000 game titles. Such data can be used to segment players based on their services, playing styles, locations, and demographics. But harvesting data from gamers to make broader inferences about people has a long history. So long as there are winners and losers, players can be ranked by their ability to win.

One such ranking algorithm, the Elo rating system, was developed in the 1960s to rank chess players. It computes the relative skill of players by weighting their wins and losses by the skill level of their opponents. Through this system, the individual chess match becomes a subset of a larger game that is not confined to the board but persists in ordinary space and time, comparing players to people they have never played and enforcing a general climate of competition. This same method can be applied beyond chess. Mark Zuckerberg infamously “gamified” his classmates at Harvard by applying this ranking algorithm to photos of female classmates that he illegally obtained.
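
The weighting described above can be made concrete with a minimal sketch of an Elo-style update. The function names are hypothetical, and the constants (a 400-point scale and a K-factor of 32) are common conventions assumed here for illustration, not values taken from any system discussed in this essay.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of player A against player B, between 0 and 1.

    Assumes the conventional 400-point logistic scale.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_ratings(rating_a: float, rating_b: float,
                   score_a: float, k: float = 32.0) -> tuple[float, float]:
    """Return both players' new ratings after one game.

    score_a is 1.0 if A won, 0.5 for a draw, 0.0 if A lost.
    k (assumed to be 32 here) caps how far one result can move a rating.
    """
    # The adjustment grows with how surprising the result was:
    # beating a stronger opponent earns more than beating a weaker one.
    delta = k * (score_a - expected_score(rating_a, rating_b))
    # Whatever A gains, B loses, so the pair's total rating is conserved.
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    print(update_ratings(1500, 1500, 1.0))  # evenly matched players
    print(update_ratings(1400, 1600, 1.0))  # underdog wins
```

Under these assumptions, a win between evenly matched players moves 16 points from loser to winner, while an underdog's win moves more. Because every result is expressed on one shared scale, players who have never met become comparable, which is exactly the property that makes the method portable beyond chess.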

The first video game to publicly rank game players with a high-score board was Space Invaders in 1978. This mechanic quickly became a popular feature among arcade games. In 1979, Star Fire and Asteroids were the first games to allow players to personalize scores with three-letter combinations. As Nick Montfort and Ian Bogost show in Racing the Beam: The Atari Video Computer System, the new mechanic of high scores codified a culture of competition among arcade gamers. The goal of the game was no longer simply to have fun or to win. “High scores” subordinated play to status, yoking “achievement” to zero-sum competition. For a point of comparison, in a Wired article from 2007, the co-founder of the Soviet Arcade Museum, Alexander Stakhanov, describes public rankings in video games as distinctly Western and, by extension, capitalist. In Soviet arcade games, public leaderboards were never a feature. Instead, Stakhanov says, “If you got enough points you won a free game, but there was no ‘high score’ culture as in the West.”

The capitalist gaming model won out, and rankings remain a prominent aspect of game analytics. But the diversity and volume of data collected through digital games has expanded considerably since the days of Space Invaders. Microsoft’s ranking algorithm TrueSkill 2 uses a number of metrics such as “player experience, membership in a squad, the number of kills a player scored, tendency to quit, and skill in other game modes” to develop a relative ranking of every player on its platform. In fact, every gaming platform is now designed to collect numerous data points, as their privacy policies specify. These include account information, payment information, user content, messages, contacts, device identifiers, network identifiers, location, achievements, scores, rankings, error reporting, and feature usage, and platforms maintain the right to share or resell the data to third parties. The data also include common “key performance indicators,” or KPIs, such as virality, retention, active users, and revenue per user, as well as data specific to games such as user inputs and time spent completing tasks, which, according to an analysis from IBM, include “time to complete levels, solo versus interactive behaviors, avatar selection, interaction style indicators, gender of avatar, game strategy behavior variables, game-related tweets, social network activity, language, and more.” PlayStation’s privacy policy states that Sony can collect “what actions you take within a game or app (for example, what obstacle you jump over and what levels you reach).” Similarly, Xbox’s third-party sharing notice explains that “information we share may include … data about your game play or app session, including achievements unlocked, time spent in the game or app, presence, game statistics and rankings, and enforcement activity about you in the game or app.” The choose-your-own-adventure narrative structure common to many games is more than a narrative device; it too is a measurement apparatus.

Of course, lots of devices, social media platforms, and apps already collect enormous amounts of data on users, far more than most of those users likely realize. But games can be designed to generate particular kinds of performance data under more carefully controlled conditions. As IBM’s analyst explains, collecting a range of data about players “is no different from the traditional customer view towards applying advanced analytics for player retention, churn, and marketing response efforts,” but stresses “the new variety of data” from games and the “tremendous volume and velocity at which it is generated.”

Because of the variety and magnitude of data collected, games are capable of extracting exploitable information about players’ values and habits. Numerous academic papers purport to have discovered statistically significant relations between users’ behavior in games and outside them. Several academic research papers have concluded that “a video game can be used to create an adequate personality profile of a player.” Similar studies of game-play data from multiplayer online battle arenas like Fortnite have found that it “correlates with fluid intelligence as measured under controlled laboratory conditions.” Other research suggests that “commercial video games can be useful as ‘proxy’ tests of cognitive performance.”

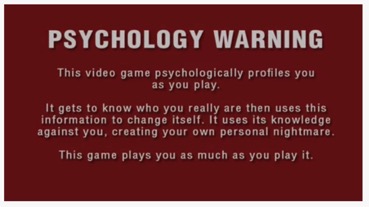

Captured data can also be fed back into the platform designed to produce it. In-game choices can be tuned to measure latent characteristics of players, such as their compulsiveness, their sociability, and their cognitive ability. Silent Hill: Shattered Memories turned the capacity for psychological profiling into a salient part of the game’s narrative, featuring an in-game psychologist who would use such techniques as the Myers-Briggs test to classify players by personality type. Much as TikTok tracks its users’ behavior to reshape their feed algorithmically, the game assesses players’ choices and behaviors (which restroom they enter, how long they take to examine photographs, etc.) to alter the game into the player’s own “personal nightmare.”

In general, as Google’s patent suggested, the combination of game choices, achievements, playtime, and purchase history is especially valuable to advertisers. Since video games are often expertly designed to keep users invested in them for many hours at a time, the data they yield may be especially valuable to marketers seeking to capture consumer attention. Games can serve as real estate for ads, a repertoire of techniques for behavioral surveillance and control, and an experimental ground in which these can be tested.

In 2019, industry researchers estimated the global in-game advertising market to be more than $128 billion. Not only can games feature virtual billboards, but in-game items or other products within the game world can be swapped out for brands more tailored to the user’s tastes. Users can be forced to sit through personalized ads in cut scenes as they move through the game. Another strategy is to use in-game data to estimate a player’s “lifetime value” or LTV — the monetary value a user is expected to generate for the game developer — and adjust the game accordingly, focusing on players with the highest expected value, nudging them to continue buying game-world tokens, character modes, virtual materials, and other items. Such microtransactions not only milk profit from players through their purchases; they can also indicate what sorts of mundane virtual tasks gamers are willing to put up with and what tasks gamers are willing to pay to avoid. Intellectual-property-law scholars have argued that microtransactions can be used to estimate players’ “intertemporal discount factor,” a financial metric originally designed to assess an investor’s preference for immediate returns or delayed rewards. If a player proves willing to overspend for instant gratification, this data about the player can be sold to advertisers, and may prove especially useful when supplemented with the other data collected by platforms. Microtransactions, then, are more than transactions; they are data on top of money.

Additionally, there are games specifically designed to test the aptitude of job applicants. Even before employers put faith in “game-based assessment,” the U.S. military funded the development of the online networked game America’s Army as a recruitment tool. In an interview between U.S. Army Colonel Wardynski and the self-proclaimed “world’s foremost expert and public speaker on the subject of gamification” Gabe Zichermann, Wardynski admits that the objective of America’s Army was not direct recruitment: “Our objective was decision space.” Wardynski explained that “if [the army] is not even in your decision space, forget recruits. How do I get into a kid’s decision space?”

Beyond their potential for manipulating individual players, gamified games are proving to be important playgrounds for artificial intelligence. Game spaces attract AI developers because the open and dynamic worlds of games have already been rendered discrete and quantified. In gamified games, the points, badges, and leaderboards originally designed to monitor, control, and incentivize human players can be repurposed by engineers to monitor, control, and incentivize machine-learning algorithms. Open worlds are well adapted to surveillance and control: their seemingly endless possibilities for exploration are matched by equally endless opportunities for data collection.

Companies with ties to Google and Microsoft, such as DeepMind and OpenAI, use games to try to develop strategic AI capable of playing complex open-world games. Game-playing AIs have already beaten the world’s top human players of traditional games like Go and video games like Dota 2. Microsoft’s Project Malmo is an effort to use open-world network games like Minecraft to help build intelligent agents capable of navigation, bartering, and collaboration. The purpose of this research is not purely theoretical. The defense contractor Aptima won a $1 million bid with DARPA to develop artificial agents that learn to work alongside human players in Minecraft by modeling the unique play styles of human players. With AI trained on Minecraft players, DARPA hopes to one day develop AI capable of monitoring soldiers on the battlefield. Amazon, Google, and Microsoft have now sponsored a series of competitions that prompt teams of developers to engineer algorithms capable of accomplishing various game tasks such as navigating randomly selected game worlds, mining a virtual diamond from the depths of Minecraft’s digital caverns, or scheduling a rail network of in-game trains. Much like the gamers in the worlds being experimented upon, winning teams in these competitions are awarded points, badges, and rankings on the competition platform AIcrowd. The platform gamifies AI development itself by turning engineering into a series of competitive rounds, measured by ranking and activity scores and rewarded with icons of gold, silver, and bronze badges.

These efforts make it evident that the principles of gamification assume that humans are no different from algorithms in how they respond to rewards. Like machine learning algorithms, humans in algorithmically controlled spaces can be nudged and reprogrammed to have better habits. Far from making life more game-like, gamification makes human behavior more manageable and predictable, provoked by feedback loops and captured as data.

Given the potential of games to harvest such valuable information, it is not surprising that Google and Amazon have been developing cloud-gaming platforms. What is surprising is that the discourse on “cloud gaming” often focuses on the user experience without stopping to ask who the end users are. Google’s Stadia has received a lot of backlash from gamers, but mainly because it’s expensive, not because it’s exploitative. Yet cloud gaming, like the other services Google offers, is about developing compelling alibis for the company’s main business of collecting data and selling ads. Google and Amazon’s move into cloud gaming integrates the data-generating power of games with their existing data-collecting empires. Games are important assets because of the unique affordances they offer to attention retailers: surveillance, control, and undivided attention.

An online marketplace, like a game, is a highly controlled yet seemingly “open world” where choices can be monitored closely. Just as in the two-sided platforms for goods and services managed by Google and Amazon, these choice environments can be engineered to nudge behavior in predefined directions or to collect user data. With psychological insight into the values, ideals, and fantasies that users are not so willing to admit in search queries, emails, and purchasing habits, games are valuable supplements to the data Google and Amazon already collect. Just as “hardcore” games have become more standardized with open worlds, achievements, and trophies, we can expect that the promised freedom and seamlessness of cloud gaming will come with increased surveillance and more penetrating monetization. If we accept the cliché “games are a series of interesting choices,” it is about time to start asking for whom.