You got storyline fever, storyline flu

It’s filtering how everything looks to you

Don’t you reckon it’s affecting your attitude?

Storyline fever got its hooks in you

—“Storyline Fever,” Purple Mountains

In April, mere weeks into the U.S.’s uneven adoption of stay-at-home orders, surreal scenes began unfolding at anti-lockdown protests across the nation. Four different rallies occurred in Michigan, where armed men in tactical gear stormed the state capitol building, and children danced in Trump and Obama masks like something out of The Purge. Hairdressers gave haircuts in defiance of social-distancing orders on the capitol steps, and someone hanged an apparent effigy of Michigan governor Gretchen Whitmer from a noose attached to an American flag. In Kentucky, too, a protester hanged an effigy of governor Andy Beshear outside the statehouse, along with the phrase sic semper tyrannis — “thus always to tyrants” — an apparent allusion to John Wilkes Booth’s declaration after assassinating Abraham Lincoln. At “Operation Gridlock Denver,” protesters in cars and trucks obstructed traffic, including access to medical facilities, and called counter-protesting nurses “communists,” telling them to go to China. At Re-Open Illinois, Confederate and Nazi imagery mingled with slogans such as “Give Me Liberty or Give Me Covid-19.” In Raleigh, North Carolina, a heavily armed protester with an anti-tank rocket launcher took a lunch break at Subway, as his friend posed with a wooden rifle for social media. Outside the Idaho state capitol, militia leader Ammon Bundy punctuated the absurdity by comparing compliance with lockdown orders to the Holocaust and slavery, while repeating the Holocaust-denier lie that Jews resigned themselves to their own genocide.

Such outlandish, conspiracy-influenced proclamations ruled the day at anti-lockdown demonstrations, outweighing more understandable concerns about job insecurity and unclear health messaging from federal and state authorities. Organized by astroturfing groups that imitate grassroots activism, these rallies were one part pseudo-event and one part political revival, a Great Awakening spectacle for true believers. The rallies provided a common banner for anti-vaxxers, anti-government militias, Holocaust deniers, 5G and viral bioweapon reactionaries, Boogaloo/Civil War accelerationists, and followers of QAnon, the everything-and-the-kitchen-sink conspiracy theory that functions as a connective tissue for fringe and far-right worldviews. In a stark indication of the danger that viral conspiracy theories pose to public health, this cacophonous group added pressure for states to reopen in spite of the inevitability of a record spike in infections (which is now coming to pass across the U.S.) and has encouraged the rejection and politicization of medical advice to wear masks and maintain social distancing.

The appeal of conspiracy theories is manifold: a rebuke of institutional corruption and incompetence, definitive answers to life’s ambiguities, the high stakes drama of good vs. evil, the self-esteem boost of supposedly “seeing behind the curtain,” the opportunity to give formless anxieties a human shape. On social media platforms, conspiracy beliefs can also become addictive and immersive — they become a gamified layer of everyday life, imbuing mundane objects, situations, and gestures with larger-than-life significance. Participants in conspiratorial forums can collaboratively and synchronously search for suspicious patterns and secure a sense of negative solidarity, as digital comrades in arms fighting an epic battle against the forces of darkness.

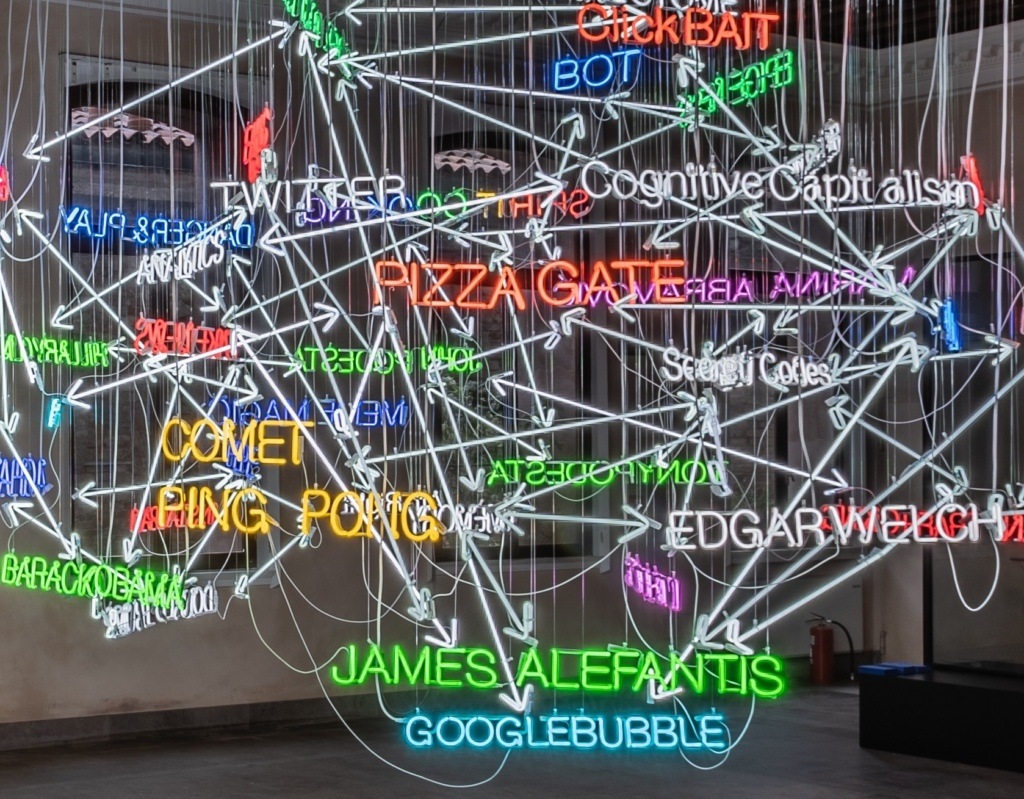

More specifically, one can see contemporary conspiracy theories like Pizzagate and QAnon as mimicking the structure of alternate reality games, in which authors and players collaborate in various media forms (message boards, email chains, social media platforms) to spread rumors, clues, and puzzles for participants to investigate together. The experience spills into physical space through meetups where participants can share information, printed pamphlets that extend the narrative, and “legend tripping,” which refers to searching for clues at designated geographic locations. One can find oneself “playing” an ARG without even knowing it, as these crowd-sourced fictions aim to blend seamlessly into the player’s reality. Such blurring of story and reality is intrinsic to the unique forms of fun and pleasure these games tap into.

This creative approach to interactive storytelling and cooperative play, which emerged with the new technological capabilities of the 1990s, seemed innocent enough when it was adapted for use in viral marketing campaigns for movies (AI, The Dark Knight), games (Halo 2), and albums (Nine Inch Nails’ Year Zero). These corporate ARGs sought to reclaim marketing dominance in a crowded attention economy. “Marketers were already encountering a growing problem,” Frank Rose explains in The Art of Immersion (2011): “how to reach people so media saturated that they block any attempt to get through.” But when ARG-like structures enter the political sphere, their implications can become dangerous, as not only coronavirus disinformation but also Q-inspired shootings and murder plots demonstrate. (The FBI has even labeled QAnon a domestic terror threat.)

In her 2004 essay “A Real Little Game: The Performance of Belief in Pervasive Play,” Jane McGonigal writes about the pleasures and benefits of ARGs in terms that, tellingly, could also describe conspiracy theories: “The best pervasive games do make you more suspicious, more inquisitive, of your everyday surroundings. A good immersive game will show you game patterns in non-game places; those patterns reveal opportunities for interaction and intervention.” In this sense, conspiracy theories are already games, and ARGs are already conspiratorial.

The game-like structure and appeal of conspiracy theorizing across an array of media has been readily weaponized by trolls, grifters, true believers, and provocateurs alike. Engaging in conspiracy culture is like playing a secret game based on insider knowledge, and it is this feeling — of joining an anointed community that has transcended the ordinary world — that propels Q’s current popularity. Perhaps when the medium is ARG, conspiracy thinking is the message. But revealing the game structures, pop media tropes, and affective rewards that shape movements like QAnon can help inoculate those attracted to such forms of play from full immersion in conspiracy culture.

The alternate reality game began in the late 1980s, when multidisciplinary artist Joseph Matheny pioneered the format with Ong’s Hat, a transmedia narrative about an interdimensional cult run by Princeton scientists at an ashram in the New Jersey Pine Barrens. Drawing on real people and places, Matheny crafted a series of documents as supposed evidence for the cult’s existence. He seeded them to the public through snail mail, posts on early internet bulletin boards (some of which can still be seen at alt.conspiracy and alt.illuminati), and Xerox copies planted inside independent weeklies and esoteric literature at libraries, book stores, and coffee shops. Over the course of a decade, the story’s media range grew to include CD-ROMs, books, video, and radio, including some interviews on the popular paranormal radio show Coast to Coast AM, which exposed Ong’s Hat to a wider audience of conspiracy enthusiasts.

Ong’s Hat was a unique hybrid of speculative history and speculative autobiography that participants could co-author by joining the investigation online. It was meant to be defamiliarizing and immersive; Matheny envisioned Ong’s Hat as a playground for experiencing synchronicities and then comparing them with other players’ — an exercise in intersubjectivity. But immersive storytelling and the intersubjectivity it constitutes can have a dark side, wherein communities can form around shared delusions that take on a momentum of their own. To Matheny’s surprise, some participants confused his fictional narrative with an actual conspiracy. When he broke character to remind them it was just a game, these true believers accused Matheny of being part of a disinformation campaign or running a mind-control experiment. He lost final say over the universe he invented.

When I spoke to Matheny, he partly attributed this reaction to the Coast to Coast segments attracting conspiracy-minded participants, to those who used Ong’s Hat to fulfill their X-Files-inspired “I want to believe” aspirations, and to proto-trolls looking for a pot to stir. Eventually these elements escalated their participation in Ong’s Hat to harassment, threats, and attempted home invasions, and Matheny was forced to discontinue the project in 2001. The term ARG, he says, began to be used on conspiracy and trolling forums around this time as a euphemism for gamified harassment campaigns. Matheny told me that he has since seen hardcore Ong’s Hat believers resurface in other conspiracy movements, including Weinergate, Gamergate, Pizzagate, QAnon, and the coronavirus cluster of 5G, vaccine, and quarantine paranoia. To Matheny, the 20th century U.S. tradition of conspiracy theories was “great folklore, great Americana,” to be taken figuratively. But he perceived a rightward and more serious turn in conspiracy circles at the turn of the century, with a crescendo in 2014, the year that some of his formerly left-leaning friends came out as neo-reactionary monarchists.

Matheny now refers to Pizzagate and QAnon as “dark ARGs,” signaling their family resemblance. “There was a bonding that happened, and probably a cooptation of troll culture into conspiracy circles,” he says. “This converged with fundamentalist people who were also doomsday preppers, and they had all adopted trolling behaviors, speaking in bad faith, giving circular arguments. All these weird subcultures have come together. How it happened was gradual. There wasn’t a puppeteer, but there are definitely people who took advantage of it. Breitbart, Bannon, Spencer.” This convergence of fundamentalism and trolling in conspiracy culture proved to be a toxic brew, facilitating zealous delusion and selective insincerity simultaneously. “The irony helps these people sidestep criticism,” Matheny says. “‘I’m just kidding, I’m just trolling.’ So I shouldn’t take anything you say seriously? Then they react with anger. And now there are movements being crafted to take advantage of this.” Irony serves as a gateway to belief as well as a source of plausible deniability, the same dynamic that can lead anti-PC trolls to become actual neo-Nazis. As George Orwell put it, “He wears a mask, and his face grows to fit it.”

Affinity through irony is useful as a Trojan horse for recruitment, indoctrination, and deflection of responsibility. But it appears to play less of a role in anti-vaxxer, COVID-denier, and QAnon circles than it does in the alt-right more generally, perhaps because these movements have developed a more distinctly religious character. Immersion in QAnon seems less about trolling outsiders than fully committing to a belief system. Marc-André Argentino reports in The Conversation that QAnon already has its own church, an offshoot of the Protestant neo-charismatic movement called the Omega Kingdom Ministry, “where QAnon theories are reinterpreted through the Bible,” and “QAnon conspiracy theories serve as a lens to interpret the Bible itself.” Beyond the winks, nudges, and “I’m just kidding” responses to being held to account for problematic speech, an earnestness animates the QAnon faithful.

QAnon’s syncretism of far-right memes, negative partisanship, moral panic, and authoritarian fandom provides a false sense of counterculture edginess, underdog team spirit, and righteous purpose for its ranks, as if the Empire has convinced itself it’s the Rebel Alliance. Q’s transmission of supposed insider information directly to his followers reifies, ritualizes, and dramatizes this formula. Given the attendant and communal pleasure in investigating new online revelations from Q (which come in daily hits, sometimes even more), it’s little wonder that dissenters seem like inauthentic plants to Q’s devotees. Their game-addicted enthusiasm and prolific social media activity are reflected in meme caches earmarked for information warfare on their social forums.

Displaying a kind of conspiratorial ecumenicalism, the QAnon faith accepts fringe theories of all stripes into its corpus, then disaggregates them back into the social network as dog whistles and memes about everything from the Titanic and Anthony Fauci to Jeffrey Epstein and Princess Diana. This tactic conscripts people who have not even heard of QAnon into sharing its disinformation, such as when anti-human trafficking advocates spread baseless Q theories about Wayfair furniture being used to ship children. Continued exposure to these memetic fragments provides a structure of plausibility for the mythos as a whole; this can then dragnet the initially unwitting participants, like those who shared the Wayfair memes, into curiosity about joining the movement.

In ARGs, players express their investment in the collective storytelling by acting in the “real world.” This same mechanism can also ground the conversion of conspiracy theorizing into a quasi-religious conviction. When the Pizzagate gunman went to Comet Ping Pong in 2016 looking to free child sex slaves from the pizzeria’s nonexistent basement, he was legend tripping along the lines sketched out by ARGs. The armed men who stormed the state capitol in Lansing to pressure Governor Whitmer into reopening Michigan can also be seen as legend tripping. Like Ong’s Hat believers investigating the Pine Barrens, the digital soldiers of QAnon could authenticate and demonstrate commitment to their belief by traveling to protest at a physical site of state authority. In Whitmer, the deep state cabal — the ubiquitous enemy in the QAnon corpus — could be given a name, a face, and a local address to be met with the threat of force.

A flurry of op-eds and tweets called these armed demonstrators LARPers, live-action role players, in part because their ill-fitted gear and weapons came across like poorly researched Halloween costumes, not battle-ready uniforms. It was also apt in the sense that there was a game-playing spirit to their engagement that sought to transcend itself and efface the difference between game and reality. LARPers don’t carry live ammunition, however, and they don’t try to impose their political will through intimidation. Costumes such as these should not be taken as temporary role-play but as a deliberate display of allegiance to an aggressive alternative political reality. “A lot of these groups are like cults,” Matheny says. “They have beliefs that border on religiosity … And when you contradict them, it’s like telling them Jesus isn’t real.” The game-like structure becomes a vehicle for something more, a self-propelling cult that rejects expertise and normative judgments of any kind unless they already align with the group’s articles of faith.

In their indebtedness to the 1980s moral panic over Dungeons and Dragons, far-right conspiracy movements stem from a tradition that is hostile to the very idea of role playing. Role play is contingent on navigating between real and imagined worlds, which affords opportunities for allegorical thinking, exploration of alternate identities and universes, and creative problem solving. But when this boundary collapses, we have what Joseph Laycock calls “corrupted play,” a term that helps explain dark or weaponized ARGs. As he explains in his 2015 book Dangerous Games: What the Moral Panic Over Role-Playing Games Says About Play, Religion, and Imagined Worlds,

Play becomes corrupt when it ceases to be separate from the profane world. Instead of playing for “the sake of play,” corrupted play becomes entangled in the logic of means and ends … Corrupted play is not about intensity so much as duration. The frame of the game is lost, and what was once voluntary becomes compulsory … The moral panic over role-playing games can also be interpreted as a form of corrupted play.

Just as QAnon does today, the moral entrepreneurs of the 1980s and ’90s Satanic Panic engaged in their own form of game (corrupted play) and constructed their game world by appropriating tropes from the very horror, sci-fi, and fantasy materials they rallied against. By taking RPG lore literally while rejecting its gaming devices — boards, dice, cards, figurines, and the design of maps, plots, and characters — the Satanic Panic’s moral crusaders created not an RPG but something more like an emergent ARG, a game that uses life itself as its frame, increasing the potential for corrupted play.

In This Is Not a Game: A Guide to Alternate Reality Gaming (2005), Matheny’s friend and collaborator Dave Szulborski codified the principles behind ARGs, the first of which is “TINAG: this is not a game.” This means that there should be no trace of mediation, no interface between the participant and the game — no logging off. Instead, the ARG should implant itself in the player’s pre-existing reality: “My initial goal when I first began thinking about an idea for a new game was to try and truly capture the TINAG feeling and have players wondering exactly what was real and what was not, and if they were even playing a game at all,” Szulborski writes. Unlike a video game, an ARG has no controllers, no heads-up displays, no navigational way-point systems. TINAG can be achieved only through technologies that users have already absorbed into their daily lives: email, blogs, social media, telephones, snail mail. Szulborski stresses the importance of a “puppet master” who plants a “rabbit hole”: “Quite often the rabbit hole takes the form of a plea for help on an internet message board or in an email someone receives. At the very least it will be a description of some strange or dangerous situation someone has found himself or herself in.”

QAnon has all these main ingredients of an ARG: tantalizing background lore based on a mystifying blend of fact and fiction, recurrent rabbit holes planted by a mysterious puppetmaster, and interactive authoring on digital platforms that already permeate players’ lives, culminating in an immersive “this is not a game” experience. In broad terms, QAnon lore is the expanded Pizzagate universe. It depicts Donald Trump as a freedom fighter in a secret war against a global cabal of “deep state” pedophiles who practice Satanic ritual abuse and cannibalism. Many Q followers believe Covid-19 is a cover for rounding up cabal members. Like a comic-book crossover, QAnon’s secret war is a remake of the Satanic Panic combined with the Democratic supervillains invoked on right-wing talk radio. The anonymously written Amazon best seller QAnon: An Invitation to the Great Awakening, however, betrays the most direct source of the conspiracy’s tawdry mythos: Monsters Inc., a Pixar movie about monsters who eat children’s fears. And Q’s backstory — a government operative with a Q-level security clearance, which grants access to top-secret nuclear-weapons information — is reminiscent of characters like Solitaire 1957 from The Majestic ARG.

Q drops clues, cryptic questions, and platitudes on anonymous imageboard sites such as 4Chan and 8Chan/8Kun (hotbeds of alt-right trolling and psyops) for believers to collaboratively interpret and spread to more mainstream venues like Facebook and YouTube. In a very confused metaphorical shorthand, Q followers call themselves “bakers” because they assemble Q’s “breadcrumbs” into “dough.” The breadcrumbs usually come in the form of vague and leading questions that have no definitive answers. The cumulative effect of all this cultural production is immersion, as bits of the narrative reappear and evolve on social media, blogs, conspiracy forums, self-published user resources (including merchandise, information warfare manuals, and self-help books), YouTube videos, and partisan news sources. The storytelling ecosystem is ever-present in believers’ daily lives, becoming a part of their lived experience. A tweeted Trump typo, “covfefe,” gets retconned as a coded prophecy for a Covid-19 cure. Awkward public body language, a call to arms. A celebrity’s clothing choice becomes a symbol to unpack. A random hand gesture, a proxy for unspoken words.

In conspiracy thinking, nothing is random; there are no coincidences, and the online hivemind has 24 hours a day and limitless digital resources with which to “prove” it, all in a thrilling co-authored narrative framework that rewards bias-driven pattern recognition. To encourage this apophenia, Q often asks, “How many coincidences before mathematically impossible?” Such immersion in a fabricated mythology is the envy of ARG design: so complete that it saturates understanding of any and all new information, effacing the distinction between empirical fact and spurious interpretation. Such was Szulborski’s goal, for example, when he designed his last game, Urban Hunt:

Because I wanted this game to blur the lines between reality and the game to an extent that had never been done before, it made sense to build the story around subject matter that did that also. This wasn’t too hard to do actually, as it seems our society is on a path to do just that — merge reality with fiction. Between reality shows that aren’t real and urban legends that get accepted as real because they are repeated endlessly on the internet, we have become a culture that doesn’t know where its reality starts and ends. So those two areas where reality was already blurred, reality television and urban legends, became the basis for the game’s story.

Szulborski foresaw the ARGification of the public sphere but, in this passage at least, seemed to recognize only its entertainment potential. The capacity for ARG-like structures to motivate and spread disinformation and propaganda has since become acutely visible. Every non sequitur and false connection of collaborative conspiratorial thinking gets papered over by the next one, ad infinitum. When the ARG is structured like a massively multiplayer online game through social media, this process metastasizes uncontrollably.

In his recent summary of the field, Conspiracy Theories: A Primer (2020), Joseph Uscinski cautions against the assumption that the right wing has a monopoly on conspiracy theories, because conspiracy-mindedness is more like a personality trait than a political ideology. Citing examples such as 9/11 and the JFK assassination, Uscinski demonstrates that moderates and left-leaning people also fall prey to conspiracy theories when those theories confirm their biases or simply stoke their imaginations. However, conspiracy-mindedness is a spectrum, not a binary; it’s a cognitive tendency toward faulty pattern recognition, low tolerance for ambiguity, and Manicheanism that can be inflamed by peer group, an insular media diet, feelings of isolation, and low self-worth. The far right has excelled at leveraging this phenomenon into dominance of online conspiracy culture.

When the authors of Network Propaganda (2018) analyzed 2.9 million stories from the 2016 presidential campaign and the first year of the Trump presidency, they found that “the right-wing media ecosystem differs categorically from the rest of the media environment” and has been “much more susceptible . . . to disinformation, lies, and half-truths.” Outlets such as Fox News and Breitbart have not only aired alt-right memes and conspiracies; they have also been “adept at producing their own conspiracy theories and defamation campaigns” independently. “The right-wing media ecosystem” is disproportionately “skewed to the far right and highly insulated from other segments of the network, from center-right (which is nearly non-existent) through the far left.”

Conventional ARG designers have to work hard to achieve this kind of diffusion effect, writing pre-existing media objects — books, films, news reports — into their narratives as intertexts, so that aspects of the game can physically manifest in the player’s life at any given moment. But for QAnon, deep state and Clinton body count conspiracy theories were already in play on prime-time, granting familiarity and ubiquity to the emerging belief system, and the favor is returned as the movement contributes to the disinformation feedback loop. This facilitates an inversion of the idea of journalistic merit, as sources that contradict the networked counter-reality get rejected as fake.

This asymmetry in the media ecosystem helps explain why there is no leftist equivalent of QAnon (for contrast, consider Zeitgeist director Peter Joseph’s rejected attempt to assume leadership of Occupy) as well as no comparison between the anti-lockdown protests of April and May and the protests against police brutality in more recent months. Black Lives Matter protests are led by community organizers who actually participate in their own demonstrations, not by astroturfing groups or an anonymous riddler who will never issue a concrete plan. BLM members use social media for messaging, signal boosting, and strategizing, but the ARG element of decoding puzzles with collaborative detective work is absent, as serious organizing would be impeded by the kind of digital goose chases that typify QAnon.

Unable to think beyond its own Wizard of Oz-like conception of power, QAnon invents or resurrects even more conspiracy theories to make sense of BLM: George Soros is imagined as the BLM puppet master, a regurgitation of the paid-protester meme, and to overcome the irrefutability of the George Floyd footage, Q followers have concocted a perverse fantasy that Floyd and Chauvin were actors in a staged event (much like the crisis actor theories that attempt to trivialize school shootings).

BLM promotes affective engagement, too, but with the flow of history and social progress, not cultish apocalypticism. These differences align with the conceptions of reality behind each movement: urban legends and reinvented blood libel about globalist Satanists, cannibals, and pedophiles haunt the QAnon imagination, while historical analysis of the effects of slavery, segregation, mass incarceration, the school-to-prison pipeline, and police brutality inform the platform of BLM. Consequently, unlike the unfulfillable prophecies and millenarian obsessions of QAnon, BLM pursues achievable and concrete policy changes. Q, instead, offers boogeymen, victimhood, an unrealizable revenge fantasy, and science denialism.

Despite all this, the aesthetics and solidarity-building aspects of ARGs can serve a beneficial role in protest movements and beyond, but only in the absence of the “corrupted play” dynamic that immerses players in impenetrable delusion as it has in QAnon. When conspiracy belief encourages such deep investment in a scripted cosmology, the non-gamed appears staged in its deviation from prophecy. In this regard, conspiracy theorists are not the red-pill takers of The Matrix that they aspire to be, but more like the cult of Realists in Cronenberg’s Existenz (1999). Those who hate the game are the most gamified of all.