“Where the wickedness lies,” cyberneticist Stafford Beer claims in his 1974 book Designing Freedom, “is that ordinary folk are led to think that the computer is an expensive and dangerous failure, a threat to their freedom and their individuality, whereas it is really their only hope.” If distrusting computers is “wickedness,” then the world has seemingly become far more wicked in the decades since.

Beer was an eclectic 20th century British theorist who achieved remarkable innovations in the seemingly disparate fields of capitalist management consulting and state-sponsored socialism. He believed that cybernetics (what he called “the science of effective organization”) represented a new frontier in institutional and organizational design, a powerful tool that would inevitably be taken up if not by the forces of democracy and freedom then by their enemies, authoritarians of either the corporate or government variety (if not both). As he asks in Designing Freedom, “What should be done with cybernetics? … Should we all stand by complaining and wait for someone malevolent to take it over and enslave us? An electronic mafia lurks around that corner.”

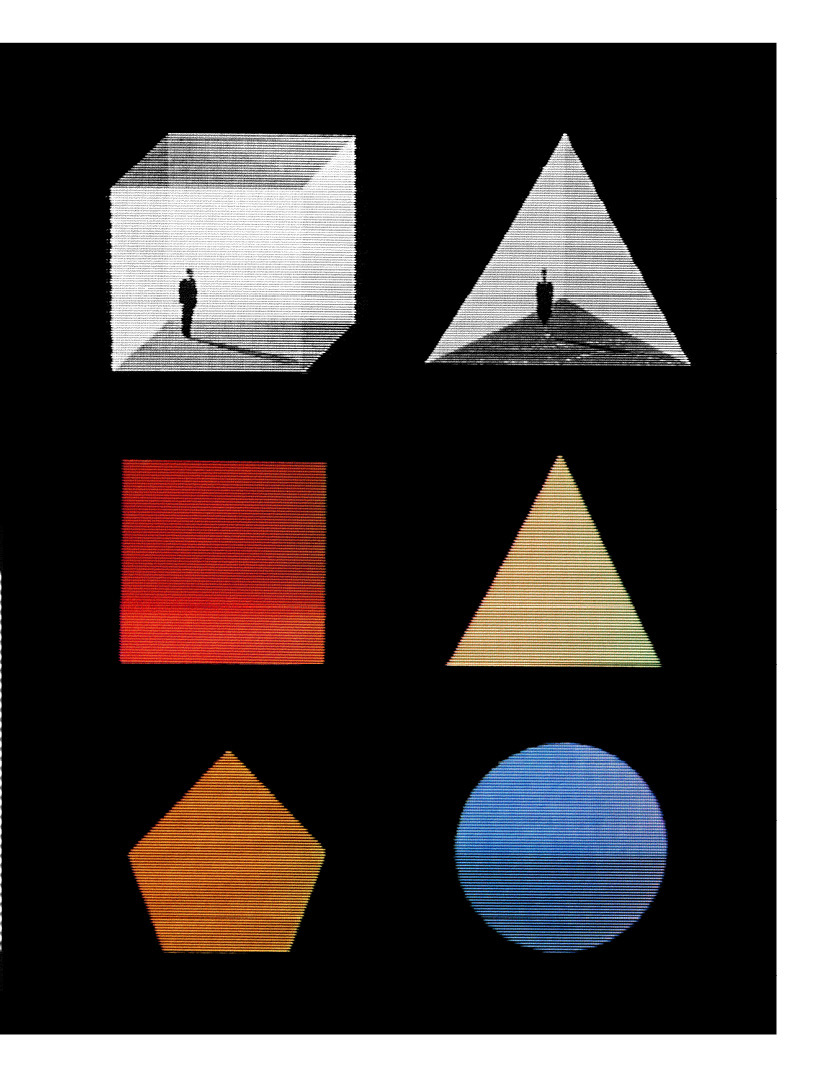

The “electronic mafia” is an evocative and not entirely inaccurate description of current tech company executives and the venture capitalists who fund them. Their enforcement techniques are more subtle than broken kneecaps and concrete shoes, but nonetheless, in a relative blink of an eye, it has become nearly impossible for most people to live a normal life without paying a kind of protection money in the form of consenting to the control technologies of surveillance, algorithmic feeds, real-name profiles and the like.

Today, Beer’s question — what should be done — often appears reframed as a “techlash” that rejects technology itself rather than the particular powers that currently shape its development. The electronic mafia loves this framing, because it guarantees their victory. They have inserted themselves so deeply into everyday life that it is difficult to imagine them gone, and the average person is so tightly optimized that they can’t afford to give up the cheap conveniences for an abstract principle. “Technology is neither good nor evil, nor is it neutral,” the saying goes, but if the only people successfully dictating how to make use of it are evil, well, it’s not hard to see how that plays out.

A better debate is how we should decide to use communications technology. Rather than accept the status quo — where online behavior is funneled into easily manageable forms to be better captured by a handful of firms — can new social arrangements accommodate what the internet makes possible? Can they enhance human freedom rather than curtail it?

Despite the flamboyant stupidity of many “Web3” projects and boosters, some observers are inclined toward generous readings because of the failures of the centralized, platform-centric web. This is where Stafford Beer can help: He explicitly rejects the centralization vs. decentralization framing — in Designing Freedom he notes that he has “seen the two policies advocated alternatively for the same institution by successive groups of consultants.” Instead, what matters to Beer is the relationships between different layers of a system: whether the actors at each level have appropriate, timely information from below and above, and the extent to which they are empowered to act. In other words, both capitalist and state socialist organizations can fail by reducing their human agents to mere machines, preventing the flow of information necessary to adapt to a complex and continually changing world. Rather than deduce a system’s politics by the predilections of its designers or its degree of centralization, it is more important to appreciate how it adjusts itself.

Beer was born in 1926 and joined the British Army in 1944, at the age of 18, rapidly advancing through the ranks. After World War II, he spent a few years in the Operations Research branch of the precursor to the Ministry of Defense, which would provide him with a particular perspective on the science of coordinating and managing human activity. During the Cold War, Western discussion of this topic was generally preoccupied by the grand debates between capitalism and communism, but the British military was still run on command-and-control principles. The key challenge it faced was timely information flow from the periphery to the command center so that it could effectively distribute resources and manpower across its sphere of engagement.

This style of systems analysis, Beer found, translated beyond the military sphere. After the war, Beer became one of the founders of academic operations research — a subfield of management science concerned with decision-making — and worked to optimize the operations of factories and larger corporate organizations. He saw huge success working with United Steel in the 1950s before leaving to found an independent consulting company and develop his theories for an even larger, societal scale.

Much of his work from the 1960s and 1970s, including his monumental Brain of the Firm, has been influential in academic research and business school curricula, particularly his “viable system model,” which was innovative in its orientation toward improving information and feedback flows within a company. But Beer is perhaps most famous for orchestrating the Chilean economic experiment under socialist president Salvador Allende: Project Cybersyn, the effort to establish an electronic “nervous system” that could rapidly incorporate economic information from across the vast distances of Chilean geography. The goal was to enable economic planning without the informational gaps and ruinous time lags that had hampered Soviet-style organization.

The Soviet economy was plagued by the so-called economic calculation problem: the difficulty in keeping track of which raw materials and skilled laborers needed to be sent to which factories and how to adapt to changing conditions. For example, say a snowstorm destroys a factory: that means a shortage of machine tools, which delays tractor repairs, which causes a wheat shortage. A market economy (as Hayek famously argues in “The Use of Knowledge in Society”) uses prices to transmit and process the information that addresses this problem (the machine tools become more expensive, so other producers start to make more of them in order to make more money, addressing the problem “locally”), but a command economy requires all this information to somehow filter up to be processed by the center.

Beer’s work with Cybersyn involved a full reorganization of the production side of the economy, giving lower layers of producers the flexibility to solve their own problems using their specific knowledge of time and place while also ensuring that they provide accurate and timely reports to the more central layers tasked with coordinating between local producers and with longer-term strategic planning. But as Eden Medina’s book Cybernetic Revolutionaries details, escalating crises during Allende’s tenure brought on by foreign financial pressure and internal unrest diverted time and resources away from Cybersyn. Even given these constraints, Leigh Phillips and Michal Rozworski argue in The People’s Republic of Walmart that Cybersyn was successful enough to demonstrate the viability of a planned economy. In their view, massive corporations like Walmart and Amazon today carry out economic planning at a scale far beyond Chile in 1973; modern technology plus the political will to implement something like Cybersyn would enable a genuinely new political economy.

Allende was ousted in 1973 after a military coup, putting a halt to the socialist Cybersyn project. That same year, Beer gave a series of lectures for CBC Radio that would become Designing Freedom. There he outlines an argument for how new information technology — at the time, just fax machines and a handful of computers less powerful than a contemporary laptop — enables humans, as the apparently oxymoronic title suggests, to “design freedom.” In Beer’s view, the capacity of computers to communicate instantaneously across distances and to perform inhuman feats of computation allows for institutions that would permit humans to use their full capacities, collectively and at scale.

In a strict command system (either corporate or government), most workers are reduced to using only a fraction of their human capacities: The division of labor means restricting the scope of action. So we have the overnight security guard who contributes only their eyes to the regulation of society, or the call-center employee who can only use their voice, restricted to a tightly specified set of options. Each of these people, Beer argues, is a complex system capable of an incredible range of information reception, mental processing, and physical action — not to mention specifically human qualities like empathy, creativity, and spontaneity — but the existing economic system ignores those capacities in favor of rendering the complicated and unpredictable world legible to the inefficiently designed organizations that run things.

From today’s vantage point, the language of cybernetics in the previous paragraph — humans understood as “complex systems” and in terms of “capacities” for society’s “regulation” — may appear unusual. But from about 1950 to the mid-1970s, it was influential among both intellectuals and the general public. Norbert Wiener, the mathematician who coined the term cybernetics in his 1948 book of the same name, was then something of a household name. For Beer cybernetics was essential if human society were to be organized at the scale of something like the U.S. or Walmart, or even the British Army.

Humans “regulate” themselves in many ways: norms, economies, governments, brute force. But as the absolute scale of our societies has grown along with our technical sophistication, we have delegated more of the task of regulation to large, hierarchical organizations, which sag under their own weight. As populations have grown — the number of human beings in the world has tripled in Joe Biden’s lifetime — these organizations have grown even more quickly, adopting more and more layers of hierarchy and thus bureaucracy. Rocketry provides a good analogy: There is a limit to how large and powerful liquid-fuel rockets can be because each additional booster stage adds more weight for the rest of the boosters to lift. Similarly, the increase of information production and circulation provided by computer capacity only amplifies the complexity in a system, not its efficient functioning. So for example, content moderation at the scale of Facebook becomes simply impossible; it’s not something they could fix if they just tried harder.

There is a fundamental trade-off between objective, transparent rules and an adversarial population of this size pursuing a range of radically disparate goals. Beer’s work sought to address and mitigate that trade-off, in search of systems that could be scaled up without generating even bigger problems. According to Beer, bureaucratic controls like physical surveillance and strict reporting requirements can enhance the scale at which organizations can carry out their activities, but this comes at the cost of slowing them down or narrowing their scope. For instance, McDonald’s can produce massive quantities of a very small variety of foods, but with less ability to improvise than an average short-order cook; FDA bureaucratic systems can verify the safety of new medicines but cannot react quickly to rapidly changing threats.

What was required, Beer claimed, was “variety engineering”: his term for the way that society deals with complexity. Key to Beer’s thought is that human and social systems are nested within each other, as larger systems emerge from the interactions of the units at lower levels. This framework allows us to respect the importance of scale without prioritizing one level over another: Like a fractal, each level is equally complex. So each human, unlike a bee in a hive or an ant in its anthill, is both a unique individual and a contributor to a robust society. Where the individual ant is essentially worthless and only the collective of ants really counts as an organism, humans and their society are co-equal and intertwined.

This is important because it establishes that human beings, in all our exquisite variety, are the ultimate source of complexity. Hence Beer can argue that humans must all provide the regulation of the system of human society, the way we live together. The more that lower levels of organization can adapt to local conditions, the more complexity there is in the system as a whole; otherwise, problems continue to accumulate that cannot all be addressed with universal directives from a central authority. This requires individual people to take responsibility, to maintain local organizational capacity, and to have sufficient slack that they can collectively solve problems without having to kick them up the chain of command.

In a poorly designed organization, individuals have strict purviews. In a centralized bureaucracy, the electrician can spot an obvious problem with the plumbing that will flood the entire building and shrug: not my problem. In decentralized neoliberal precarity, the electrician can shut off electricity for non-payment, spoiling the food and rendering the family unable to work their way out of debt and shrug: not my problem. In both cases, the electrician is prevented from adopting a regulatory role, from making local adjustments that would ultimately help the system function better.

The engineering problem faced by our hypothetical electrician has a clear mechanical solution: empower them to make the local adjustment themselves. They could then circulate the information both horizontally and vertically. Beer’s early work saw big gains from allowing factory workers more opportunity to mingle casually and keep each other abreast of goings-on in different parts of the shop rather than kicking every problem up the command chain and requiring a centralized solution. To scale throughout society, though, some message about the problem and solution should be circulated: If many buildings are facing the same problem, a sectoral manager could take note and figure out what is causing this trend, perhaps some materials shortage facing plumbers.

When it comes to the internet, many of us have adopted a pose of helplessness or indifference. Disempowered from fixing obvious problems like the dozens of entities tracking our behavior or the ads we have to see to conduct standard professional networking, we have outsourced the responsibility to centralized actors. “Whoever opts out of his or her regulatory role is robbing the total system of its power to be stable,” Beer argues. “We have robbed society of regulatory variety by our passivity.” The electronic mafia who have been pushing the “magnificent bribe” of convenience for technological dependency are more than happy to encourage that passivity. They’d like us all to spend our time buying things and consuming media. Beer would argue that it is key to stop doing that, and instead to take an active role in the regulation of the system.

What would this look like in practice? We should learn to valorize technological skills in the same way that previous generations of revolutionaries came to appreciate the practical skills of life. Number one: You (yes, you) should learn to code. It’s true that the phrase learn to code has been somewhat co-opted by the electronic mafia that it seeks to counter. But if the only people actively trying to build the internet have capitalist aims, we will of course end up with a capitalist internet. Also: Set up your own website. Use, and eventually help test or even develop, open-source tools that can help others assume their regulatory powers. Stop using the platform web, and work to build durable online communities designed for human rather than capitalist ends.

The platform internet has enabled collective action at speeds and scales never before seen. But these actions, from the Arab Spring to the BLM protests to the January 6 insurrection, are limited in what they can accomplish. In Beer’s terms, the systems are not using their full human capacity. Zeynep Tufekci describes the “tactical freeze” of these leaderless movements: They get a lot of bodies in the streets, but they are unable to coordinate on new tactics or bargain credibly.

Middle-layer organizations — above the family but below the state; things like fraternal societies, religious communities, and in particular labor unions — served a crucial role in 20th century democracy. Their decline is a major reason for contemporary dysfunction. We need to encourage the digital analogue of these organizations. There are, of course, already Twitter communities, Facebook groups, YouTube fan bases, but these are organized according to platform logic: ferocious competition for fast-twitch attention, with the goal of maximum audience segmentation to better target ads. These organizations are capable of coordinated online action (just go on Twitter and make fun of BTS), but platform affordances make sustained action and tactical diversity nearly impossible.

But some online communities — artist collectives, political activists, nerdy friend groups — are experimenting with new technological and social possibilities like private Discords and DAOs to address longstanding collective action problems that plague organizations that try to scale up without explicit hierarchy. Discord is one example of a platform where potentially large groups can break into smaller groups to discuss specific issues, to store information, and to flexibly devolve moderation with less risk of the context collapse and algorithmic attention gamification that happens on corporate platforms.

These organizations face the same fundamental tradeoff as the autonomously organized Chilean factories that Beer hoped to fit into the larger economic system. A Beerian effective organization would avoid the tyrannies of both structure and structurelessness, achieving internal stability while remaining flexible enough to adapt. The individuals comprising an organization are both empowered and responsible, acting to solve local problems in small groups and to communicate with the larger group when necessary. The larger group has an understanding of the shape of the entire organization, incorporates information from individuals and smaller groups, and can re-arrange itself if the need arises. And there is a parallel relationship between the larger group and the society as a whole: there are other groups, doing other things, and they can communicate about society-wide problems that arise. This sometimes means that the groups will be rearranged, that some groups should be recombined to form new groups.

One of the most important advantages of a market economy is the “creative destruction” entailed by some corporations going bankrupt, freeing up labor and capital for new purposes. This function would be served, in Beer’s model, by something like a management consultant (someone like himself: a cybernetician). But it is just as important that people can trust that this creative destruction does not endanger their livelihoods, that there is sufficient slack in the system. Throughout, the individuals comprising the society need to have access to the crucial information and to be capable of and willing to experiment, learn, and adapt.

The internet will not improve if the overwhelming majority of users continue to approach it solely as consumers. We need to accept the responsibility of producing and regulating the internet, to create and inhabit digital institutions that respect and empower the fullness of our humanity rather than reduce us to Like/Subscribe/Share cogs in the machine of digital commodity circulation.

Similarly, a better society will not miraculously arise through the processes of representative democracy. The only hope is to build it collectively through trial and error and the accumulated efforts of millions of wonderfully complex humans, rather than rolling out the welcome mat for the next generation of electronic mafia. Millions of young people around the world trying to build out alternative technological infrastructures seems like a better bet for improving the internet than voting for Joe Biden over Donald Trump. Our only hope is in our collective capacities to build and regulate a society empowered by modern communication technology. No one else is coming to save us.