I bought my first car in 2006 — the only car I’ve ever owned. I had moved to Southern California from far away, and soon realized that the bus wasn’t going to cut it. For $2000 I bought an ’89 Volvo 760, a totally uncool sedan, and made a friend drive it home for me because I was terrified. A few days later, though, I was chugging down the freeway, singing along to the radio as I passed strip malls and gas station clusters. It was a revelation: Driving through the California suburbs was like being at the center of so many stories I’d previously consumed only through television, stories, and songs. What’s more, I finally had an answer for that most common of security questions: “What was the model of your first car?”

In security question terms, I grew up lucky. I have a mother with a maiden name and a father whose middle name I know. I had a childhood pet. I don’t have a favorite high school teacher, but I did have an elementary school, and, eventually, a first car. With these precious facts, I have been able to navigate the sign-up pages of online bank websites and ensure a back-up method if I’m locked out of my Skype account. In other words, I’ve lived an easeful life when it comes to making the internet work for me and being recognized as a legitimate subject online. Not everyone has that privilege.

What is assumed of us, and what identities are we asked to assume, in order to move through the world of the internet? The basic request for a mother’s maiden name requires so much: It asks for a mother, married, who took her spouse’s name, with whom you have enough of a relationship to know her first surname. But what about those of us without one mother, raised by queer parents or grandparents or adopted or in foster care, or with mothers that never relinquished their “maiden” names? For those whose lives have followed an alternative temporal logic (for instance, what Jack Halberstam describes as “queer time,” an experience of chronology “in opposition to the institutions of family, heterosexuality, and reproduction”), or those living with economic precarity that precludes property ownership or stable employment, the basic act of verifying your identity online is a minefield of unanswerables and discriminatory narratives. Security questions rest on unexamined assumptions about what constitutes identity, and what biographical details can be assumed as universal, private, and memorable to internet users. They are a form of quiet disciplinary power; specifically, they help enforce the hegemonic subjectivity required to provide an effective and sustained labor force.

While security questions have declined in popularity as a mode of online identity verification since their peak in the mid-aughts, the newer security protocols that have replaced them are not free of hegemonic power. The waning of the security-question era was concurrent with the development of a new regime of labor and social organization — one characterized by omnipresent surveillance and ubiquitous work, shaped and accelerated by digital technology and the internet — which required a new form of worker discipline. Like the security questions before them, the new verification protocols are easy to take for granted. In looking at the ways we are asked to identify and verify ourselves online, we can catch a glimpse of the disciplinary tools that are shaping us into the kinds of subjects and workers required by the economic system in which we live.

Security questions existed as a method of identity verification long before the internet. In their essay “What is your Mother’s Maiden Name,” Dr. Bonnie “Bo” Ruberg tracks the long history of security questions back to 1850, when New York’s Emigrant Industrial Savings Bank (a financial institution founded for Irish immigrants who were often excluded from traditional banks due to xenophobia and nativism) implemented verbal test questions as an alternative means to verify identity, since many of their customers were illiterate and not able to provide a signature as identification. Correct answers to questions about personal biographical information came to replace the written signature, in a system where signatures were an ineffective means of proving identity.

Over the next 50 years, security questions became a popular means of verification across many banking institutions. They entered widespread use, as Ruberg describes, “in contexts far beyond the specific cultural and political pressures that inspired their original implementation,” and remained in popular use through the 20th century. The convention of the security question transitioned seamlessly to the early internet — another arena where alternative means of identity verification were needed in the absence of physical signatures or visual recognition. Online information security protocols were, and continue to be, heavily influenced by standard banking practices. Internet security researchers in the late ’80s and early ’90s recommended a combination of passwords and security questions about personal and family history: a direct update of the kinds of questions used by banks over the previous 100-plus years.

The suite of common security test questions has been remarkably consistent, in part because websites tend to outsource their authentication protocols to outside contractors, resulting in a common pool of questions used across a wide swath of sites. The “specific semantic and social content of security questions,” as Ruberg puts it, imagines a user who has followed the normative narrative of stable childhood in a single location, followed by investment in a heterosexual romantic relationship and the formation of a new nuclear family. The assumption of a linear flow of life, and of experience that hits a number of memorable markers (from childhood pet to first child), sets up a policing of identity that excludes many users. While online banks and insurance company websites may not be making life difficult on purpose for customers with different life markers, the widespread adoption of this narrow set of security questions informs the cultural expectations of, per Ruberg, “what it means to be a legitimate subject.”

This was the sensation that overcame me as I drove down the California freeway in my new first car — the feeling that I was finally becoming a legitimate subject, slipping into a self that was legible, acceptable, celebrated. Through the windshield, a rolling display of the locations and edifices that make up a describable life passed before me: schools, cars, single family homes, dogs in yards, office parks and office workers. Thinking back on this crystalline moment, the thrill of proper subjectivity I experienced wasn’t just about reaching a new milestone of normative temporality, but also about inhabiting a specific geography. The assumed subject of the internet security question is expected not only to have checked off the markers of normative heterosexual adulthood, but also to have emerged from a specific spatial logic, one shaped by car travel and detached houses. That is, the suburbs.

Beginning as it did in the 1850s, at the tail end of the Industrial Revolution, the era of the security question has been one of employment outside the home, of factory and office workers, of wage-earning men who are presumed to be supported by the unwaged reproductive labor of a wife at home. The suburbs (the American suburbs) exist in the cultural imagination as the zenith of this economic era.

The labor power required to support this era of capitalist production has been shaped through a range of techniques, from what Foucault called the “disciplinary institutions” like schools, hospitals, and prisons, through to the soft power encoded in social practices and bureaucratic institutions. As the internet developed and became an important tool for work, leisure, and communication, it became another site of social discipline. To access banking services, you named the city where you met your spouse. To buy insurance, you catalogued your childhood address and first job. To secure your email account, you listed your favorite teacher and father’s middle name. These repetitions and reinforcements played a role in the disciplinary regime that ensured the continuity of the workforce, and in doing so shored up the acceptable boundaries of what constitutes “identity” and what form a life should take.

The heyday of the security question was the 2000s, with adoption peaking around 2007, the year of the first iPhone. In 2010, many large companies including Facebook and Google began to phase out security questions as a core method for confirming user identity. The internet was evolving as the nascent platform giants began to amass great caches of data about our online habits and movements, and Google began its epic project of surveilling and digitally mapping the world’s streets (and books, and artworks, and…). By 2010, bots and hacks were a greater threat than individual identity forgery, and security questions are both hackable and often guessable in an era of data breaches and cross-referenced digital dossiers. The ubiquity of security questions was superseded by different forms of identity verification like two-factor authentication and CAPTCHA, forms that are generally less explicitly tied to the hegemonic enforcement of stable, linear, heterosexual suburban identity.

At the same time, the primacy of the suburbs as a locus of American identity has been waning, curdling into nostalgia and uncertainty after a decade of recession, foreclosure, and retrenchment. No particular location has emerged to replace the suburbs, perhaps because it didn’t need to. In the dazzling new era of global digital mapping and inescapable digital histories, the suburbs have become less necessary as a structure to enforce and reproduce a workforce. Instead, the internet could become your home. And why not: It already knew where you lived.

The authentication regime of the security question era (loosely speaking, the 1850s to 2000s) helped discipline us as subjects and workers in the age of the factory and the office. The ubiquity of smartphones and internet connectivity enabled the rise of platform economies and created the ability for work to leave the boundaries of the factory, coming with us to our homes, communities, and public spaces. While concerns about the fragmentation of labor into all realms of life are not new (Donna Haraway noted the emergence of these networked systems of control, which she called the “informatics of domination,” in 1985’s “A Cyborg Manifesto”), the efficiency and ubiquity of today’s digital work-world are. As networked digital technologies allowed the easy allocation of work to freelance “creatives” and UberEats contractors in post-recession America, more and more labor could be abstracted from an office or factory setting and dispersed into the fragmentary space of screens and servers.

In this context, the imperative to regulate social reproduction is less of a concern, as digital work is not dependent on the eight-hour workday and the reproductive labor of the family. This enabled a kind of freedom from the old biopolitics of the factory, with its need to control how a life is lived in order to ensure that labor is available to capital. The freedom comes at a cost, though, because now the factory is everywhere. And the newly massive surveillance networks developed by Google and other technology companies mean that remote work can be efficiently surveilled, and workers can be managed remotely. Ben Tarnoff recently framed this new collision of factory-era control and efficiency with the fragmentation and worker isolation of the surveillance internet as the “elastic factory,” a flexible workplace that combines “the labor regime of Manchester, stretched out by fiber optic cable until it covers the whole world.”

Although the reasons for the slow fade of the security question were more technical than (explicitly) ideological, the risks that created the need for new kinds of security protocol (sophisticated bots, data breaches) are part of the same technological context of datafication and constant tracking that undergirds the new regime of labor and social organization. Both are characterized by omnipresent surveillance and ubiquitous and distributed work, shaped and accelerated by digital technology and the internet. The new tools for preventing fraud and verifying identity online rely on the understanding that to be on the internet today is to be tracked, and access to the tools that the internet provides requires the conscious and automated disclosure of massive amounts of personal and behavioral data. This data is collected by Google and other data-collectors, and is used to help verify our identity via our digital “footprint,” as well as being sold for profit or used to advertise to us as we move through the internet.

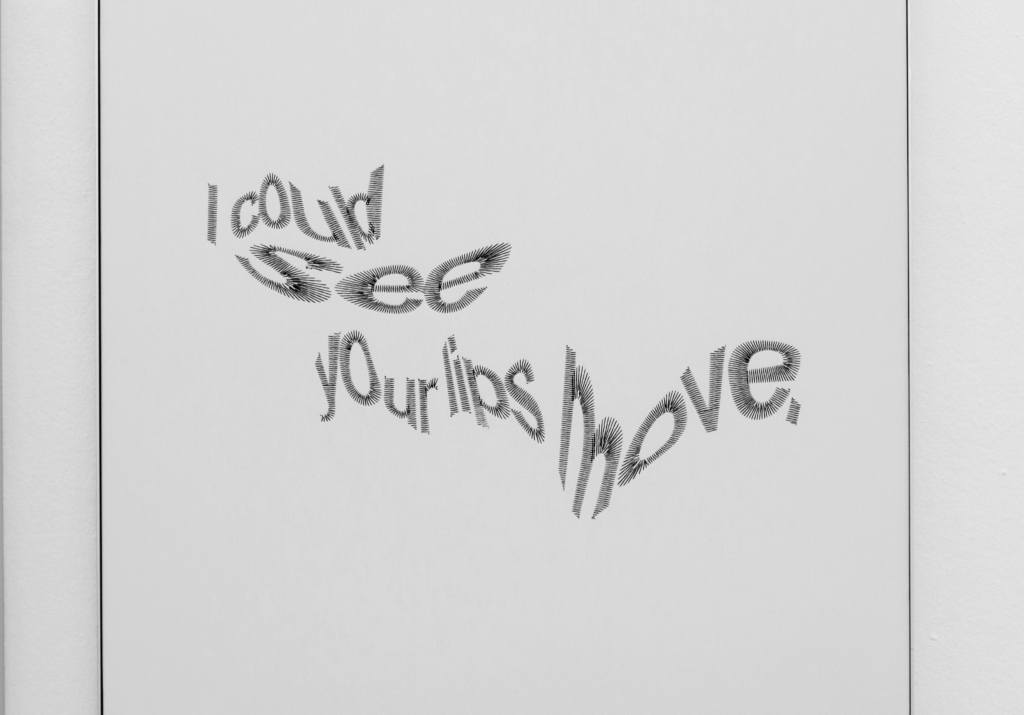

Along with two-factor authentication, which cross-references identity across devices or communication platforms, the epitome of the new wave of verification tools is the challenge–response test known as CAPTCHA. CAPTCHA, the “Completely Automated Public Turing test to tell Computers and Humans Apart,” was developed to counteract bot fraud, not by asking for personal identifying details, but by having users interpret and enter details about an image, usually a set of distorted letters and numbers, designed to be unreadable by a computer. Unlike security questions, CAPTCHA doesn’t help verify that “I am who I say I am” (Kelly Pendergrast, first car a white Volvo 760, mother’s maiden name [redacted]), but rather that “I am what I say I am.” That is: a human, an internet user, a digital worker.

“ReCAPTCHA,” a popular CAPTCHA deployment, was developed when a team of researchers realized that the millions of CAPTCHA tests being completed every day could be put towards “doing something useful.” Instead of asking users to decipher a pre-programmed but otherwise arbitrary set of distorted letters, reCAPTCHA had users work on real-life problems, for instance authenticating snippets of hard-to-read text that were scanned as part of Google Books (Google went on to acquire reCAPTCHA in 2009). With reCAPTCHA, a means of verifying identity also became a means of capturing labor. Later, the range of reCAPTCHA tasks expanded to encompass other text problems as well as image recognition tasks (“select all squares with street signs”) that provide essential free labor towards Google’s AI programs.

Without being seen to do so, and without any of the hegemonic bombast of the traditional security question, reCAPTCHA has helped discipline a new generation of workers. The economic logic of the digital economy, built as it is on the collection of huge volumes of data, requires workers to accept the extraction of free labor and personal data at the same time as they perform compensated work or access services. CAPTCHA is a perfect verification tool for the new era, training us to be effective decentralized (and undercompensated) workers in the digital factory.

Nevertheless, CAPTCHA is at least visible. When we are asked to “select all squares with street markings” or “shop awnings” or “lamp posts,” the great churning info-collecting machine of Google pops into view. We might spend a moment considering what this information is being used for (“select all squares with military vehicles”) or why we should perform this labor in order to access our email, but we generally accept the task as the price of admission that presumably results in more security and fewer crawling bots.

Since 2014, however, text and image reCAPTCHAs have been joined by a less-visible sibling: “no CAPTCHA reCAPTCHA.” This cuts out the need for the picture-spotting “test,” instead relying on behavioral analysis running in the background, tracking how a user moves the mouse as well as assessing “normal” activity based on the vast trove of information that Google already has associated with specific IP addresses. The familiar but existentially disturbing “I am not a robot” checkbox is the best-known incarnation of no CAPTCHA reCAPTCHA. More recently, the “invisible” reCAPTCHA v3 provides an even more seamless CAPTCHA experience, intended to help “detect abusive traffic on your website without any user friction.” If unusual activity is suspected, the user may be required to complete an additional reCAPTCHA test before proceeding, but in normal cases the CAPTCHA runs invisibly, without any (conscious) interaction by the user, not even a humanity-affirming check box. The invisible CAPTCHA marks the arrival of an identity authentication regime that has receded entirely from view, thanks to the massive surveillance apparatus that is the modern internet.

The relationship between evolving forms of labor and the transition from one set of security protocols to another is not a causal one. Still, tracking the intersection of online identity verification and disciplinary labor regimes provides a window into the ways we are shaped by the systems and tools we use to access work, services, and community. The disciplinary influence of identity verification on the internet, of both the security question and the picture-test CAPTCHA variety, happens without our consent, and sometimes even without our notice. The development and deployment of these systems are historically situated, resulting as they did from the complex history of authentication protocols, influenced by the financial sector, and modernized to circumvent the internet-era threat of bot fraud. And while our interaction with these verification tests can be frustrating, exclusionary, or damaging, our regular engagement with them is a reminder of the intricate mechanics required to manage the complexities of human-computer interaction.

Historically, our interactions with security verification protocols also remind us that we are being surveilled. Security questions monitored our personal biographical data, and rewarded a life lived along normative lines. CAPTCHA picture tests remind us that Google is surveilling and digitizing the world with its Maps and Street View, and that our existence on the internet performs double-duty as users and as uncompensated click-workers. With Invisible reCAPTCHA, the authentication regime begins to disappear from view. The workers have been trained to function effectively in the networked digital factory, accepting the double-extraction of waged labor and free labor. The early days of spectacular surveillance have faded, leaving us to take for granted continual surveillance that happens behind the scenes, tracking our movements and behavior patterns.

In 2020, I no longer have a car. The disciplinary routine of the drive to work (gas station, backyard, strip mall, mom and dad) is no longer part of my day. Now, I work from home, from the cafe, from the library. As I slip back out of the acceptable boundaries of security-question life (goodbye suburbs, goodbye car), it’s clear that I haven’t evaded the hegemonic forces that seek to shape our lives and our labors. Instead, a glance in the rearview mirror shows that the labor force produced by the factory and the suburban organization of life is no longer as necessary to capital. In the elastic factory, the surveilled digital workplace, discipline can afford to become invisible.