In 2012, Joan Serrà and a team of scientists at the Artificial Intelligence Research Institute of the Spanish National Research Council confirmed something that many had come to suspect: that music was becoming increasingly the same. Timbral variety in pop music had been decreasing since the 1960s, the team found, after using computer analytics to break down nearly half a million recorded songs by loudness, pitch, and timbre, among other variables. This convergence suggested that there was an underlying quality of consumability that pop music was gravitating toward: a formula for musical virality.

These findings marked a watershed moment for the music discovery industry, a billion-dollar endeavor to generate descriptive metadata of songs using artificial intelligence so that algorithms can recommend them to listeners. In the early 2010s, the leading music-intelligence company was the Echo Nest, which Spotify acquired in 2014. Founded in the MIT Media Lab in 2005, the Echo Nest developed algorithms that could measure recorded music using a set of parameters similar to Serrà’s, including ones with clunky names like acousticness, danceability, instrumentalness, and speechiness. To round out their models, the algorithms could also scour the internet for anything written about a given piece of music and analyze it semantically. The goal was to design a complete fingerprint of a song: to reduce music to data to better guide consumers to songs they would enjoy.

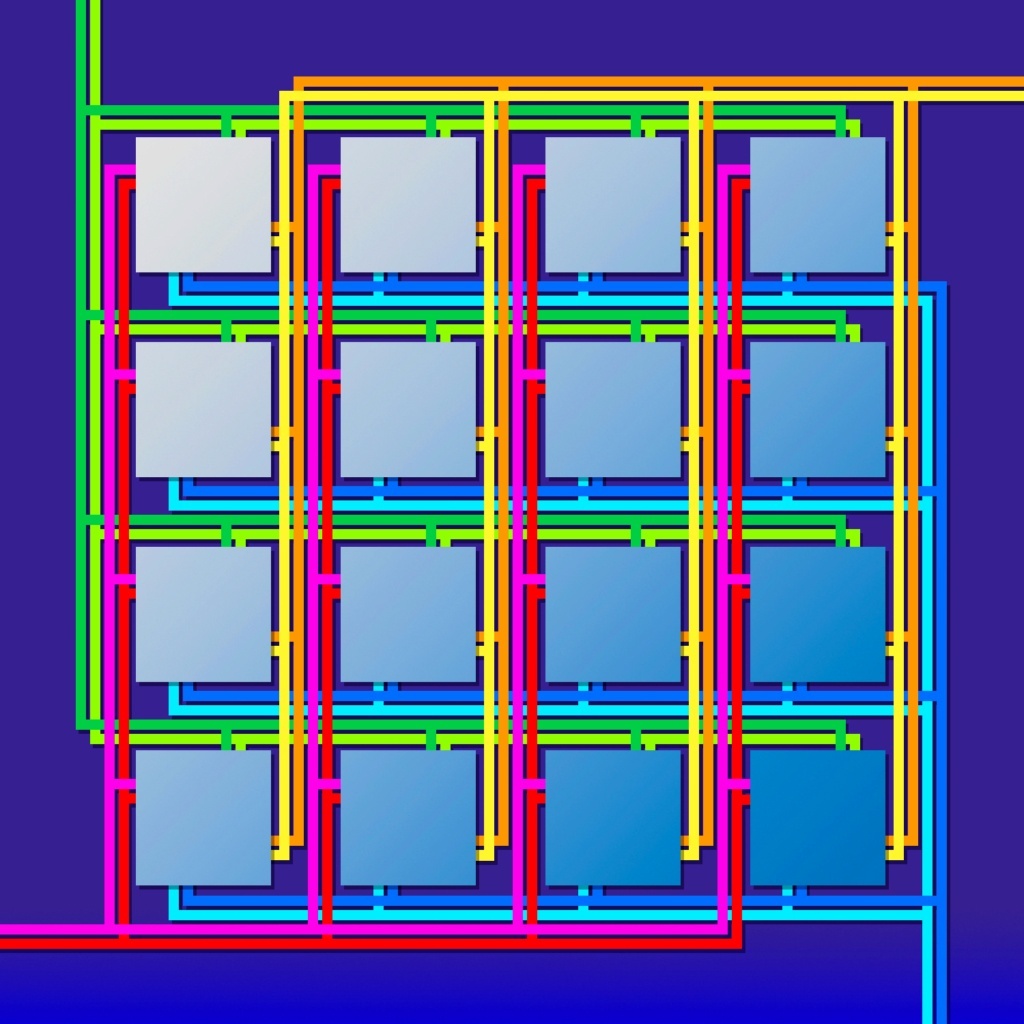

By the time Spotify bought it, the Echo Nest claimed to have analyzed more than 35 million songs, using a trillion data points. That data helped give Spotify extraordinary recommendation powers to track users’ listening habits and suggest new music accordingly, integrating data collection, analysis, and predictive intervention in a closed loop.

Philosopher of science Catherine Stinson describes such loops like this:

The sequence of events is a loop starting with a recommendation step based on the initial model, then the user is presented with the recommendations, and chooses some items to interact with. These interactions provide explicit or implicit feedback in the form of labels, which are used to update the model. Then the loop repeats with recommendations based on the updated model.

The result is that users keep encountering similar content because the algorithms keep recommending it to them. As this feedback loop continues, no new information is added; the algorithm is designed to recommend content that affirms what it construes as the user’s taste.
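The loop Stinson describes can be made concrete with a toy simulation. What follows is only a minimal sketch under stated assumptions, not a description of how Spotify or any real platform works: the catalog, the genre-count "model," and the function names `recommend` and `run_loop` are all hypothetical, and the simulated listener simply plays the first thing offered.

```python
from collections import Counter

def recommend(model, catalog, k=3):
    """Rank catalog items by how often their genre appears in the model."""
    # Ties are broken alphabetically by title so the result is deterministic.
    return sorted(catalog, key=lambda item: (-model[item[1]], item[0]))[:k]

def run_loop(catalog, initial_model, choose, rounds=5, k=3):
    """Repeat: recommend, let the user choose, update the model from the choice."""
    model = Counter(initial_model)
    history = []
    for _ in range(rounds):
        recs = recommend(model, catalog, k)
        for _title, genre in choose(recs):
            model[genre] += 1  # implicit feedback becomes a label
        history.append([title for title, _genre in recs])
    return model, history

# Hypothetical catalog of (title, genre) pairs.
catalog = [
    ("a1", "pop"), ("a2", "pop"), ("a3", "pop"),
    ("b1", "jazz"), ("b2", "jazz"), ("c1", "folk"),
]

# Assumption: the listener starts with one pop play and always plays
# the first recommendation they are shown.
model, history = run_loop(catalog, {"pop": 1}, choose=lambda recs: recs[:1])
print(history)
print(model)
```

In this sketch a single early pop play is enough to keep jazz and folk out of every later round: the model only ever learns from what it has already chosen to show.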

No streaming platform can accurately predict taste; humans are too dynamic to be predicted consistently. Instead, Spotify builds models of users and makes predictions by recommending music that matches the models. Stuck in these feedback loops, musical styles start to converge as songs are recommended according to a pre-determined vocabulary of Echo Nest descriptors. Eventually, listeners may start to resemble the models streaming platforms have created. Over time, some may grow intolerant of anything other than an echo.

Before there were Echo Nest parameters, the 20th-century music industry relied on other kinds of data to try to make hits. So-called “merchants of cool” hit the streets to hunt for the next big trend, conducting studies on teenage desire that generated tons of data, which was then consulted to market the next hit sensation. This kind of data collection is now built into the apparatus for listening itself. Once a user has listened to enough music through Spotify to establish a taste profile (which, like the songs themselves, can be reduced to data in terms of the same variables), the recommendation systems simply get to work. The more you use Spotify, the more Spotify can affirm or try to predict your interests. (Are you ready for some more acousticness?)

Breaking down both the products and consumers of culture into data has not only revealed an apparent underlying formula for virality; it has also contributed to new kinds of formulaic content and a canalizing of taste in the age of streaming. Reduced to component parts, culture can now be recombined and optimized to drive user engagement. This allows platforms to squeeze more value out of backlogs of content and shuffle pre-existing data points into series of new correlations, driving the creation of new content on terms that the platforms are best equipped to handle and profit from. (Listeners will get the most out of music optimized for Spotify on Spotify.) But although such reconfigured cultural artifacts might appear new, they are made from a depleted pantry of the same old ingredients. This threatens to starve culture of the resources to generate new ideas, new possibilities.

Outside the platform environment, social interaction is often generative; ideas are shared or generated collaboratively, and people influence each other in unpredictable ways. But within platforms, we are catalogued as data and compared with other people’s profiles in the system, a process known as collaborative filtering. Titles are recommended based on both a user’s taste profile and the profiles of others who consume similar content. Users then provide feedback in the form of clicks, and filtering algorithms adjust their recommendations accordingly. This may have the effect of broadening one’s exposure to different content, but on the platform’s terms and along the lines of its computational predictions. The platform flashes a mirror before you, which reflects back not just yourself but how you have been merged with many other people.
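A rough sense of how collaborative filtering merges one profile with others can be had from a few lines of code. This is a simplified, user-based sketch built on Jaccard overlap between sets of watched titles; the user names, profiles, and the function `recommend_for` are hypothetical, and real platforms use far more elaborate (and undisclosed) methods.

```python
def jaccard(a, b):
    """Share of titles two profiles have in common (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def recommend_for(user, profiles, k=2):
    """Suggest titles liked by users whose profiles overlap with this user's."""
    mine = profiles[user]
    scores = {}
    for other, theirs in profiles.items():
        if other == user:
            continue
        similarity = jaccard(mine, theirs)
        if similarity == 0:
            continue  # profiles with no overlap contribute nothing
        for title in theirs - mine:
            scores[title] = scores.get(title, 0.0) + similarity
    # Highest score first; ties broken alphabetically for determinism.
    return sorted(scores, key=lambda t: (-scores[t], t))[:k]

# Hypothetical taste profiles: each user is reduced to a set of titles.
profiles = {
    "ana": {"Friends", "The Office"},
    "ben": {"Friends", "The Office", "Seinfeld"},
    "cy": {"Stranger Things", "WandaVision"},
}

print(recommend_for("ana", profiles))
print(recommend_for("cy", profiles))
```

Under these assumptions, a user is only ever shown what their nearest statistical neighbors already watch, and a user with no neighbors is shown nothing at all.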

If you want to freeze culture, the first step is to reduce it to data. And if you want to maintain the frozen status quo, algorithms trained on people’s past behaviors and tastes would be the best tools. They “repeat our past practices,” as Cathy O’Neil said in a 2017 talk. A culture that thinks like an algorithm also “projects a future that is like the past,” James Bridle explains, because “that which is gathered as data is modelled as the way things are, and then projected forward — with the implicit assumption that things will not radically change or diverge from previous experiences.” In a world reliant on computation to make sense of things, “that which is possible becomes that which is computable.”

As greater efforts are made to break down music into parameters legible to computer algorithms, sonic differentiation in Western mainstream popular music may be reduced further, as the data of the analog years is re-injected into the present. Many new songs will be crafted as optimized rearrangements of the old ones, seeking to tap into the correlations detected and implemented by algorithmic analysis. If our tastes slightly change, the algorithm adapts, or it can try to nudge our tastes incrementally by force-feeding us the content it has calculated that we’d be most likely to engage with. Either way, the goal of a recommendation algorithm isn’t to surprise or shock but to affirm. The process looks a lot like prediction, but it’s merely repetition. The result is more of the same: a present that looks like the past and a future that isn’t one.

Nostalgia, then, is no longer just a matter of being “homesick” for the past but is actively abetted in modified forms today by the intervention of algorithms. Not only does this new nostalgia stem from a world rendered as data; it becomes bait to keep producing data.

Initially, platforms counted in part on an extrinsic nostalgia to bring users in: what might be called “retrobait.” When Instagram launched in 2010, it attracted users with the aura and limitations of analog photography, as did its early competitor Hipstamatic (and as new entrant Dispo is trying to do now). Instagram’s array of filters allowed users to coat their digital images in the haze of analog photographs before posting them and thereby turn mere moments into memories. It’s a strategy similar to embedding Easter eggs in new works that call back to older pop culture references, as with the recent movies Space Jam, Ready Player One, and Ralph Breaks the Internet.

As social media became more entrenched and ubiquitous, nostalgia began to be shaped directly by the nature of the platforms themselves, as with Timehop, which digs through old posts and shows users what they posted in the past, and the other similar algorithmic memory features that resurface content on its “anniversary.”

Streaming platforms, which blend and rebalance old and new content in their attempt to attract and hold users, frequently make recourse to retrobait, jockeying to secure the rights to coveted old content like The Office or Friends, for example. But at the same time, they also produce original content that recombines elements of past shows (much like the Echo Nest broke down songs into supposedly detachable core components) — a sort of refined retrobait.

On Netflix, one can find numerous examples, like Stranger Things, a series about a group of young kids in a fictional 1980s town who must battle the forces of evil, and House of Cards, which Netflix developed by studying the taste profiles of its subscribers. Likewise, Disney’s streaming platform Disney+ has played up the sitcom nostalgia in WandaVision, which paid homage to shows like The Dick Van Dyke Show, The Brady Bunch, Full House, Malcolm in the Middle, and Modern Family. And then of course there are the innumerable reboots, prequels, or sequels. In the music industry, the retrobait tendency has manifested as “streambait” or “Spotifycore,” music genres that rely on simple songwriting formulas stuffed with nostalgic references for algorithms to easily recommend: “the cheapest high in music,” according to music critic Jeremy Larson.

Independent social media accounts, following the incentives built into platforms, can achieve high visibility by producing their own retrobait. One can follow numerous “nostalgic aesthetics” accounts, like @publicschoolpizza, @rerunthe80s, and @vintage.cheese, that specialize in posting pop culture from the 20th century, from 1980s television commercials to vintage softcore pornography. Sometimes these accounts post content that imitates the styles of the past. There are dozens of “retrowave” or “synthwave” accounts on Instagram that mix old content with new content that just appears old, a valuable tactic for a brand like General Mills looking to synergize its retro marketing with social media. If I scroll through retrobait accounts on Instagram, the app will show me posts from retrobait accounts on my Explore page, and the feedback loop continues: nostalgia in, nostalgia out.

Old content under copyright is highly valuable to investment firms looking to cash in on nostalgia’s virality on social media. Funds like Hipgnosis and Primary Wave will purchase the rights to songs, promote them across social media, and then collect streaming royalties. After Fleetwood Mac’s “Dreams” went viral on TikTok in September 2020, Stevie Nicks, Lindsey Buckingham, and Mick Fleetwood sold their rights to a specialist fund, and soon after we got a new TikTok challenge.

Not that consumers want nothing but nostalgic content forever. But novelty is often circumscribed with the familiar: producers will write inclusivity into reboots (as with the 2016 Ghostbusters), film universes are expanded (from the MonsterVerse to the Marvel Cinematic Universe), old canons are abolished for new ones (like the 2018 Halloween reboot, which ret-conned all films in the series save the original), and ever-more niche micro-trends of the past are revived (like the Y2K coconut girl aesthetic). These gestures refresh the intellectual property of yesterday for algorithms to amplify and give corporations new angles to market nostalgia.

Nostalgia has become a template for the serial production of more content, a new income stream for copyright holders, a new data stream for platforms, and a new way to express identity for users. And there’s so much pop culture in the past to draw from that platform capitalism will seemingly never run out. We’re told our data is collected in an attempt to predict what we want, but this isn’t quite true. In attempting to predict our tastes, streaming services work to produce them in their own image. Since algorithms are trained on the past, they aren’t merely transmitting nostalgia through neutral channels; they’re cultivating nostalgic biases, seeking to predispose users to crave retro.

Meanwhile, Big Tech speaks the rhetoric of futurity, promising immersive experiences and digital solutions with its technologies. But even as Silicon Valley positions itself as progressive, its algorithms are stuck in the past.

Predictive algorithms don’t really predict anything; they just make certain kinds of pasts repeatedly reappear. These tend to privilege specific understandings of history (ones that confirm biases or stereotypes, rendering the existing distribution of power as nostalgically justified), while downplaying or outright obscuring perspectives (ones that center the experience of marginalized people). They are generally the profitable versions of history drawn from media representations and starring the IP of the largest media conglomerates: Marty McFly inventing rock and roll; the Summer of Love without 1960s progressive movements; Pentagon-approved Marvel superheroes; drag races where no one dies, not even James Dean.

Such depictions of the past are quasi-“official” records that serve state power or launder existing privilege, as with the whitewashing of the Atlantic slave trade; the progress narratives that privilege “great men” like Christopher Columbus and Robert E. Lee; or the Santa Clausification of Martin Luther King Jr. They erase shades of nostalgia that do not conform to what Badia Ahad-Legardy calls the “monolithic understanding of nostalgia”: that is, the hegemonic strain of nostalgia that circulates white, normative, consumerist yearnings.

The data of the past is often violent and imperial — a “colonial mathematics,” as historian Theodora Dryer writes. They are the numbers of historical racism and intolerance. Algorithmic recommendation attempts to transform this data into nostalgia, into the repetition of stories that rationalized oppression. But it draws on the same sorts of biased information that posits crime where it has happened before, naturalizes wealth disparities, or reinscribes stereotypes because they are already too familiar. As James Bridle writes of predictive algorithms, “To train these nascent intelligences on the remnants of prior knowledge is thus to encode … barbarism into our future.”

At scale, algorithmic determinism locks people and events in repeating loops, a homogenizing process that mirrors a larger homogenization of society itself: the flattening of unique places into anonymous nonplaces and the consolidation of media corporations. With mounting hype for the metaverse, a new era of nostalgic hegemony is promised. Silicon Valley has long dreamed of virtual reality, and virtual reality narratives like Ready Player One and the Black Mirror episode “San Junipero” often promise nostalgic fulfillment in hypothetical digital heavens — another recombination of old and new. Outside, society is crumbling, but a virtual universe provides hopeless people with an escape: a managed environment where they can adopt avatars, hang out on Minecraft World, and climb Mount Everest with Batman.

Although the technology hasn’t yet caught up with the dream, the metaverse is already being hailed as a digital realm where intellectual properties can intermingle. You can be a superhero, or a giant robot, and you can spend your life hunting for pop culture Easter eggs. The metaverse promises to be a world for us, like all virtual reality discourses do, but it will be premised on and financed by the extraction of consumer data, and it will train its algorithms to promote the intellectual property of Disney and Warner Bros. while neutralizing any form of social change.

Reducing culture and consumers to data will continue to produce the same representations of nostalgia for backward-looking algorithms to recommend. Those who worship the power of digital technology may believe that we are on track to a utopia where people can escape from the future we’ve made. But if we let algorithms predict the future for us all, we will find there is nowhere to go but back.