Over the past decade or so, the way most of us interact with maps has been completely inverted. Once, as geographer Doreen Massey noted in For Space (2005), “the dominant form of mapping” placed “the observer, themselves unobserved, outside and above the object of the gaze.” With paper maps, this is self-evidently true: The map is already printed and doesn’t take into account your particular situation; as far as the geographic image is concerned, you are nowhere and anywhere. But with GPS-powered mapping services, the matter is less clear-cut. Google Maps, for instance, places us within our own gaze, on the map, as a blue dot projecting its cone of perception in a particular direction. This doesn’t posit us as transcending the territory we survey but the opposite: It offers a misleadingly clear picture of our precise place within one particular rendering of the charted world.

But in the process, this centering of ourselves on a visible map conceals a larger and more detailed map that we don’t see, one that is constantly evolving and from which certain details are surfaced as if they naturally took precedence. What is presented as a map of “what’s really there” draws on intertwined webs of surveillance that track us in ways we can’t observe and associate us algorithmically with any number of phenomena for reasons we don’t quite understand, if we are made aware of them at all. The dot doesn’t merely show us where we are; it inscribes us into a world remade on the fly for particular gazes. What we see is, in part, an obscure map of our character.

This can play out in surprising ways. For example, when a guy on Tinder searched my somewhat unusual first name on Google Maps, the app did not return an anticlimactic “no results found” but sent him directly to the New School, my alma mater, which seemed like a striking coincidence. My name does appear on a few websites where “New School” also appears, but it is not me and I am not it. Or am I?

We can guess why that might have happened, but we can’t know for sure, and that’s concerning. Google Maps, it turns out, is rife with associational glitches, in which people become places and places become character traits. Searching “avarice” sent me straight to the Metropolitan Museum of Art; “hostility,” to a small-town doctor in Orangeburg, New York. “Drab” took me to some place called BOOM!Health Prevention Center, now permanently closed. Some of these associations stem directly from users’ contributions, intentional or not: The query “What is in energy drinks” appears on Maps as a business (as documented in a viral tweet), which Street View reveals to be a nondescript suburban home, presumably occupied by someone unfamiliar with how both Red Bull and Google work. Street View itself is full of obviously mistagged photos, some accidental, some deliberate. In 2014, a Google Maps editor approved an unknown user’s request to rename Berlin’s Theodor-Heuss-Platz as Adolf-Hitler-Platz, a change that stood for almost 24 hours.

But many associations are inexplicable from the outside; what produces or prevents them can’t be determined. It’s hard to believe that the word “ambiguity,” for example, appears on no website anywhere that is even indirectly associated with a Maps location, yet searching it pulls up nothing. (“Google Maps can’t find ambiguity.”) The cause of these inconsistencies is opaque, and whatever programmed threshold for potential relevance exists is unclear. Nor is it evident what the map search bar is capable of. After all, it is not absurd to imagine using it to find out where a friend is, or which places are commonly associated with which emotional reactions or experiences. The technology for this already exists. The question is the degree to which it is operating behind our backs, on the map of proliferating connections and data points that lies behind the map generated for us to see.

While searching for ideas in the location bar might seem like a whimsical experiment, the obscure or faulty correlations it occasionally turns up illustrate an algorithmic mystery with broader ramifications. In a small way, it hints at the conduits linking the ubiquitous surveillance we are placed under to our experience of the world, and at how these hidden correlations become potential vulnerabilities. At the same time, it suggests how the experience of location itself has been made more subjective, shaped less by the characteristics of a place that anyone can observe and more by the tailored search results presented about it.

From a user’s perspective, Google Maps is basically a geocoded version of any other Google search, except that the results are displayed as points on a map rather than as a list of websites. It can handle both objective and subjective queries: It can show you the location of a specific street address or tell you where the “nearest” liquor store is. Even before it is asked anything, it draws on multiple available data layers to produce a map that orients users in specific ways: Certain information is presented by default (commercial locations deemed relevant to the user), certain information is obscured (the names of streets or transit stops), and certain information is entirely inaccessible.

The layered logic of Google Maps derives from a framework known as a geographic information system, or GIS. The first GIS was developed in 1963 by English geographer Roger Tomlinson and used by the Canadian government to implement its National Land-Use Management Program. GIS was later adapted for military use. In 1992, geographer Neil Smith called the first Gulf War “the first full-scale GIS war,” as GIS allowed the military to map the conditions of enemy terrain and locate targets from afar. People watching on television could enjoy reports that drew on GIS features, whether or not they were identified as such, and that rendered the landscape of combat almost in real time.

For Smith and other 1990s-era critics, war-making was built into GIS frameworks, poisoning the other applications that draw from them with the same “technocratic turn.” Smith quotes P.J. Taylor, who associates GIS with a “return of the very worst sort of positivism, a most naive empiricism,” in which data is seen as neutral and “any broader questions of social and political context” are ignored. But other geographers since then, including Stacy Warren and Marianna Pavlovskaya, have challenged that characterization of GIS and argued for its potential qualitative and democratic uses, such as open-source community map-making.

The advent of commercial GIS applications like Google Maps hasn’t resolved concerns about the supposed neutrality of maps and how their data is presented. Instead, maps are now marked by an additional tension between the self-evident usefulness of on-demand geographic data and the kinds of surveillance necessary to make it possible. Not only are the layers of information you see on Google Maps neither objective nor neutral, but the fact that you see these particular layers indicates that Google knows where you have been.

Broadly, we understand that what we do and search for online is being tracked, and that various algorithms pick up on key data and point it back at us in the form of sponsored posts, targeted advertising, and personalized search results. As Google’s brief public walkthrough of its search-engine algorithms (which are proprietary and closely guarded trade secrets) explains, the user’s search history and IP address are taken into account along with the search terms themselves. That is, results depend not on public or correctable data but on the rigorous, automatic documentation of a user’s activities.

Similar calculations dictate what non-geospatial data is presented on Google Maps: It is selected on the basis of a precisely calculated estimate of the person who happens to be looking. These selections are meant to be revealing; they purport to indicate something concrete about who you are, what you do or care about, or what you’re searching for this time. Such data may or may not be immediately relevant to, say, a given police query, but the mere presumption of relevance can be enough to render it a threat. Data from Google’s GPS location tracking has been turned over to police in response to “reverse-location,” or geofence, warrants, which compel companies to provide data on every cell-phone signature that appeared within a given geographic radius during a given time frame. The information is anonymized at first; names and phone numbers are matched with the anonymous signatures as police narrow their search. Per recently released data from Google, the company received 8,396 such requests in 2019 and 11,554 in 2020. (These figures may be an undercount.) Such warrants can be targeted at protesters, as when the Minneapolis Police Department obtained a geofence warrant related to the May 2020 uprising, and can lead to false arrests, as in the case of Jorge Molina, whom geofencing data placed at the scene of a crime by way of an old phone he no longer owned.

The cops also Google you. Not only can they easily comb social media for explicit evidence of your interests, associations, and locations; they can also draw on the inferences about you that Google has embedded in its results. Google’s geosurveillance mechanisms could be vulnerable to the same basic sorts of glitches as its standard search functions: When queried, they are determined to produce some result, any result, rather than draw a blank. Whether this could be countered by deliberate contamination of data, for example through “data poisoning” (as in Apple’s patented “techniques to pollute electronic profiling”) or Google bombing (massing links, often from irrelevant pages, to push specific results to the top), is unclear.

It’s not incidental that the geolocation technology that locates us on a map when we’re looking for that liquor store is the same technology that locates us for the police: While we’re tracking ourselves on the grid for our own purposes, we’re also being tracked for someone else’s, and neither activity exists independently of the other. And while, UX-wise, Google Maps may be a sort of GIS lite, the practical mechanisms of Google’s geolocation programming are subject to many of the same critiques GIS faced, especially in its early stages as a military surveillance tool: that a seemingly innocuous, or at least morally adaptable, instrument is in fact not so, and that it lends itself inherently to the kind of data warehousing that facilitates overzealous and malicious exercises of state power. These critiques perhaps apply more aptly to Google Maps (privately owned, closely guarded, and immensely lucrative) than they ever did to the concept of GIS in general, which can serve as a more transparent resource for non-government and non-military actors.

It’s easy to understand that a GIS map does not itself constitute some whole empirical fact: By its very design, a GIS map results from the selection of some data layers and not others, with some features of the world deliberately made visible and others deliberately obscured. It inarguably presents some factual information, as when the distances and altitudes it represents are reliable and mathematically accurate. The aura of the map’s accuracy, though, can obscure the manipulative nature of other layers. It can serve as an alibi for the way apps like Google Maps don’t simply show you where you are but direct you to go to certain places and absorb certain information while placing you under observation. Maps are rhetorical; they are anything but a neutral tool.

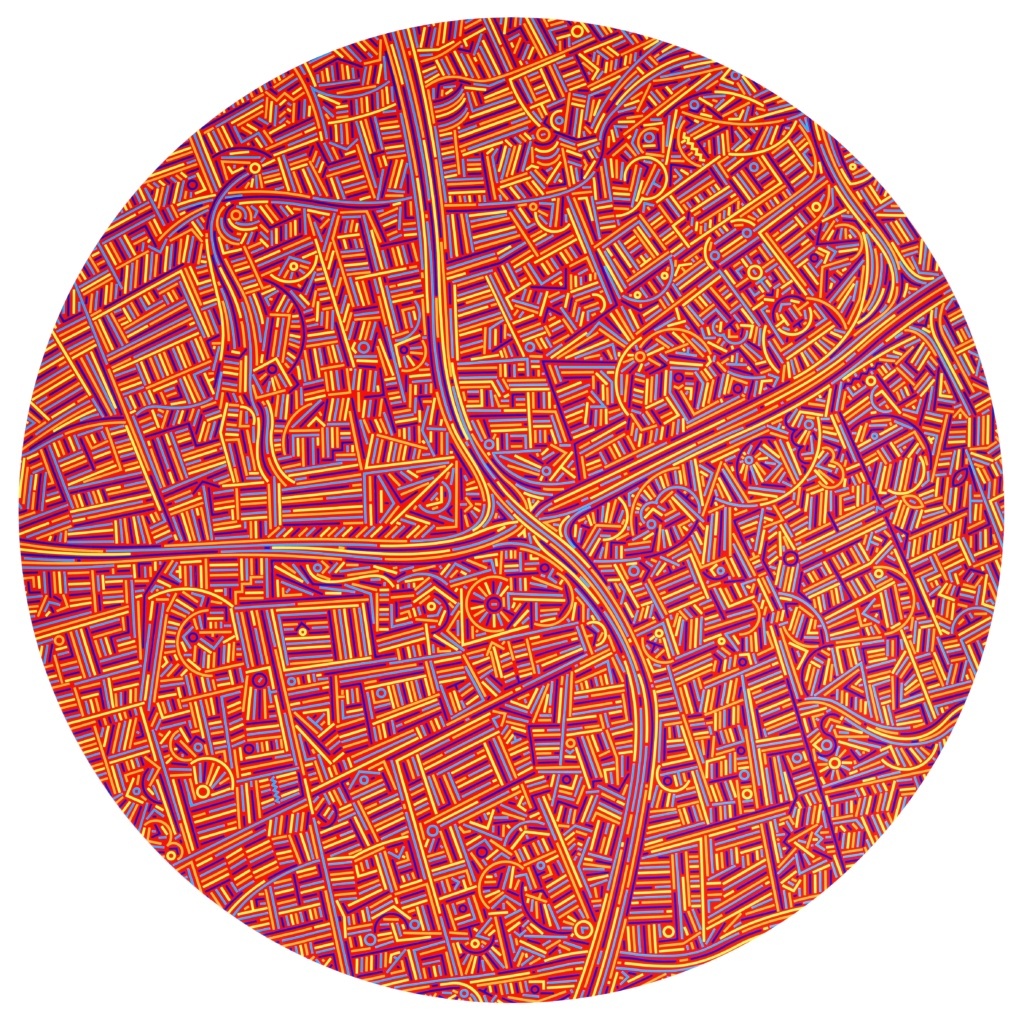

As geographer Clancy Wilmott writes, “digital maps … hide algorithmic workings and smooth scrolling surfaces that give the illusion of a representational flatness while hiding integral architectures of binary logic, digital codes and coordinates, and lines of commands.” This illusion, paired with an imperfect search function, can also break down in plain view: Its glitches taunt us by deeming us the very embodiment of a liberal arts college, or they offer poignant insight into the intractable greed of the Met. They can even be accidentally illuminating, one more angle into the weird worlds of our algorithmic reflections.

In some respects, we are whoever the algorithm thinks we are. It can seem pointless to insist on an independent self in a realm defined by search results: Who else could we be to an algorithmic system but our data? Something similar is true of an algorithmically driven map system: Where we are, too, is not independent of our data but is, in a sense, dictated by it.

In general, maps are not neutral presentations of absolute geographical truth but precisely constructed ways of seeing, made transmissible and available for consumption. Cartography produces geography as much as it represents it. From Henri Lefebvre on, representations of space (maps, as well as schemata drawn from engineering and architecture) have been understood as foundational to the way capitalist spatial organization is produced and experienced.

Algorithms (search engines, generators of sponsored posts and targeted ads, curated feeds on social media, and so on) function similarly to geographic mapping: Their products orient us in a world. They ostensibly present some truth about us but actually generate a hazy new terrain of the self. This terrain emerges from and is subject to the same mechanisms of surveillance as our geographic whereabouts. The uneasy fusion of place, identity, and surreptitious data collection carries implications still to be untangled. “Where are you?” is always an existentially loaded question. Ultimately, applications like Google Maps may offer too much of an answer.