Algorithms

The encoded systems that are influencing our lives and the world

Magic Numbers

The creators of so-called SpiritualTok (mediums, astrologers, tarot readers, Reiki healers, and others) impute supernatural powers to algorithmic sorting to boost their own metrics. But treating “the algorithm” as a kind of divinity fundamentally obscures the nature of its power.

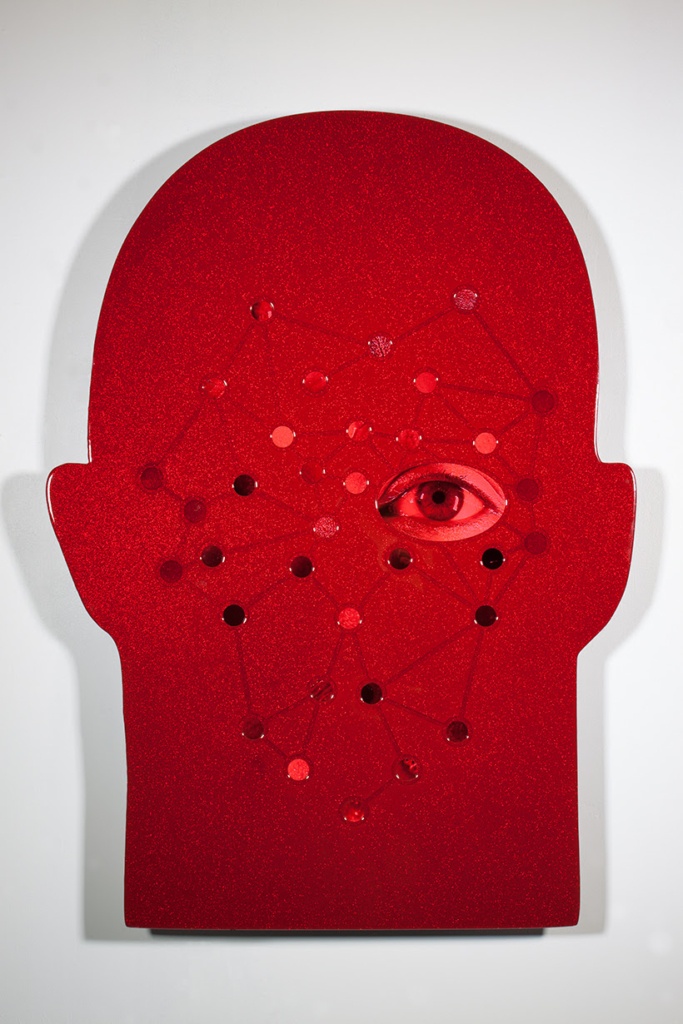

Mirror of Your Mind

When algorithmic feeds begin showing users content about specific health conditions, users will likely feel as though they are being diagnosed with them. Within social media’s systems of self-differentiation, every kind of content can be understood as a symptom. Identity becomes a kind of chronic disease that is constantly trying to cure itself.

I Write the Songs

TikTok’s recommendation algorithm is heralded as the secret of the app’s success, but why do users want to be told what to watch? Algorithms that purport to predict who we are and what we want are “gimmicks,” in Sianne Ngai’s sense of the word: They let us enjoy our ambivalence over surrendering to them.

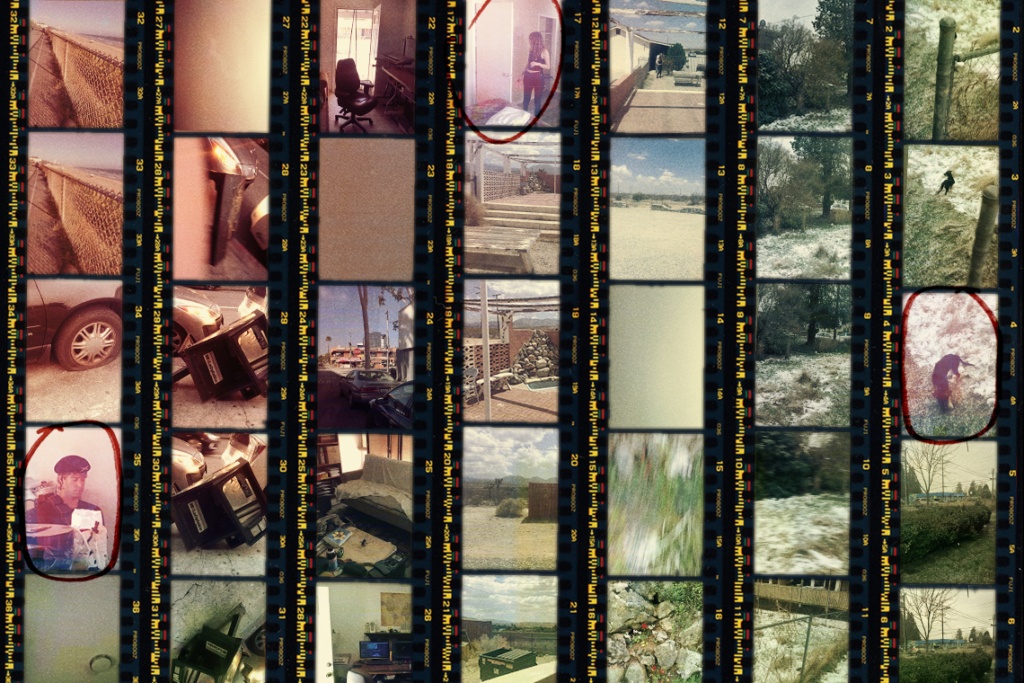

Manufactured Recollection

When the same kinds of algorithms determine both which of our photos we should revisit on social media and which ads perform best, our memories will inevitably come to look more like the advertising campaigns and paid influencer posts that surround us. The social consensus around what is “worth” remembering is becoming more tethered to an image’s commercial viability.

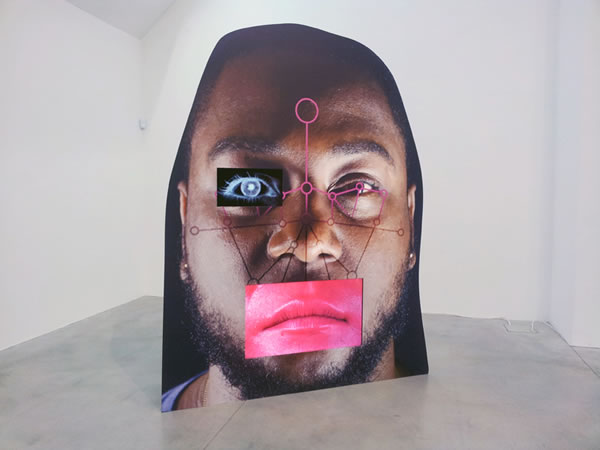

Friction-Free Racism

Silicon Valley has learned to profit by selling “friction-free” interactions, interfaces, and applications as a form of convenience. With these, a user doesn’t have to engage with other people or even see them. The racism and othering implicit in this are rendered at the level of code, so users can feel innocent rather than complicit.

Odd Numbers

The “algorithmic accountability” movement seeks to make the use of algorithms more fair and transparent, but it can also be used as a rationale for making them more pervasive. To be truly critical, algorithmic accountability must not rule out the possibility of rejecting certain uses for algorithms altogether.

Just Randomness?

Historically, fairness has often been grounded in randomness, as with the drawing of lots. Some contemporary uses of algorithms try to be fairer by being blinder, as though this made bias impossible. The trouble with this approach is that it makes us too willing to accept justice without justification, along with a view of society in which all relations are arbitrary.

Time After Time

Algorithmically sorted feeds are often seen as the opposite of “unsorted” chronological feeds. But this overlooks the fact that chronology is itself a basic sorting algorithm. Linear time is so deeply foundational to our worldview that it’s almost impossible to conceive of time any other way, yet social media feeds show that there are other ways of organizing temporal events.

Sick of Myself

Under economic conditions in which maximizing our “human capital” is paramount, we are under unremitting pressure to make the most of ourselves and our social connections and put it all on display to maintain our social viability. Having algorithms track and posit our identity as a coherent whole may serve as a respite from all that work of producing ourselves as assets.

Force Fed

Algorithmic feeds deployed to track preferences and tastes are better understood as a means of reproducing prevailing social conditions, organized in terms of prescribed identities. Wealthy, white, and male subjects face algorithmic sorting meant to stoke their supremacist desires. Everyone else faces a more vulgar, violent kind of sorting in terms of race, gender, sexuality, or ability.

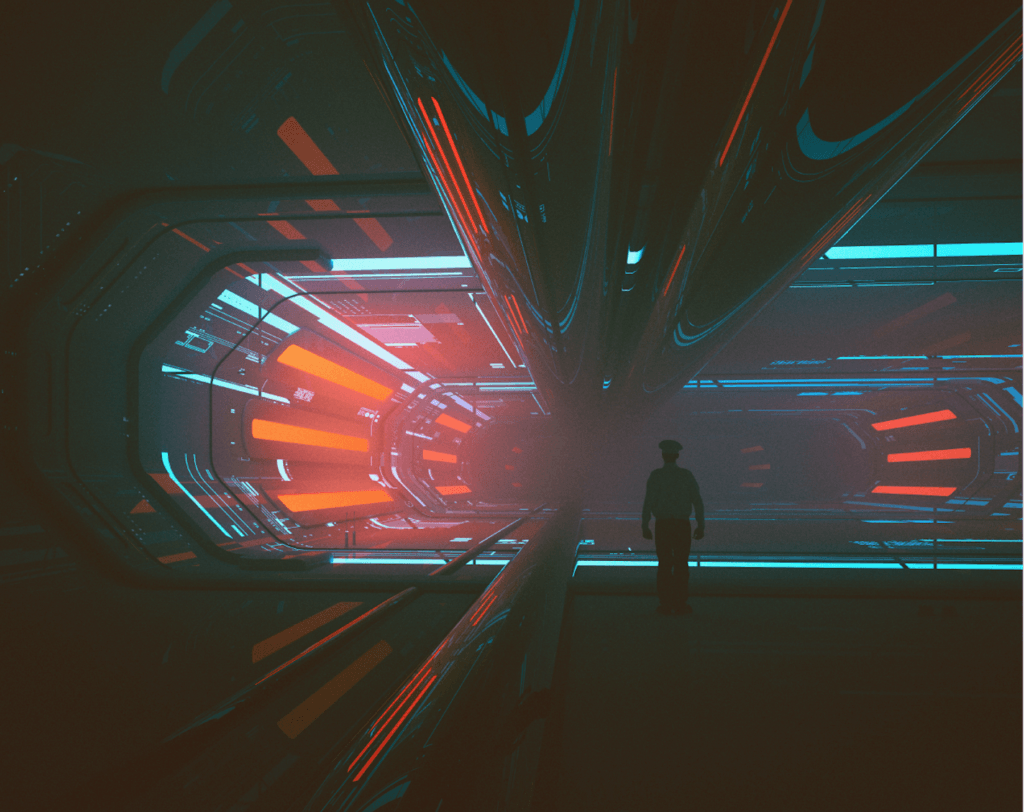

Rule by Nobody

Algorithms may be sold as reducing bias, but their chief aim is to generate profit, power, and control. When they are working well, they are not working for us at all. They function as the equivalent of bureaucracy rendered in digital code, implementing outcomes while diffusing responsibility.

Broken Windows, Broken Code

Data-driven policing systems like CompStat try to use past crime data to anticipate future occurrences and deploy resources accordingly. But in practice, they help produce the crimes and the types of criminals that a racist carceral state requires, reifying and reproducing structural inequality in the process.